Exploring the Potential of GNN in TensorFlow: A Deep Dive into Graph Neural Networks

The Power of Graph Neural Networks (GNN) in TensorFlow

Graph Neural Networks (GNNs) have emerged as a powerful tool for analysing and learning from graph-structured data. In the realm of machine learning, GNNs have shown great promise in various applications such as social network analysis, recommendation systems, and bioinformatics.

TensorFlow, one of the most popular deep learning frameworks, supports GNN development through its Keras API and the dedicated TensorFlow GNN (TF-GNN) library. Combined with TensorFlow’s flexible architecture and high-performance computation, these tools let developers build and train complex GNN models for real-world problems.

A key advantage of GNNs is their ability to capture intricate relationships and dependencies within graph data. Through message passing, a GNN propagates information across the nodes of a graph: each node repeatedly gathers information from its neighbours and updates its own representation, so the learned representations reflect the underlying structure of the data.

Furthermore, the TensorFlow ecosystem simplifies designing and training GNN models. Core TensorFlow supplies the low-level operations (gathering node features along edges and aggregating them per node), while libraries such as TF-GNN add higher-level building blocks, from graph convolutional layers to graph attention mechanisms, that let developers assemble GNN architectures tailored to their specific needs.
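To illustrate how such a building block reduces to core TensorFlow ops, the sketch below implements a simplified, single-head attention aggregation in the spirit of graph attention networks (GAT). It assumes the graph is given as an edge list of (source, target) index pairs. The function name gat_aggregate and its parameters are hypothetical; a production model would more likely use a library layer such as the ones TF-GNN provides.

```python
import tensorflow as tf


def gat_aggregate(h, edges, w, a, num_nodes):
    """Single-head, GAT-style attention aggregation (simplified sketch).

    h:      [num_nodes, in_dim] float node features
    edges:  [num_edges, 2] int tensor of (source, target) pairs
    w:      [in_dim, out_dim] shared projection matrix
    a:      [2 * out_dim] attention vector
    Returns aggregated features [num_nodes, out_dim] and one weight per edge.
    """
    src, dst = edges[:, 0], edges[:, 1]
    wh = tf.matmul(h, w)  # project every node once
    # Score each edge from the concatenated target and source projections.
    pair = tf.concat([tf.gather(wh, dst), tf.gather(wh, src)], axis=-1)
    scores = tf.nn.leaky_relu(tf.linalg.matvec(pair, a), alpha=0.2)
    # Softmax over the incoming edges of each target node ("segment softmax");
    # subtracting the per-target maximum keeps exp() numerically stable.
    seg_max = tf.math.unsorted_segment_max(scores, dst, num_nodes)
    exp_scores = tf.exp(scores - tf.gather(seg_max, dst))
    denom = tf.math.unsorted_segment_sum(exp_scores, dst, num_nodes)
    alpha = exp_scores / tf.gather(denom, dst)
    # Attention-weighted sum of source projections; nodes with no
    # incoming edges receive a zero vector.
    out = tf.math.unsorted_segment_sum(
        alpha[:, None] * tf.gather(wh, src), dst, num_nodes)
    return out, alpha


# Usage on a toy graph: node 1 receives from nodes 0 and 2, node 0 from node 1.
edges = tf.constant([[0, 1], [2, 1], [1, 0]])
out, alpha = gat_aggregate(tf.eye(3), edges, tf.eye(3), tf.ones([6]), num_nodes=3)
print(alpha.numpy())  # [0.5 0.5 1. ]
```

Because both incoming edges of node 1 get the same score here, they share its attention equally; with learned w and a, the weights would differ per neighbour.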

Whether you are a researcher exploring new graph-based algorithms or a practitioner looking to add graph analytics to your machine learning pipelines, incorporating GNNs into your TensorFlow workflow can unlock new possibilities and insights.

As the field of graph neural networks continues to evolve, TensorFlow and its GNN tooling continue to support both research and production applications in this domain. By harnessing GNNs in TensorFlow, you can start unlocking the potential of graph-based learning for your own data.

Exploring Graph Neural Networks in TensorFlow: Key Concepts, Benefits, and Applications

- What is a Graph Neural Network (GNN) in the context of TensorFlow?

- How can Graph Neural Networks benefit machine learning tasks in TensorFlow?

- What are the key components of implementing a GNN in TensorFlow?

- Are there any pre-built GNN models available in TensorFlow for quick deployment?

- What are some popular applications of Graph Neural Networks in TensorFlow?

- How does message passing work in the context of GNNs and TensorFlow?

- What resources or tutorials are recommended for learning to use GNNs in TensorFlow?

- Can GNNs be combined with other neural network architectures within TensorFlow?

What is a Graph Neural Network (GNN) in the context of TensorFlow?

A Graph Neural Network (GNN) in the context of TensorFlow is a deep learning model, built with TensorFlow’s APIs, that is designed specifically for graph-structured data. GNNs capture complex relationships and dependencies within graphs through techniques such as message passing and graph convolutions. In TensorFlow, a GNN is assembled from graph layers, whether written with core TensorFlow and Keras or taken from a library such as TF-GNN, that learn meaningful representations of nodes, edges or whole graphs from the input data. With TensorFlow’s computational capabilities and flexible architecture, developers can construct and train GNN models for a wide range of tasks, from social network analysis to recommendation systems. Overall, GNNs in TensorFlow offer a versatile and efficient way to process graph data and extract valuable insights from it.
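As a concrete example of a graph convolution, here is a minimal sketch of a layer following the GCN propagation rule H' = σ(D^-1/2 (A + I) D^-1/2 H W), where A is the adjacency matrix, I adds self-loops, D is the degree matrix of A + I, H holds the node features and W is a learned weight matrix. It assumes a small, undirected graph stored as a dense adjacency matrix. The names normalize_adjacency and GCNLayer are hypothetical and not part of TensorFlow.

```python
import tensorflow as tf


def normalize_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a dense, symmetric adjacency matrix A."""
    a_hat = adj + tf.eye(tf.shape(adj)[0], dtype=adj.dtype)  # add self-loops
    d_inv_sqrt = tf.math.rsqrt(tf.reduce_sum(a_hat, axis=1))  # degrees of A + I
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


class GCNLayer(tf.keras.layers.Layer):
    """One graph convolution: activation(norm_adj @ features @ W)."""

    def __init__(self, units, activation="relu", **kwargs):
        super().__init__(**kwargs)
        self.linear = tf.keras.layers.Dense(units, use_bias=False)  # the weight matrix W
        self.activation = tf.keras.activations.get(activation)

    def call(self, features, norm_adj):
        # Transform node features, then mix each node with its neighbours.
        return self.activation(tf.matmul(norm_adj, self.linear(features)))


# Usage: a 3-node path graph 0 - 1 - 2 with one-hot features.
adj = tf.constant([[0., 1., 0.], [1., 0., 1.], [0., 1., 0.]])
norm_adj = normalize_adjacency(adj)
hidden = GCNLayer(4)(tf.eye(3), norm_adj)
print(hidden.shape)  # (3, 4)
```

The dense matrix keeps the arithmetic visible but needs memory quadratic in the number of nodes; for larger graphs an edge-list formulation with sparse or segment operations is the usual choice.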

How can Graph Neural Networks benefit machine learning tasks in TensorFlow?

Graph Neural Networks (GNNs) extend machine learning in TensorFlow to data whose structure is a graph. Their key advantage is the ability to capture complex relationships and dependencies between interconnected data points, which models that treat each example independently cannot see. In TensorFlow, GNNs model these graph structures directly and propagate information across nodes, so each node’s learned representation encodes not only its own features but also patterns in its neighbourhood. On tasks where relationships matter, these richer representations can improve the performance and accuracy of machine learning models. By leveraging GNNs in TensorFlow, developers can unlock new insights and capabilities for a wide range of applications, from social network analysis to recommendation systems and beyond.

What are the key components of implementing a GNN in TensorFlow?

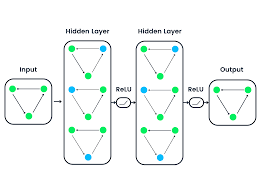

When implementing a Graph Neural Network (GNN) in TensorFlow, several key components play a crucial role in building an effective model. First, the graph structure (for example, an edge list or an adjacency matrix) and the node features must be defined so that they represent the data accurately. Next, graph convolutional layers perform message passing between nodes to capture relational information within the graph. Activation functions between these layers give the model its non-linear capacity, while pooling (readout) operations aggregate node representations into a single graph-level representation for tasks such as graph classification. Attention mechanisms and edge features can also be integrated to help the GNN focus on the most relevant nodes and edges. Finally, gradient-descent-based optimisers, together with regularisation methods, are vital for training the GNN efficiently and preventing overfitting. By carefully considering these components, developers can create robust models capable of learning complex patterns from graph-structured data.
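The components above can be tied together in a short node-classification sketch: an edge list and node features define the graph, two message-passing layers with a ReLU between them form the model, and the Adam optimiser with L2 weight regularisation trains it. The toy graph, the labels and the class names MeanMessagePassing and NodeClassifier are hypothetical. Pooling is omitted because this task predicts one label per node rather than one per graph.

```python
import tensorflow as tf

tf.keras.utils.set_random_seed(0)

# 1. Graph structure and node features (hypothetical toy data).
edges = tf.constant([[0, 1], [1, 0], [1, 2], [2, 1], [2, 3], [3, 2]])  # (source, target)
features = tf.constant([[1., 0.], [1., 0.], [0., 1.], [0., 1.]])
labels = tf.constant([0, 0, 1, 1])


class MeanMessagePassing(tf.keras.layers.Layer):
    """2. Message passing: combine each node's state with the mean of its neighbours'."""

    def __init__(self, units, l2=1e-4, **kwargs):
        super().__init__(**kwargs)
        reg = tf.keras.regularizers.l2(l2)  # 4. L2 regularisation on the weights
        self.self_proj = tf.keras.layers.Dense(units, kernel_regularizer=reg)
        self.neigh_proj = tf.keras.layers.Dense(units, use_bias=False, kernel_regularizer=reg)

    def call(self, h, edges):
        src, dst = edges[:, 0], edges[:, 1]
        neigh_mean = tf.math.unsorted_segment_mean(tf.gather(h, src), dst, tf.shape(h)[0])
        return self.self_proj(h) + self.neigh_proj(neigh_mean)


class NodeClassifier(tf.keras.Model):
    def __init__(self, hidden, num_classes):
        super().__init__()
        self.mp1 = MeanMessagePassing(hidden)
        self.mp2 = MeanMessagePassing(num_classes)

    def call(self, h, edges):
        h = tf.nn.relu(self.mp1(h, edges))  # 3. activation adds non-linearity
        return self.mp2(h, edges)           # per-node class logits


model = NodeClassifier(hidden=16, num_classes=2)
optimizer = tf.keras.optimizers.Adam(learning_rate=0.05)  # 5. gradient-based optimiser
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

for step in range(100):
    with tf.GradientTape() as tape:
        logits = model(features, edges)
        # Task loss plus the L2 penalties collected from the Dense layers.
        loss = loss_fn(labels, logits) + tf.add_n(model.losses)
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

print(tf.argmax(model(features, edges), axis=1).numpy())
```

For a graph-level task, a readout such as tf.reduce_mean over the node axis would be applied to the final node representations before a classification head.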

Are there any pre-built GNN models available in TensorFlow for quick deployment?

When exploring Graph Neural Networks (GNNs) in TensorFlow, a common question is whether pre-built models are available for quick deployment. Core TensorFlow itself does not include GNN layers, but the TensorFlow GNN (TF-GNN) library provides ready-made building blocks and model templates, including graph convolutional network (GCN), graph attention (GATv2) and GraphSAGE layers, along with a GraphTensor type for representing heterogeneous graphs. By starting from these pre-built components, users can shorten development and focus on applying GNNs to their own problems.

What are some popular applications of Graph Neural Networks in TensorFlow?

Graph Neural Networks (GNNs) in TensorFlow have found widespread applications across various domains due to their ability to model complex relationships within graph-structured data. Some popular applications of GNNs in TensorFlow include social network analysis, where GNNs can uncover community structures and predict connections between users. In recommendation systems, GNNs can leverage user-item interactions to provide personalised recommendations. Additionally, in bioinformatics, GNNs have been used for protein interaction prediction and drug discovery. By harnessing the power of GNNs in TensorFlow, researchers and practitioners can unlock new insights and solutions in diverse fields through the analysis of graph data.
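Predicting connections between users is usually framed as link prediction. One common approach, sketched below under the assumption that a GNN has already produced one embedding per node, scores a candidate edge by the dot product of its two endpoint embeddings passed through a sigmoid. The function link_scores and the toy embeddings are hypothetical.

```python
import tensorflow as tf


def link_scores(node_embeddings, candidate_pairs):
    """Probability-like score for each (u, v) pair: sigmoid(<z_u, z_v>)."""
    z_u = tf.gather(node_embeddings, candidate_pairs[:, 0])
    z_v = tf.gather(node_embeddings, candidate_pairs[:, 1])
    return tf.sigmoid(tf.reduce_sum(z_u * z_v, axis=-1))


# Usage: users 0 and 1 have similar embeddings, user 2 does not.
embeddings = tf.constant([[1., 0.], [1., 0.], [0., 1.]])
scores = link_scores(embeddings, tf.constant([[0, 1], [0, 2]]))
print(scores.numpy())  # approximately [0.731 0.5]
```

In training, such scores are typically fitted with binary cross-entropy against observed edges and sampled non-edges (negative sampling).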

How does message passing work in the context of GNNs and TensorFlow?

Message passing is the mechanism by which nodes in a Graph Neural Network (GNN) exchange information and update their representations based on what they receive from neighbouring nodes. In each round, a message is computed along every edge of the graph, typically from the features of the edge’s source node; each node then aggregates its incoming messages (for example, by summing or averaging them) and combines the result with its own state to produce an updated representation. In TensorFlow, these steps map onto standard operations: tf.gather collects source-node features along the edges, and segment reductions such as tf.math.unsorted_segment_sum aggregate the messages per target node, so no custom operations are needed. Stacking k rounds lets each node receive information from nodes up to k hops away, which is how GNN architectures capture the relational structure of graph data.
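The message, aggregate and update steps can be sketched in a few lines with core TensorFlow ops (tf.gather and tf.math.unsorted_segment_sum). The function message_passing_step is hypothetical; it assumes directed (source, target) edges, a linear message function and sum aggregation, and runs two rounds on a three-node path so that information from node 0 visibly reaches node 2 only after the second round.

```python
import tensorflow as tf


def message_passing_step(h, edges, w_msg, w_self):
    """One round of message passing over directed (source, target) edges."""
    src, dst = edges[:, 0], edges[:, 1]
    # 1. Message: transform the source node's features along every edge.
    messages = tf.matmul(tf.gather(h, src), w_msg)
    # 2. Aggregate: sum the incoming messages for each target node.
    aggregated = tf.math.unsorted_segment_sum(messages, dst, num_segments=tf.shape(h)[0])
    # 3. Update: combine each node's own state with its aggregated messages.
    return tf.nn.relu(tf.matmul(h, w_self) + aggregated)


edges = tf.constant([[0, 1], [1, 2]])  # directed path 0 -> 1 -> 2
w = tf.eye(3)   # identity weights keep the arithmetic easy to follow
h0 = tf.eye(3)  # one-hot features: column j marks information from node j
h1 = message_passing_step(h0, edges, w, w)
h2 = message_passing_step(h1, edges, w, w)
print(h1.numpy()[2], h2.numpy()[2])  # [0. 1. 1.] [1. 2. 1.]
```

After one round, node 2 holds information from itself and node 1 only; the non-zero first entry after the second round shows node 0’s information arriving from two hops away.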

What resources or tutorials are recommended for learning to use GNNs in TensorFlow?

For those looking to learn Graph Neural Networks (GNNs) in TensorFlow, there are several good starting points. The official TensorFlow documentation covers the underlying APIs, and the TF-GNN library ships its own guides and example notebooks. Beyond these, online tutorials, community forums and specialised courses offer material for various skill levels. Working through these resources helps learners build a solid understanding of GNN concepts and techniques while gaining practical experience with TensorFlow for graph-based machine learning tasks.

Can GNNs be combined with other neural network architectures within TensorFlow?

In TensorFlow, Graph Neural Networks (GNNs) can indeed be combined with other neural network architectures to create more powerful and versatile models. Because GNN layers written with Keras are ordinary layers operating on tensors, they can be stacked with convolutional neural networks (CNNs), recurrent neural networks (RNNs) or dense layers in the same model. For example, a CNN can encode an image attached to each node, or an RNN a time series, before the GNN propagates those encodings along the graph’s edges. Such hybrid models exploit the complementary strengths of each architecture, leading to richer representations for tasks that mix graph structure with images, text or sequences.
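As a sketch of such a hybrid, the model below assumes each node carries a short time series: a GRU (a type of RNN) encodes each node’s sequence into a vector, one mean-aggregation message-passing step mixes in neighbour information, and a dense layer produces per-node class logits. The class SequenceGraphModel and the data shapes are hypothetical.

```python
import tensorflow as tf


class SequenceGraphModel(tf.keras.Model):
    """Hybrid sketch: an RNN encodes each node's sequence, then one message-passing step."""

    def __init__(self, hidden, num_classes):
        super().__init__()
        self.encoder = tf.keras.layers.GRU(hidden)  # [nodes, time, feat] -> [nodes, hidden]
        self.self_proj = tf.keras.layers.Dense(hidden)
        self.neigh_proj = tf.keras.layers.Dense(hidden, use_bias=False)
        self.head = tf.keras.layers.Dense(num_classes)

    def call(self, sequences, edges):
        h = self.encoder(sequences)
        src, dst = edges[:, 0], edges[:, 1]
        # Mean of neighbour encodings (zero for nodes without incoming edges).
        neigh_mean = tf.math.unsorted_segment_mean(tf.gather(h, src), dst, tf.shape(h)[0])
        h = tf.nn.relu(self.self_proj(h) + self.neigh_proj(neigh_mean))
        return self.head(h)  # per-node class logits


# Usage: 5 nodes, each with a sequence of 7 time steps and 3 features.
model = SequenceGraphModel(hidden=8, num_classes=2)
logits = model(tf.random.normal([5, 7, 3]), tf.constant([[0, 1], [1, 0], [2, 3], [3, 4]]))
print(logits.shape)  # (5, 2)
```

Swapping the GRU for a small CNN over per-node images would follow the same pattern: encode each node independently first, then let the graph layers exchange the encodings.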