Unveiling the Power of Text Analysis in Data Science

Exploring Text Analysis in Data Science

Data science has revolutionized the way we extract insights from data, and text analysis is a crucial part of the field. Text analysis is the process of deriving high-quality information from unstructured text using techniques from natural language processing, statistics, and machine learning.

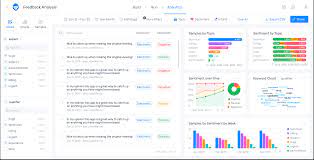

Text analysis in data science encompasses a wide range of tasks, including sentiment analysis, topic modelling, text classification, and more. By applying advanced algorithms and machine learning models to textual data, data scientists can uncover valuable patterns and trends that can inform decision-making processes.

Sentiment analysis, for example, allows businesses to understand customer opinions and feedback by analysing text data from social media, reviews, and surveys. This valuable insight can help companies improve their products and services based on customer preferences and sentiments.

Topic modelling is another powerful text analysis technique that helps identify common themes or topics within a large collection of documents. This is particularly useful for organising large volumes of unstructured text such as news articles, research papers, or customer reviews.

Text classification involves categorising text documents into predefined categories based on their content. This can be applied in various domains such as spam detection in emails, sentiment classification in social media posts, or automatic tagging of news articles.

Overall, text analysis plays a critical role in extracting meaningful insights from textual data. Organisations that apply these techniques well can gain a competitive edge by basing their decisions on evidence drawn from the large volumes of text they already collect.

Essential Tips for Effective Text Analysis in Data Science

- Preprocess text data by removing punctuation and special characters.

- Tokenize the text into words or phrases for analysis.

- Remove stop words like ‘and’, ‘the’, ‘is’ to focus on meaningful words.

- Perform stemming or lemmatization to reduce words to their base form.

- Use techniques like TF-IDF or word embeddings to represent text data numerically.

- Explore different machine learning models such as Naive Bayes or SVM for classification tasks.

- Consider using topic modelling algorithms like LDA for identifying themes in text data.

- Evaluate model performance using metrics like accuracy, precision, recall, and F1-score.

- Visualise results with tools like word clouds, bar charts, and confusion matrices.

Preprocess text data by removing punctuation and special characters.

A fundamental preprocessing step in text analysis is to remove punctuation and special characters. Stripping these symbols reduces noise and shrinks the vocabulary, so that ‘data.’ and ‘data’ map to the same token and algorithms can focus on the core content of the text. This step lays a solid foundation for the rest of the pipeline. For some tasks, however, such as sentiment analysis, punctuation like ‘!’ or emoticons can carry signal and may be worth keeping.
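As a minimal sketch in plain Python, the step can be done with the standard `string` and `re` modules. The function name `remove_punctuation` is illustrative and does not come from a particular library. The function replaces symbols with spaces rather than deleting them, so hyphenated words such as ‘end-to-end’ split cleanly instead of merging into one token.

```python
import re
import string

def remove_punctuation(text):
    """Replace punctuation and special characters with spaces, then tidy whitespace."""
    # Map every ASCII punctuation character to a space, so "end-to-end"
    # becomes "end to end" rather than "endtoend".
    table = str.maketrans(string.punctuation, " " * len(string.punctuation))
    text = text.translate(table)
    # Replace any remaining non-word, non-space symbols (e.g. emoji or "©").
    text = re.sub(r"[^\w\s]", " ", text)
    # Collapse the runs of whitespace left behind and trim the ends.
    return re.sub(r"\s+", " ", text).strip()

print(remove_punctuation("Great product!!! Would buy again :) #happy"))
# Great product Would buy again happy
```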

Tokenize the text into words or phrases for analysis.

Before any analysis, the text should be tokenized. Tokenization breaks the text into individual units, usually words or short phrases (n-grams), which serve as the building blocks for every later step. Stop-word removal, stemming, and numerical representations such as TF-IDF all operate on tokens, so this step lays the foundation for more advanced text analysis techniques and for a deeper understanding of the content and structure of the text.
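A simple regular-expression tokenizer is enough to show the idea. The sketch below assumes lowercase ASCII English text and keeps contractions like ‘doesn't’ as single tokens. The names `tokenize` and `ngrams` are hypothetical. Production pipelines usually rely on a library tokenizer such as NLTK's `word_tokenize` or spaCy, which handle many more edge cases.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens.

    An apostrophe followed by letters stays attached, so "doesn't" is one token.
    """
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())

def ngrams(tokens, n=2):
    """Join each run of n consecutive tokens into a phrase (bigrams by default)."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = tokenize("Text analysis doesn't have to be hard.")
print(tokens)              # ['text', 'analysis', "doesn't", 'have', 'to', 'be', 'hard']
print(ngrams(tokens)[:2])  # ['text analysis', "analysis doesn't"]
```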

Remove stop words like ‘and’, ‘the’, ‘is’ to focus on meaningful words.

In text analysis, a useful tip is to remove stop words such as ‘and’, ‘the’, and ‘is’. These very common words carry little meaning on their own, so dropping them lets data scientists focus on the words that convey important information, and it also reduces the size of the vocabulary. Stop-word lists should be chosen with the task in mind. Negations such as ‘not’ appear on many standard lists but matter a great deal for sentiment analysis.
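The sketch below filters a token list against a small, hand-picked stop-word set. The set is an assumption for illustration only, and it deliberately omits ‘not’ so that negations survive. In practice one usually starts from a library list, for example NLTK's, and adapts it to the task.

```python
# Illustrative stop-word set only; real projects adapt a fuller library list.
STOP_WORDS = frozenset({
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "in",
    "is", "it", "of", "on", "or", "that", "the", "this", "to", "was", "with",
})

def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Keep only tokens that are not stop words (comparison is case-insensitive)."""
    return [t for t in tokens if t.lower() not in stop_words]

tokens = ["the", "battery", "life", "is", "excellent", "and", "the", "screen", "is", "bright"]
print(remove_stop_words(tokens))
# ['battery', 'life', 'excellent', 'screen', 'bright']
```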

Perform stemming or lemmatization to reduce words to their base form.

Stemming and lemmatization are important steps in text analysis. Both reduce different forms of a word to a common base form, which shrinks the vocabulary and can improve the accuracy of downstream analysis. Stemming applies heuristic rules that chop off affixes, mostly suffixes, so the result is not always a real word. For example, the widely used Porter stemmer turns ‘studies’ into ‘studi’. Lemmatization instead uses a vocabulary and morphological analysis to return the dictionary form, or lemma, so ‘studies’ becomes ‘study’. By applying these techniques, data scientists can standardise the text data and improve natural language processing tasks such as sentiment analysis, topic modelling, and text classification.
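A toy comparison makes the difference concrete. `simple_stem` applies a handful of assumed suffix rules and is not the Porter algorithm. `simple_lemmatize` looks words up in a tiny hypothetical dictionary. Real lemmatizers, such as NLTK's `WordNetLemmatizer`, use a full lexicon and part-of-speech information.

```python
# Toy suffix rules, tried in order; an assumption, NOT the Porter algorithm.
SUFFIX_RULES = [("ies", "i"), ("ing", ""), ("ed", ""), ("ly", ""), ("es", ""), ("s", "")]

def simple_stem(word, min_stem=3):
    """Strip the first matching suffix, keeping at least min_stem characters."""
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: len(word) - len(suffix)] + replacement
    return word

# Hypothetical miniature lemma dictionary standing in for a real lexicon.
LEMMAS = {"studies": "study", "studying": "study", "better": "good", "ran": "run", "mice": "mouse"}

def simple_lemmatize(word):
    """Return the dictionary form if known, otherwise the word unchanged."""
    return LEMMAS.get(word, word)

for w in ["studies", "studying", "better", "ran", "quickly"]:
    print(f"{w:10} stem={simple_stem(w):8} lemma={simple_lemmatize(w)}")
```

Here the stemmer turns ‘studies’ into the non-word ‘studi’ and leaves ‘better’ alone. The dictionary lookup maps both ‘studies’ and ‘studying’ to ‘study’ and ‘better’ to ‘good’.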

Use techniques like TF-IDF or word embeddings to represent text data numerically.

Another valuable tip is to use techniques such as TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings to represent text data numerically, since most algorithms operate on numbers rather than raw text. TF-IDF produces sparse vectors in which each word is weighted by how often it appears in a document, discounted by how many documents in the corpus contain it, so words that are common everywhere receive low weights. Word embeddings instead map words to dense vectors in a continuous space where words with similar meanings tend to lie close together. Unlike TF-IDF, embeddings capture semantic relationships between words. These numerical representations enable tasks such as clustering, classification, and information retrieval on text data.
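The sketch below computes TF-IDF weights from scratch in plain Python and assumes the documents are already tokenized. It uses the unsmoothed textbook definition idf = ln(N / df). Library implementations such as scikit-learn's `TfidfVectorizer` apply smoothing and normalisation by default, so their numbers will differ. The function name `tf_idf` is illustrative.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    tf(t, d) = count of t in d / number of tokens in d
    idf(t)   = ln(N / df(t)), where df(t) = number of documents containing t
    """
    n_docs = len(docs)
    # Document frequency: each term counts at most once per document.
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        vectors.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors

docs = [
    ["cheap", "flights", "cheap", "hotels"],
    ["cheap", "phones"],
    ["hotels", "near", "beach"],
]
for vec in tf_idf(docs):
    print({t: round(w, 3) for t, w in vec.items()})
```

In the first document, ‘flights’ (weight about 0.275) outranks ‘cheap’ (about 0.203) even though ‘cheap’ appears twice, because ‘cheap’ also occurs in another document. A term present in every document gets an IDF of ln(1) = 0.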

Explore different machine learning models such as Naive Bayes or SVM for classification tasks.

For classification tasks, it is worth exploring several machine learning models, such as Naive Bayes or Support Vector Machines (SVM). These models take different approaches to assigning text to predefined classes. Naive Bayes is a fast probabilistic model that assumes words are conditionally independent given the class. A linear SVM looks for the maximum-margin boundary between classes and often performs well on high-dimensional, sparse features such as TF-IDF vectors. Comparing several models on the same validation data helps data scientists choose the most accurate and efficient one for the task.
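The class below is a from-scratch sketch of the Naive Bayes side. It implements multinomial Naive Bayes with add-one (Laplace) smoothing on tokenized documents. The class name `NaiveBayesText` and the toy spam/ham data are invented for illustration. For real work, and for the SVM side, library implementations such as scikit-learn's `MultinomialNB` and `LinearSVC` are the usual choice.

```python
import math
from collections import Counter, defaultdict

class NaiveBayesText:
    """Minimal multinomial Naive Bayes with add-one smoothing, using log-probabilities."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.vocab = {t for doc in docs for t in doc}
        n = len(labels)
        # Prior P(c): fraction of training documents labelled c.
        self.log_prior = {c: math.log(labels.count(c) / n) for c in self.classes}
        # Token counts per class.
        self.word_counts = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc)
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_likelihood(self, token, c):
        # log P(token | c); add-one smoothing avoids log(0) for words unseen in class c.
        count = self.word_counts[c][token]
        return math.log((count + 1) / (self.total_words[c] + len(self.vocab)))

    def predict(self, doc):
        # "Naive" independence assumption: sum per-token log-likelihoods.
        # Tokens never seen in training are ignored.
        scores = {
            c: self.log_prior[c]
            + sum(self.log_likelihood(t, c) for t in doc if t in self.vocab)
            for c in self.classes
        }
        return max(scores, key=scores.get)

train_docs = [["win", "cash", "now"], ["cheap", "cash", "offer"],
              ["meeting", "at", "noon"], ["project", "meeting", "notes"]]
train_labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayesText().fit(train_docs, train_labels)
print(model.predict(["cash", "offer", "now"]))       # spam
print(model.predict(["notes", "from", "meeting"]))   # ham
```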

Consider using topic modelling algorithms like LDA for identifying themes in text data.

Topic modelling algorithms such as Latent Dirichlet Allocation (LDA) are well suited to uncovering underlying themes within textual data. LDA is a probabilistic model that treats each document as a mixture of topics and each topic as a probability distribution over words. Fitting it to a corpus reveals groups of words that tend to occur together, which can be read as topics. The number of topics must be chosen in advance, and interpreting the resulting word lists still requires human judgement. This approach is particularly valuable in tasks such as document clustering, information retrieval, and content recommendation.
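Library implementations of LDA exist, for example gensim's `LdaModel` or scikit-learn's `LatentDirichletAllocation`, which uses variational inference. The core idea still fits in a short from-scratch sketch. The hypothetical function `lda_gibbs` below fits LDA with collapsed Gibbs sampling, one standard inference method. `alpha` and `beta` are the symmetric Dirichlet priors, and their default values here are assumptions.

```python
import random

def lda_gibbs(docs, n_topics, n_iter=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA to tokenized documents with collapsed Gibbs sampling.

    Returns (topic_word, doc_topic): topic_word[k][w] estimates P(word w | topic k)
    and doc_topic[d][k] estimates P(topic k | document d).
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), n_topics

    # Count tables: topics per document, words per topic, tokens per topic.
    ndk = [[0] * K for _ in docs]
    nkw = [[0] * V for _ in range(K)]
    nk = [0] * K

    def update(d, v, k, delta):
        ndk[d][k] += delta
        nkw[k][v] += delta
        nk[k] += delta

    # Start from a random topic assignment for every token.
    z = [[rng.randrange(K) for _ in doc] for doc in docs]
    for d, doc in enumerate(docs):
        for i, w in enumerate(doc):
            update(d, word_id[w], z[d][i], +1)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = word_id[w]
                # Remove this token's current assignment from the counts, then
                # resample it from the full conditional:
                # P(z = k | rest) is proportional to (n_dk + alpha)(n_kw + beta) / (n_k + V*beta).
                update(d, v, z[d][i], -1)
                weights = [
                    (ndk[d][k] + alpha) * (nkw[k][v] + beta) / (nk[k] + V * beta)
                    for k in range(K)
                ]
                z[d][i] = rng.choices(range(K), weights=weights)[0]
                update(d, v, z[d][i], +1)

    topic_word = [
        {vocab[v]: (nkw[k][v] + beta) / (nk[k] + V * beta) for v in range(V)}
        for k in range(K)
    ]
    doc_topic = [
        [(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
        for d, doc in enumerate(docs)
    ]
    return topic_word, doc_topic

docs = [
    ["goal", "match", "team", "coach"],
    ["team", "goal", "season", "match"],
    ["stock", "market", "price", "investor"],
    ["price", "stock", "trading", "market"],
]
topic_word, doc_topic = lda_gibbs(docs, n_topics=2, n_iter=500)
for k, dist in enumerate(topic_word):
    top_words = sorted(dist, key=dist.get, reverse=True)[:3]
    print(f"topic {k}: {top_words}")
```

With a corpus this tiny, the recovered topics depend on the random seed. Meaningful topics need far more documents.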

Evaluate model performance using metrics like accuracy, precision, recall, and F1-score.

Model performance should be evaluated using metrics such as accuracy, precision, recall, and F1-score. Accuracy measures the proportion of all predictions that are correct. Precision is the proportion of predicted positive instances that are actually positive. Recall is the proportion of actual positive instances that the model correctly identifies. The F1-score is the harmonic mean of precision and recall, giving a single balanced measure. Looking at all four matters because accuracy alone can be misleading on imbalanced data. If only 5% of emails are spam, a model that never predicts spam still scores 95% accuracy while its recall for spam is zero. By analysing these metrics together, data scientists can fine-tune their models for the trade-off their application requires.
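The metrics are simple enough to compute by hand. This sketch treats one label as the positive class and, as an assumption, returns 0.0 whenever a denominator is zero. The function name `classification_metrics` is illustrative. The example shows a classifier that always predicts ‘ham’ on an imbalanced set, which illustrates why accuracy alone can mislead.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1 for a chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# 8 ham and 2 spam emails; the "model" always answers "ham".
y_true = ["ham"] * 8 + ["spam"] * 2
y_pred = ["ham"] * 10
print(classification_metrics(y_true, y_pred, positive="spam"))
# {'accuracy': 0.8, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```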

Visualise results with tools like word clouds, bar charts, and confusion matrices.

Visualising results makes the patterns and insights extracted from textual data easier to understand and communicate. Word clouds give a quick overview of the most frequent words in a corpus, highlighting key themes or topics. Bar charts compare word frequencies or sentiment scores across categories at a glance. Confusion matrices are essential for evaluating text classification models. They show the true positive, true negative, false positive, and false negative predictions, which reveals the classes the model confuses. Used well, these visualisations help data scientists communicate their results clearly and support informed decisions.
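Plotting libraries such as matplotlib, or the `wordcloud` package for word clouds, would normally draw these charts. To stay dependency-free, the sketch below computes a confusion matrix and prints it alongside a text bar chart of word frequencies. These are the same counts a word cloud or bar chart would display. All function names are illustrative.

```python
from collections import Counter

def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def print_confusion_matrix(matrix, labels):
    """Print the matrix with right-aligned columns and row labels."""
    width = max(len(name) for name in labels) + 2
    print(" " * width + "".join(f"{name:>{width}}" for name in labels))
    for name, row in zip(labels, matrix):
        print(f"{name:<{width}}" + "".join(f"{n:>{width}}" for n in row))

def text_bar_chart(tokens, top_n=5, char="#"):
    """Print one horizontal bar per frequent word, with length equal to its count."""
    for word, count in Counter(tokens).most_common(top_n):
        print(f"{word:<12}{char * count} {count}")

labels = ["ham", "spam"]
y_true = ["spam", "spam", "ham", "ham", "spam"]
y_pred = ["spam", "ham", "ham", "spam", "spam"]
cm = confusion_matrix(y_true, y_pred, labels)
print(cm)  # [[1, 1], [1, 2]]
print_confusion_matrix(cm, labels)
text_bar_chart("great battery great screen poor speaker great price".split(), top_n=3)
```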