Unleashing the Potential of Deep Learning: Exploring the Boundaries of AI

The Power of Deep Learning

Deep learning is a subset of machine learning that has gained significant attention in recent years because it can tackle complex problems, such as recognising objects in images or transcribing speech, where earlier approaches fell short. Its models are loosely inspired by the way neurons in the brain are connected, and they learn from data to make predictions and decisions instead of following explicitly programmed rules.

One of the key strengths of deep learning is its ability to process vast amounts of data and learn meaningful patterns directly from raw inputs such as pixels, audio, or text, with less need for hand-crafted features. This makes it particularly effective in tasks such as image recognition, natural language processing, and speech recognition.

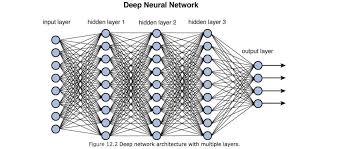

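Neural networks are at the core of deep learning. These networks consist of layers of interconnected nodes (neurons), and each layer transforms the output of the previous one, so later layers can build more abstract features from simpler ones. Training relies on backpropagation, which uses the chain rule to compute how much each parameter contributed to the error, together with an optimiser such as gradient descent, which uses those gradients to adjust the parameters and gradually improve the network's performance.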
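To make the forward pass, the backward pass, and the parameter update concrete, here is a minimal sketch in plain Python (standard library only). It assumes a toy setup chosen purely for illustration: one input, a single hidden layer of tanh units, one linear output, a mean squared error loss, and full-batch gradient descent. The function name and its defaults are hypothetical. Frameworks automate the backward pass with automatic differentiation, but the chain-rule bookkeeping is the same idea.

```python
import math
import random


def train_tiny_network(data, hidden=3, lr=0.05, epochs=1000, seed=0):
    """Fit y ~ f(x) with one tanh hidden layer using hand-written backpropagation.

    `data` is a list of (x, y) pairs of floats. Returns the mean squared error
    measured at each epoch, before that epoch's parameter update.
    """
    rng = random.Random(seed)
    # Parameters: input->hidden weights/biases (w1, b1), hidden->output (w2, b2).
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    n = len(data)
    history = []
    for _ in range(epochs):
        g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        total = 0.0
        for x, y in data:
            # Forward pass: hidden activations, then the network's prediction.
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            y_hat = sum(w2[j] * h[j] for j in range(hidden)) + b2
            err = y_hat - y
            total += err * err
            # Backward pass: apply the chain rule from the loss to every parameter.
            d_out = 2 * err / n  # d(loss)/d(y_hat) for the mean squared error
            g_b2 += d_out
            for j in range(hidden):
                g_w2[j] += d_out * h[j]
                d_z = d_out * w2[j] * (1 - h[j] ** 2)  # tanh'(z) = 1 - tanh(z)^2
                g_w1[j] += d_z * x
                g_b1[j] += d_z
        history.append(total / n)
        # Gradient descent: move each parameter a small step against its gradient.
        for j in range(hidden):
            w1[j] -= lr * g_w1[j]
            b1[j] -= lr * g_b1[j]
            w2[j] -= lr * g_w2[j]
        b2 -= lr * g_b2
    return history
```

Calling `train_tiny_network([(x, x * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)])` returns the loss at each epoch, which should fall as the parameters are repeatedly updated.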

Deep learning has transformed work in many industries, including healthcare, finance, and marketing. In healthcare, deep learning models are used to help detect diseases in medical images with high accuracy. In finance, they are used for fraud detection and risk assessment.

Despite its impressive capabilities, deep learning also faces challenges: it typically needs large amounts of labelled data and substantial computational resources, and it is often difficult to interpret why a model made a particular prediction. Researchers are continuously working on these challenges to further improve what deep learning models can do.

In conclusion, deep learning is a powerful tool that has the potential to transform industries and drive innovation across various domains. As technology continues to advance, we can expect deep learning to play an increasingly important role in shaping the future.

Eight Essential Tips for Mastering Deep Learning Techniques

- Start with a good understanding of the basics of machine learning

- Choose the right deep learning framework for your project

- Preprocess and clean your data effectively before training your model

- Experiment with different network architectures to find the best one for your task

- Regularise your model to prevent overfitting

- Monitor and fine-tune hyperparameters regularly for optimal performance

- Use transfer learning when applicable to leverage pre-trained models

- Stay updated with the latest research and advancements in deep learning

Start with a good understanding of the basics of machine learning

Before diving into deep learning, build a solid foundation in the fundamentals of machine learning. Core concepts such as common learning algorithms, how models are trained and evaluated, and how data is preprocessed carry over directly to deep neural networks. Understanding them makes the more complex material much easier to follow and gives you the knowledge you need to diagnose problems and innovate within this dynamic field.
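To make "training and evaluating a model" concrete, here is a minimal sketch in plain Python (standard library only). It holds out part of the data, fits a straight line by ordinary least squares on the rest, and measures the error on the held-out part. The function names are illustrative and not taken from any library.

```python
import random


def train_test_split(pairs, test_fraction=0.25, seed=0):
    """Shuffle (x, y) pairs and hold out a fraction of them for evaluation."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def fit_line(pairs):
    """Train y = a * x + b by ordinary least squares (closed-form solution)."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    a = cov / var  # requires at least two distinct x values
    return a, mean_y - a * mean_x


def mean_squared_error(model, pairs):
    """Average squared difference between predictions and targets."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in pairs) / len(pairs)
```

Reporting the error on the held-out `test` pairs rather than on the training pairs gives an honest estimate of how the model will perform on new data; the same principle applies unchanged to deep networks.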

Choose the right deep learning framework for your project

Selecting an appropriate deep learning framework, such as PyTorch, TensorFlow, or JAX, affects how quickly you can build, debug, and deploy your project. Frameworks differ in flexibility, performance, and ease of use, so evaluate your project requirements carefully before deciding. Consider factors such as community support and documentation, compatibility with your existing infrastructure, and the specific features each framework offers, and choose the one that best fits your project's goals.

Preprocess and clean your data effectively before training your model

Before training your model, preprocess and clean your data carefully. Preprocessing puts the data into a form the model can learn from: handling missing values, scaling numerical features to comparable ranges (for example by normalising or standardising them), and encoding categorical variables as numbers. Cleaning removes problems that would otherwise mislead the model, such as data-entry errors, inconsistent formats, and outliers that do not reflect genuine cases. Well-prepared, consistent data usually helps training converge faster and leads to more accurate and reliable results.
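The three preprocessing steps named above can be sketched in plain Python (standard library only, Python 3.8+ for `statistics.fmean`). The specific choices are assumptions made for illustration: missing values are filled with the column mean, numerical features are standardised to zero mean and unit variance, and categories are one-hot encoded. The scaling statistics are computed by a separate function so they can be fitted on the training set and reused unchanged on validation and test data, which avoids leaking information from the evaluation data.

```python
import statistics


def impute_mean(column):
    """Replace missing values (None) with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in column]


def fit_scaler(train_column):
    """Compute the mean and population standard deviation on training data only."""
    mean = statistics.fmean(train_column)
    std = statistics.pstdev(train_column)
    if std == 0:
        std = 1.0  # constant column: avoid dividing by zero
    return mean, std


def standardise(column, mean, std):
    """Rescale values to zero mean and unit variance using fitted statistics."""
    return [(v - mean) / std for v in column]


def one_hot(column):
    """Encode each category as a 0/1 indicator vector (categories sorted)."""
    categories = sorted(set(column))
    index = {c: i for i, c in enumerate(categories)}
    vectors = [[1 if index[v] == i else 0 for i in range(len(categories))]
               for v in column]
    return categories, vectors
```

For example, `one_hot(["red", "green", "red"])` returns `(["green", "red"], [[0, 1], [1, 0], [0, 1]])`.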

Experiment with different network architectures to find the best one for your task

Experiment with different network architectures to find the one that works best for your task. Different tasks tend to favour different structures: convolutional networks are a common choice for images, while recurrent networks and transformers are widely used for sequential data such as text. Compare candidate architectures on the same held-out validation data, and weigh their accuracy against model size and training cost.
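One way to keep architecture experiments systematic is to describe each candidate, score every one on the same validation data, and keep the results. The sketch below assumes fully connected networks described as lists of layer widths; `evaluate` is a hypothetical function you would supply to train a candidate and return its validation loss. Breaking ties in favour of the network with fewer parameters is one reasonable choice among several.

```python
from typing import Callable, List, Sequence, Tuple


def count_parameters(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network, e.g. [4, 16, 1]."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def select_architecture(
    candidates: Sequence[List[int]],
    evaluate: Callable[[List[int]], float],
) -> Tuple[List[int], List[Tuple[float, int, List[int]]]]:
    """Return the candidate with the lowest validation loss, plus all results.

    `evaluate` (supplied by you) trains a network with the given layer sizes
    and returns its validation loss. Ties go to the smaller network.
    """
    results = [(evaluate(sizes), count_parameters(sizes), sizes)
               for sizes in candidates]
    best = min(results, key=lambda r: (r[0], r[1]))
    return best[2], results
```

For example, `select_architecture([[4, 16, 1], [4, 8, 8, 1]], evaluate=my_train_and_validate)` would compare a wide single-hidden-layer network (97 parameters) with a deeper, narrower one (121 parameters), where `my_train_and_validate` is again a placeholder for your own training routine.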

Regularise your model to prevent overfitting

Regularising your model is an important step in preventing overfitting. Overfitting occurs when a model learns the details and noise in the training data so closely that its performance on unseen data suffers. Regularisation techniques such as L1 or L2 regularisation (which penalise large weights), dropout (which randomly disables units during training), and early stopping (which halts training once validation performance stops improving) help a model generalise better and make more accurate predictions on new data. They encourage the model to learn relevant patterns rather than memorise the training data, leading to more robust and reliable deep learning models.
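The regularisation techniques named above can each be sketched in a few lines of plain Python. This is an illustrative sketch rather than any framework's implementation, and the names and defaults are assumptions: `l2_penalty` and `l2_gradient` show the L2 term and the extra gradient it adds (L1 would instead sum absolute values), `dropout` uses the common "inverted dropout" formulation that rescales surviving activations during training, and `EarlyStopping` signals a stop once the validation loss has not improved for a given number of epochs.

```python
import random


def l2_penalty(weights, lam):
    """L2 term added to the training loss: lam * sum(w^2)."""
    return lam * sum(w * w for w in weights)


def l2_gradient(weights, lam):
    """Extra gradient from the L2 term; it pulls every weight towards zero."""
    return [2 * lam * w for w in weights]


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p (0 <= p < 1) during
    training and scale survivors by 1 / (1 - p), so inference needs no rescaling."""
    if not training or p == 0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


class EarlyStopping:
    """Report when validation loss has not improved for `patience` epochs in a row."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

With `patience=3`, feeding the validation losses 1.0, 0.8, 0.85, 0.9, 0.95 returns `True` only on the fifth call, after three epochs without improvement. In practice you would also keep a copy of the weights from the best epoch and restore them when stopping.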

Monitor and fine-tune hyperparameters regularly for optimal performance

Hyperparameters are settings chosen before training rather than learned from the data, such as the learning rate, batch size, number of layers, and regularisation strength. They strongly influence how quickly a model converges and how accurate it becomes: a learning rate that is too small makes training slow, while one that is too large can make it unstable or cause it to diverge. Monitor training and validation metrics, adjust hyperparameters based on what you observe, and compare settings systematically, for example with grid search or random search. This iterative cycle of monitoring and adjustment is essential for getting the best results from deep learning models.
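To show how strongly a single hyperparameter can affect convergence, the sketch below runs a grid search over learning rates on a deliberately tiny problem: gradient descent on the one-parameter loss (w - 3)^2. This toy objective stands in for a real model, whose score would normally be its validation loss after training with each setting; the function names, the step count, and the grid of values are illustrative assumptions.

```python
def final_loss(lr, steps=20, w0=0.0, target=3.0):
    """Run gradient descent on the toy loss (w - target)^2; return the final loss."""
    w = w0
    for _ in range(steps):
        grad = 2 * (w - target)  # derivative of (w - target)^2
        w -= lr * grad
    return (w - target) ** 2


def grid_search(learning_rates, **kwargs):
    """Score every candidate learning rate and return the best one plus all scores."""
    scores = {lr: final_loss(lr, **kwargs) for lr in learning_rates}
    best = min(scores, key=scores.get)
    return best, scores
```

On this objective a learning rate of 0.001 barely moves the parameter in 20 steps, 0.5 reaches the minimum in a single step, and 1.1 overshoots further on every step and diverges, so `grid_search([0.001, 0.01, 0.1, 0.5, 1.1])` selects 0.5. For real models the best value is not known in advance, which is exactly why it has to be searched for.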

Use transfer learning when applicable to leverage pre-trained models

Where possible, use transfer learning to take advantage of pre-trained models. Transfer learning reuses knowledge gained from solving one problem to solve a different but related one. A model pre-trained on a large dataset, such as an image classifier trained on ImageNet, has already learned general-purpose features, so you can keep most of its layers and train only a new output layer, or fine-tune a few of the top layers, on your own and often much smaller dataset. This typically shortens training considerably, saves computational resources, and can improve accuracy when labelled data is scarce.
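The core mechanic of reusing a pre-trained model as a frozen feature extractor can be sketched in plain Python. The "pre-trained" weights below are hypothetical placeholders written by hand; in practice they would be loaded from a model trained on a large dataset. Only the new output layer (the head) is trained, here with stochastic gradient descent on squared error, while the pre-trained weights are never updated. In PyTorch the same freezing is usually done by setting `requires_grad = False` on the pre-trained parameters, and in Keras by setting a layer's `trainable` attribute to `False`.

```python
import math

# Hypothetical placeholder for weights learned on a large source task. In a real
# project these would be loaded from a pre-trained checkpoint, not typed in.
PRETRAINED_W = [[0.8, -0.5], [0.3, 0.9], [-0.6, 0.4]]


def features(x):
    """Frozen feature extractor: one tanh layer whose weights are never updated."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def predict(head, bias, x):
    """New task-specific output layer applied on top of the frozen features."""
    return sum(h * f for h, f in zip(head, features(x))) + bias


def train_head(data, lr=0.1, epochs=200):
    """Train only the new head with stochastic gradient descent on squared error."""
    head = [0.0] * len(PRETRAINED_W)
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            f = features(x)
            err = sum(h * fi for h, fi in zip(head, f)) + bias - y
            # Gradient of 0.5 * err^2 w.r.t. each head weight is err * feature.
            # No update is applied to PRETRAINED_W: those layers stay frozen.
            head = [h - lr * err * fi for h, fi in zip(head, f)]
            bias -= lr * err
    return head, bias
```

Because only the small head is trained, each update is cheap and needs far fewer labelled examples than training the whole network from scratch.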

Stay updated with the latest research and advancements in deep learning

Deep learning is evolving rapidly, with new algorithms, techniques, and applications appearing constantly. Following recent research, for example conference papers, preprints, and the release notes of the frameworks you use, helps you adopt better tools and methods as they mature and adapt your projects accordingly. Continuous learning helps ensure that your work builds on the most effective approaches available rather than on outdated ones.