NVIDIA Machine Learning: Transforming the Future of Technology

NVIDIA Machine Learning: Revolutionising AI

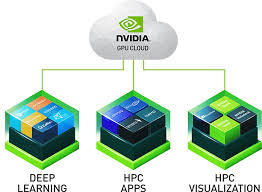

In recent years, NVIDIA has emerged as a pivotal player in the field of machine learning and artificial intelligence (AI). Known primarily for its graphics processing units (GPUs), NVIDIA has leveraged its expertise to make significant advancements in machine learning, transforming industries and driving innovation.

The Power of GPUs in Machine Learning

Machine learning algorithms require immense computational power to process large datasets and perform complex calculations. Traditional central processing units (CPUs) often struggle with these demands, leading to slower processing times. This is where NVIDIA’s GPUs come into play.

GPUs are designed to handle parallel processing tasks efficiently, making them ideal for the intensive computations required in machine learning. By utilising thousands of cores, GPUs can perform multiple calculations simultaneously, significantly accelerating the training and inference processes of machine learning models.

NVIDIA CUDA: A Game Changer

A key innovation from NVIDIA is the Compute Unified Device Architecture (CUDA). CUDA is a parallel computing platform and programming model that allows developers to harness the power of NVIDIA GPUs for general-purpose processing. With CUDA, developers can write software that performs complex mathematical operations at high speeds, making it an essential tool for machine learning applications.
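To make the programming model more concrete, here is a minimal sketch in plain Python that imitates how a CUDA kernel is organised: the same function runs once per thread, and each thread uses its block and thread indices to pick the element it works on. This is a sequential CPU emulation for illustration only, not CUDA code. The names `vector_add_kernel`, `launch`, and `vector_add` are hypothetical helpers, and on a real GPU the invocations would run in parallel rather than in a loop.

```python
import math


def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Body executed by one (emulated) thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global index, as in CUDA kernels
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Emulate a kernel launch by visiting every (block, thread) pair in turn.

    On a GPU these invocations run concurrently; here they run one by one.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


def vector_add(a, b, block_dim=4):
    """Add two equal-length lists using the emulated launch (hypothetical helper)."""
    n = len(a)
    out = [0.0] * n
    grid_dim = math.ceil(n / block_dim)  # enough blocks to cover all n elements
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    print(vector_add([1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0]))
```

The bounds check inside the kernel mirrors a common CUDA idiom: the grid is rounded up to a whole number of blocks, so some threads in the last block have no element to process.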

NVIDIA Tensor Cores: Boosting Performance

To further enhance the capabilities of its GPUs, NVIDIA introduced Tensor Cores in its Volta architecture. Tensor Cores are specialised hardware units designed specifically for deep learning tasks. They accelerate matrix multiply-accumulate operations, a fundamental building block of neural networks, typically using reduced-precision inputs such as FP16. This provides significant performance improvements over running the same operations on the GPU's general-purpose cores.

With Tensor Cores, researchers and engineers can train more sophisticated models faster than ever before. This breakthrough has been instrumental in advancing state-of-the-art AI applications such as natural language processing, computer vision, and autonomous systems.
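The operation that Tensor Cores accelerate can be sketched in plain Python as a matrix multiply-accumulate, D = A × B + C, in which the inputs A and B are rounded to half precision (FP16) while the running sum is kept at higher precision. This is only a numerical illustration under stated assumptions: it runs on the CPU, the helper names `to_fp16` and `mma` are hypothetical, and Python's double-precision floats stand in for the hardware's wider accumulator.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma(a, b, c):
    """Return a @ b + c with FP16-rounded inputs and wider accumulation.

    a is n x k, b is k x m, c is n x m (lists of lists of floats).
    """
    n, k, m = len(a), len(b), len(b[0])
    # Inputs are rounded to FP16, as with reduced-precision Tensor Core inputs.
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [row[:] for row in c]
    for i in range(n):
        for j in range(m):
            acc = c[i][j]  # accumulator starts from C and is not rounded to FP16
            for p in range(k):
                acc += a16[i][p] * b16[p][j]
            d[i][j] = acc
    return d


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0, 2.0], [3.0, 4.0]]
    c = [[0.5, 0.5], [0.5, 0.5]]
    print(mma(identity, b, c))
    print(to_fp16(0.1))  # shows the rounding error introduced by FP16 inputs
```

Keeping the accumulator at higher precision than the inputs is the design choice that lets reduced-precision arithmetic stay accurate enough for training: rounding happens once per input rather than at every addition.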

NVIDIA Deep Learning SDKs

NVIDIA offers a comprehensive suite of software development kits (SDKs) tailored for deep learning and machine learning applications. These SDKs provide developers with pre-optimised libraries and tools to streamline the development process and maximise performance on NVIDIA hardware.

- cuDNN: A GPU-accelerated library for deep neural networks that provides highly optimised implementations of standard routines such as forward and backward convolution, pooling, normalisation, and activation layers.

- TensorRT: A high-performance deep learning inference optimiser and runtime that enables real-time AI applications by maximising throughput and minimising latency.

- DIGITS: An interactive deep learning training system that simplifies data preparation, model training, and visualisation tasks.
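As an illustration of the kind of routine these libraries optimise, the sketch below implements the forward pass of a 2D convolution in plain Python (strictly a cross-correlation, which is what deep learning frameworks conventionally call convolution). It is not the cuDNN API and makes no attempt at performance. The function name `conv2d_forward` is hypothetical, and the example assumes a single channel, no padding, and a stride of 1.

```python
def conv2d_forward(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation of image with kernel.

    image and kernel are lists of lists of numbers; the kernel must not be
    larger than the image in either dimension.
    """
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = ih - kh + 1, iw - kw + 1  # output size for a "valid" convolution
    out = [[0.0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            total = 0.0
            # Slide the kernel over the window whose top-left corner is (y, x).
            for dy in range(kh):
                for dx in range(kw):
                    total += image[y + dy][x + dx] * kernel[dy][dx]
            out[y][x] = total
    return out


if __name__ == "__main__":
    image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    kernel = [[1, 0], [0, 1]]
    print(conv2d_forward(image, kernel))
```

Every output element is computed independently of the others, which is why this workload maps well onto the parallel hardware described earlier.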

Applications Across Industries

The impact of NVIDIA’s machine learning technology extends across various industries:

- Healthcare: Accelerating medical research with faster data analysis and improving diagnostic accuracy through advanced imaging techniques.

- Automotive: Enabling autonomous driving by providing real-time object detection and decision-making capabilities.

- Finance: Enhancing fraud detection systems and optimising trading strategies through predictive analytics.

- Agriculture: Improving crop yield predictions and automating farming processes using computer vision technologies.

The Future of Machine Learning with NVIDIA

NVIDIA continues to push the boundaries of what is possible with machine learning. The company’s sustained investment in both hardware and software has kept its products at the forefront of AI research and development. As new challenges arise in fields such as natural language understanding, robotics, and personalised medicine, NVIDIA’s solutions are likely to play a significant role in addressing them.

The collaboration between researchers, developers, and industry leaders facilitated by NVIDIA’s ecosystem fosters an environment where groundbreaking advancements are made every day. As we look towards the future, it is clear that NVIDIA will remain a driving force behind the evolution of machine learning technology.

Top 5 Advantages of NVIDIA Machine Learning Technology

- NVIDIA GPUs offer exceptional parallel processing power, accelerating machine learning tasks.

- CUDA technology enables developers to harness the full potential of NVIDIA GPUs for general-purpose computing.

- Tensor Cores in NVIDIA GPUs significantly boost performance for deep learning tasks.

- NVIDIA provides a range of deep learning SDKs that streamline development and maximise performance on its hardware.

- NVIDIA’s machine learning technology has diverse applications across industries, from healthcare to finance.

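Challenges of NVIDIA Machine Learning: Cost, Power Consumption, and Complexity

- NVIDIA GPUs are expensive to purchase and maintain, which can be a barrier for smaller budgets.

- High-performance GPUs consume considerable power, raising electricity costs and environmental concerns.

- Using CUDA and the SDKs effectively involves a steep learning curve.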

NVIDIA GPUs offer exceptional parallel processing power, accelerating machine learning tasks.

NVIDIA GPUs provide exceptional parallel processing power, which significantly accelerates machine learning tasks. By leveraging thousands of cores to perform multiple calculations simultaneously, these GPUs can handle the intensive computational demands of machine learning algorithms far more efficiently than traditional CPUs. This capability not only speeds up the training and inference processes but also enables the development of more complex and sophisticated models. Consequently, researchers and developers can achieve faster results, iterate more quickly on their designs, and ultimately push the boundaries of what is possible in artificial intelligence and machine learning applications.

CUDA technology enables developers to harness the full potential of NVIDIA GPUs for general-purpose computing.

One of the key advantages of NVIDIA’s machine learning technology is CUDA, which allows developers to use NVIDIA GPUs for general-purpose computing. By leveraging CUDA, developers can tap into the parallel processing capabilities of NVIDIA GPUs, enabling them to perform complex calculations and process large datasets efficiently. This not only accelerates the training and inference processes of machine learning models but also opens up new possibilities for harnessing the computational power of GPUs in a wide range of applications beyond graphics processing.

Tensor Cores in NVIDIA GPUs significantly boost performance for deep learning tasks.

Tensor Cores in NVIDIA GPUs significantly enhance performance for deep learning tasks by accelerating the computational processes involved in neural network training and inference. These specialised hardware units are designed to handle the complex matrix multiplications that are fundamental to deep learning algorithms, enabling faster and more efficient processing. As a result, Tensor Cores allow researchers and developers to train larger, more sophisticated models in less time, thereby expediting the development of cutting-edge AI applications. This performance boost is crucial for real-time tasks such as image recognition, natural language processing, and autonomous driving, where speed and accuracy are paramount.

NVIDIA provides a range of deep learning SDKs that streamline development and maximise performance on its hardware.

NVIDIA offers a diverse selection of deep learning software development kits (SDKs) that are tailored to simplify the development process and optimise performance on its hardware. These SDKs, including cuDNN, TensorRT, and DIGITS, provide developers with pre-optimised libraries and tools that enhance the efficiency of deep learning applications. By leveraging NVIDIA’s SDKs, developers can accelerate their projects, improve model training processes, and achieve superior performance on NVIDIA GPUs, ultimately driving innovation in the field of machine learning.

NVIDIA’s machine learning technology has diverse applications across industries, from healthcare to finance.

NVIDIA’s machine learning technology boasts a wide array of applications across various industries, significantly enhancing efficiency and innovation. In healthcare, it accelerates medical research by enabling faster data analysis and improving diagnostic accuracy through advanced imaging techniques. The finance sector benefits from NVIDIA’s technology by optimising trading strategies and bolstering fraud detection systems with predictive analytics. Moreover, in the automotive industry, it plays a crucial role in enabling autonomous driving with real-time object detection and decision-making capabilities. From agriculture to personalised medicine, NVIDIA’s machine learning solutions are revolutionising traditional processes, driving progress, and opening new avenues for technological advancements across diverse fields.

Cost

One significant drawback of NVIDIA machine learning is the cost of its GPUs. While these GPUs are undeniably powerful and highly efficient for machine learning tasks, their high price can be a considerable barrier. Purchasing and maintaining NVIDIA GPUs can be prohibitively expensive, particularly for individuals or organisations operating on limited budgets. This financial hurdle may prevent smaller entities from accessing the advanced computational capabilities required for cutting-edge AI research and development, potentially stifling innovation and widening the gap between well-funded institutions and those with fewer resources.

Power Consumption

One notable drawback of NVIDIA machine learning technology is its high power consumption. While the impressive performance of NVIDIA GPUs enables faster processing and training of machine learning models, it also results in increased energy usage. This heightened power consumption can translate to higher electricity bills for users and raise environmental concerns due to the greater carbon footprint associated with running energy-intensive hardware. Finding a balance between performance and energy efficiency is crucial in mitigating the impact of power consumption in NVIDIA machine learning applications.

Complexity

One significant drawback of utilising NVIDIA hardware for machine learning is the inherent complexity involved. Harnessing the power of NVIDIA GPUs requires a certain level of technical proficiency and a solid understanding of tools such as CUDA and NVIDIA’s SDKs. This steep learning curve can present a considerable challenge for beginners in the field, requiring them to invest significant time and effort to grasp the intricacies of these technologies. As a result, the complexity associated with leveraging NVIDIA hardware may act as a barrier for individuals looking to explore machine learning without prior experience or expertise in GPU programming.