Unleashing the Potential of a Convolutional Neural Network

The Power of Convolutional Neural Networks

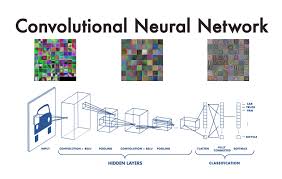

Convolutional Neural Networks (CNNs) have reshaped artificial intelligence and machine learning, particularly in computer vision. These deep neural networks perform strongly on tasks such as image classification and object detection.

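At the core of a Convolutional Neural Network are convolutional layers, which slide small learnable filters (kernels) across the input and compute a weighted sum at each position. Because the filter weights are learned during training, the network discovers useful features directly from raw data rather than relying on hand-crafted ones. The design is loosely inspired by the local receptive fields of the visual cortex, which helps explain why CNNs are so effective at analysing visual data.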
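To make the filtering step concrete, here is a minimal sketch in plain Python, assuming a single-channel image, no padding, and a stride of 1. The function name `conv2d`, the toy image, and the hand-picked edge filter are illustrative assumptions; in a real CNN the filter values are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (what deep learning calls convolution).

    Assumes a 2D list of numbers, no padding, and a stride of 1.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the patch whose top-left corner is (i, j).
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is non-zero only in the column where the dark-to-bright edge sits, which is the sense in which a filter "detects" a feature.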

One of the key strengths of CNNs is their ability to capture spatial hierarchies in data. Through multiple layers of convolutions, pooling, and non-linear activation functions, CNNs can extract increasingly complex features from images. This hierarchical feature learning enables CNNs to identify patterns and objects within images with remarkable accuracy.

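Moreover, convolutional layers are translation-equivariant: because the same filter is applied at every position, shifting the input shifts the feature maps by the same amount. Combined with pooling, this makes CNNs approximately invariant to small shifts, so they can recognise an object wherever it appears in an image. They are not inherently invariant to rotation or scale, however; robustness to changes in orientation and size usually has to come from data augmentation or sufficiently varied training data.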
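How a CNN responds to a change in position can be checked on a toy example. The sketch below uses a one-dimensional signal for brevity (an assumption; images are two-dimensional, but the argument is the same). It shows that the convolution response moves with the pattern, while a global maximum over the response stays the same. The names `conv1d`, `signal`, and `shifted` are illustrative.

```python
def conv1d(signal, kernel):
    """Valid 1D cross-correlation with stride 1 and no padding."""
    k = len(kernel)
    return [
        sum(signal[i + d] * kernel[d] for d in range(k))
        for i in range(len(signal) - k + 1)
    ]


kernel = [-1, 1]                # responds to a rising step
signal = [0, 0, 1, 0, 0, 0]     # a single spike at index 2
shifted = [0, 0, 0, 0, 1, 0]    # the same spike moved two steps right

response = conv1d(signal, kernel)
shifted_response = conv1d(shifted, kernel)

# The response pattern moves by the same two steps as the input.
print(response)
print(shifted_response)

# Taking the maximum over all positions discards the location entirely.
print(max(response) == max(shifted_response))
```

Note that this says nothing about rotation or scaling: a rotated or resized pattern would generally produce a different response to the same filter.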

With their impressive performance in tasks such as image classification competitions and object recognition challenges, Convolutional Neural Networks have become indispensable tools in various fields, including healthcare (medical image analysis), autonomous vehicles (object detection), security (surveillance systems), and more.

As researchers continue to explore the capabilities of CNNs and improve their architectures through advancements like residual connections and attention mechanisms, we can expect even greater breakthroughs in computer vision and beyond. The power of Convolutional Neural Networks lies not only in their ability to understand images but also in their potential to transform how we interact with technology and perceive the world around us.

7 Essential Tips for Optimising Convolutional Neural Networks

- Start with a simple architecture before increasing complexity.

- Use pooling layers to reduce spatial dimensions and control overfitting.

- Experiment with different activation functions like ReLU or sigmoid.

- Regularise your model using techniques like dropout or L2 regularisation.

- Consider using pre-trained models for transfer learning on similar tasks.

- Monitor training with metrics like accuracy and loss to track progress.

- Fine-tune hyperparameters such as learning rate and batch size for optimal performance.

Start with a simple architecture before increasing complexity.

When starting out with Convolutional Neural Networks, begin with a simple architecture, for example a couple of convolutional layers followed by pooling and a single dense classifier, and add complexity only when the results call for it. A small model trains quickly, makes it easier to see how each component affects the outcome, and gives you a baseline against which to judge every later change. It is also far easier to troubleshoot: when a simple model fails to learn, the cause is usually in the data pipeline or the training setup rather than in the architecture. Once the baseline works, introduce more complex elements one at a time and keep only those that improve performance on validation data.

Use pooling layers to reduce spatial dimensions and control overfitting.

Pooling layers reduce the spatial dimensions of feature maps by summarising each small neighbourhood of values with a single number, most commonly the maximum (max pooling) or the mean (average pooling). Down-sampling in this way cuts the computation and the number of parameters needed in later layers, and makes the representation less sensitive to small shifts in the input. Both effects can help limit overfitting, although pooling is not a substitute for explicit regularisation. Pooling also discards fine spatial detail, so how aggressively to use it depends on the task.
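As an illustration of the down-sampling described above, here is a minimal max-pooling sketch in plain Python. It assumes non-overlapping square windows (stride equal to the window size); the function name `max_pool2d` and the example values are made up for the demonstration.

```python
def max_pool2d(feature_map, size=2):
    """Max pooling with non-overlapping size x size windows.

    Assumes a 2D list; rows or columns that do not fill a complete
    window are dropped.
    """
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool2d(feature_map)
for row in pooled:
    print(row)
```

A 4x4 feature map becomes 2x2, so every later layer has a quarter as many spatial positions to process.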

Experiment with different activation functions like ReLU or sigmoid.

When working with a Convolutional Neural Network, it is worth experimenting with different activation functions. Activation functions introduce the non-linearity that lets a network learn complex patterns; without them, a stack of layers would collapse into a single linear transformation. ReLU (Rectified Linear Unit) outputs max(0, x). It is cheap to compute, and its gradient does not shrink for positive inputs, which mitigates the vanishing gradient problem and makes it a common default for hidden layers. The sigmoid function squashes its input into the range (0, 1) and saturates for large positive or negative inputs, so it is rarely used in the hidden layers of deep networks but remains a standard choice for the output layer in binary classification. Comparing activation functions on validation data shows which works best for your model.
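The difference between the two functions is easiest to see by looking at their gradients. The following sketch defines both with the standard library only; the helper names (`relu_grad`, `sigmoid_grad`) and the sample inputs are chosen purely for illustration.

```python
import math


def relu(x):
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)


def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x):
    """Logistic sigmoid: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Gradient of the sigmoid: s * (1 - s), at most 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)


# Moderate inputs only; this naive sigmoid overflows for very negative x.
for x in (-5.0, 0.5, 5.0):
    print(
        f"x={x:+.1f}  relu={relu(x):.3f}  relu'={relu_grad(x):.1f}  "
        f"sigmoid={sigmoid(x):.3f}  sigmoid'={sigmoid_grad(x):.4f}"
    )
```

For large positive inputs the sigmoid gradient is close to zero while the ReLU gradient stays at 1, which is why gradients fade more quickly through many stacked sigmoid layers.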

Regularise your model using techniques like dropout or L2 regularisation.

To improve how well your Convolutional Neural Network generalises, incorporate regularisation techniques such as dropout or L2 regularisation. Dropout randomly sets a fraction of activations to zero at each training step, which stops the network from relying on any single neuron and encourages it to learn more robust features; at inference time, dropout is switched off. L2 regularisation, often implemented as weight decay, adds a penalty proportional to the sum of squared weights to the loss, which discourages large weights and promotes smoother decision boundaries. Both methods trade a little training accuracy for better performance on unseen data, so tune their strength against a validation set.
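Both techniques are short to write down. The sketch below is a plain-Python illustration, assuming the common "inverted dropout" formulation (surviving activations are scaled up during training so nothing needs rescaling at inference). The function names, the seed, and the sample values are illustrative assumptions, not taken from any particular library.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate`
    and scale the survivors by 1 / (1 - rate). A no-op at inference."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_penalty(weights, strength):
    """L2 penalty term added to the loss: strength * sum of squared weights."""
    return strength * sum(w * w for w in weights)


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 8

dropped = dropout(activations, rate=0.5, rng=rng)
unchanged = dropout(activations, rate=0.5, rng=rng, training=False)
penalty = l2_penalty([0.5, -1.0, 2.0], strength=0.01)

print(dropped)    # each entry is either 0.0 (dropped) or 2.0 (kept, rescaled)
print(unchanged)  # identical to the input when training=False
print(penalty)
```

The penalty grows with the square of each weight, so a single large weight costs far more than several small ones.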

Consider using pre-trained models for transfer learning on similar tasks.

When working with Convolutional Neural Networks, consider using pre-trained models for transfer learning. A pre-trained model has already been trained on a large dataset, typically for image classification, and its early layers have learned general-purpose features such as edges and textures. By reusing those layers and fine-tuning the model on your own task or dataset, you can considerably reduce training time and resource requirements while still achieving good performance. Transfer learning works best when the new task resembles the original one; the more the two domains differ, the more of the network you may need to retrain.
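A common way to fine-tune is to freeze the pre-trained layers and update only a newly added output layer. The sketch below illustrates that idea in plain Python with made-up numbers: `backbone` stands in for pre-trained weights and `head` for the new task-specific layer. The function `sgd_step`, the parameter names, and all values are hypothetical; deep learning frameworks provide their own mechanisms for freezing layers.

```python
def sgd_step(params, grads, learning_rate, trainable):
    """One gradient-descent update that only changes the parameter
    groups listed in `trainable`; all other groups are left frozen."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [
                v - learning_rate * g for v, g in zip(values, grads[name])
            ]
        else:
            updated[name] = list(values)  # frozen: copied unchanged
    return updated


# Hypothetical weights: a "pre-trained" backbone and a freshly added head.
params = {"backbone": [0.8, -0.3, 0.5], "head": [0.0, 0.0]}
# Made-up gradients for a single training step.
grads = {"backbone": [0.1, 0.1, 0.1], "head": [0.5, -0.5]}

fine_tuned = sgd_step(params, grads, learning_rate=0.1, trainable={"head"})

print(fine_tuned["backbone"])  # unchanged
print(fine_tuned["head"])      # moved against the gradient
```

Passing `trainable={"backbone", "head"}` instead would update the whole model, which is closer to full fine-tuning and is usually done with a smaller learning rate.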

Monitor training with metrics like accuracy and loss to track progress.

Monitoring training is essential for understanding whether a Convolutional Neural Network is actually learning. The two most common metrics are loss and accuracy. Loss is the value of the objective function that the optimiser minimises, such as cross-entropy for classification; accuracy is the fraction of examples the model classifies correctly. Track both on the training set and on a held-out validation set: if training loss keeps falling while validation loss rises, the model is overfitting. These curves inform decisions such as when to stop training, whether to add regularisation, and how to adjust hyperparameters.
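The two metrics can be computed in a few lines. This sketch assumes a classifier that outputs one probability per class and uses cross-entropy as the loss, which is a common choice for classification but not the only one. The function names and the sample predictions are invented for illustration.

```python
import math


def accuracy(predicted_labels, true_labels):
    """Fraction of examples where the predicted label matches the true one."""
    correct = sum(1 for p, t in zip(predicted_labels, true_labels) if p == t)
    return correct / len(true_labels)


def cross_entropy(probabilities, true_labels, eps=1e-12):
    """Mean negative log-probability assigned to the true class.

    `eps` guards against log(0) when a probability is exactly zero.
    """
    total = 0.0
    for probs, label in zip(probabilities, true_labels):
        total += -math.log(max(probs[label], eps))
    return total / len(true_labels)


# Made-up class probabilities for four examples and two classes.
probabilities = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8], [0.45, 0.55]]
true_labels = [0, 1, 1, 1]

# Predicted label = index of the highest probability.
predicted_labels = [max(range(len(p)), key=p.__getitem__) for p in probabilities]

acc = accuracy(predicted_labels, true_labels)
loss = cross_entropy(probabilities, true_labels)
print(f"accuracy={acc:.2f}  loss={loss:.4f}")
```

Accuracy only registers whether a prediction is right or wrong, whereas the loss also reflects confidence: a correct prediction made with probability 0.55 contributes more loss than one made with probability 0.9.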

Fine-tune hyperparameters such as learning rate and batch size for optimal performance.

To maximise the performance of a Convolutional Neural Network, tune hyperparameters such as the learning rate and batch size. The learning rate sets the size of each weight update: too small and training converges slowly, too large and it can oscillate or diverge. The batch size is the number of examples used to estimate the gradient at each step: larger batches give less noisy estimates and make better use of parallel hardware but need more memory, while smaller batches produce noisier updates. The two settings interact, so tune them together and compare candidates on validation data rather than on training performance alone.
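The effect of the learning rate can be seen on a problem far simpler than a CNN. The sketch below minimises the toy function f(w) = w² with plain gradient descent at three learning rates, and also shows how the batch size determines the number of weight updates per pass over the data. The function names and the specific values are illustrative assumptions; suitable values for a real network have to be found experimentally.

```python
import math


def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimise f(w) = w**2 (gradient 2*w) and return the final w."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2.0 * w
    return w


def updates_per_epoch(num_examples, batch_size):
    """Number of weight updates in one pass over the training data."""
    return math.ceil(num_examples / batch_size)


too_small = gradient_descent(0.01)  # creeps towards the minimum at 0
reasonable = gradient_descent(0.1)  # gets close to 0 within 20 steps
too_large = gradient_descent(1.1)   # overshoots further on every step

print(f"lr=0.01 -> w={too_small:.4f}")
print(f"lr=0.10 -> w={reasonable:.4f}")
print(f"lr=1.10 -> w={too_large:.4f}")

# Smaller batches mean more (and noisier) updates per epoch.
print(updates_per_epoch(1000, 32), updates_per_epoch(1000, 256))
```

With the largest learning rate each step lands further from the minimum than the last, which is the divergence that shows up in practice as a loss that grows or becomes NaN.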