Exploring Examples of Unsupervised Learning in Machine Learning

Examples of Unsupervised Learning

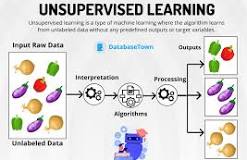

Unsupervised learning is a type of machine learning in which a model learns patterns from unlabelled data, with no target outputs to guide it. The algorithm has to discover hidden structures and relationships within the data on its own. Here are some examples of unsupervised learning:

Clustering

Clustering is a common unsupervised learning technique that groups similar data points together based on their characteristics. One popular clustering algorithm is K-means, which partitions the data into k clusters by minimising the sum of squared distances between each data point and the centroid of the cluster it is assigned to.
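
A minimal sketch of K-means in plain Python, assuming the points are equal-length tuples of numbers and using Lloyd's algorithm with randomly chosen initial centroids. The function name `kmeans`, its parameters and the sample points are illustrative, not taken from any library. The loop alternates the two steps that reduce the total squared distance to the centroids: assign each point to its nearest centroid, then move each centroid to the mean of its points.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Lloyd's algorithm for K-means on a list of equal-length tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialise with k distinct data points
    labels = []
    for _ in range(max_iter):
        # Assignment step: attach every point to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                updated.append(centroids[c])  # leave an empty cluster's centroid where it is
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    return centroids, labels


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

Lloyd's algorithm never increases the within-cluster sum of squares, but it stops at a local optimum that depends on the starting centroids, which is why implementations commonly run it from several random starts and keep the best result.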

Anomaly Detection

Anomaly detection is another application of unsupervised learning, where the algorithm identifies outliers or anomalies in the data that do not conform to normal patterns. This can be useful for detecting fraudulent transactions, network intrusions, or other unusual events.
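
One of the simplest unsupervised anomaly detectors is a z-score rule: flag values that lie unusually many standard deviations from the mean. The sketch below assumes one-dimensional numeric data; the function name, the threshold and the transaction amounts are made up for illustration, and real fraud or intrusion detection relies on far richer features.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # population standard deviation
    if std == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mean) / std > threshold]


amounts = [20, 22, 19, 21, 23, 20, 18, 22, 21, 500]  # made-up transaction amounts
print(zscore_outliers(amounts, threshold=2.5))  # [9]
```

The threshold of 2.5 is deliberate: with only ten values, the single extreme point inflates the standard deviation so much that its z-score cannot reach 3 (with the population standard deviation, no z-score can exceed sqrt(n - 1), which is 3 here), so the common cut-off of 3 would miss it. Robust variants based on the median and the median absolute deviation are less affected by this.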

Dimensionality Reduction

Dimensionality reduction techniques like Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) are used in unsupervised learning to reduce the number of features in a dataset while preserving important information. Both help visualise high-dimensional data; PCA in particular can also make downstream models more efficient and sometimes more accurate, whereas t-SNE is used almost exclusively for visualisation.

Association Rule Learning

Association rule learning is a method used to discover interesting relationships between variables in large datasets. One well-known algorithm in this category is Apriori, which identifies frequent itemsets in transactional data and generates association rules based on their co-occurrence.
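
A compact sketch of the frequent-itemset phase of Apriori, assuming transactions are given as Python sets and support is measured as the fraction of transactions containing an itemset; the baskets and the `min_support` value are invented. It relies on the Apriori property: an itemset can only be frequent if all of its subsets are, so candidates with an infrequent subset are pruned before their support is counted.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset whose support is at least min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        # Fraction of transactions that contain every item in the itemset.
        return sum(1 for b in baskets if itemset <= b) / n

    # Level 1: frequent individual items.
    items = {item for b in baskets for item in b}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in current}
    k = 2
    while current:
        # Join step: union pairs of frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step (Apriori property): every (k-1)-subset of a frequent itemset is frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update({s: support(s) for s in current})
        k += 1
    return frequent


transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "eggs"},
    {"bread", "milk"},
]
for itemset, sup in sorted(apriori(transactions, min_support=0.4).items(), key=lambda kv: -kv[1]):
    print(sorted(itemset), sup)
```

Association rules such as {butter} → {bread} are then generated from these frequent itemsets, keeping only the rules whose confidence clears a second threshold.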

In conclusion, unsupervised learning plays a crucial role in exploring unlabelled data and extracting meaningful insights without explicit guidance. Using techniques such as clustering, anomaly detection, dimensionality reduction, and association rule learning, machine learning models can uncover patterns and relationships that may not be apparent to supervised techniques, which learn only to predict labels supplied in advance.

Exploring Unsupervised Learning: 9 Key Techniques and Their Applications

- Clustering is a key example of unsupervised learning, where data points are grouped based on similarity.

- Dimensionality reduction techniques like PCA help in reducing the number of variables while retaining important information.

- Anomaly detection uses unsupervised learning to identify unusual patterns that do not conform to expected behaviour.

- Association rule mining finds interesting relationships between variables in large databases, often used in market basket analysis.

- Self-organising maps (SOMs) provide a way to visualise high-dimensional data by mapping it onto a lower-dimensional grid.

- Hierarchical clustering creates a tree-like structure of nested clusters, useful for understanding the data’s natural grouping.

- Gaussian mixture models can be used to model normally distributed subpopulations within an overall population without labels.

- t-SNE is effective for visualising complex datasets by reducing dimensions while preserving local neighbourhood structure, although distances between clusters in its output are less reliable.

- Autoencoders learn efficient codings of input data and can be used for tasks like noise reduction or feature extraction.

Clustering is a key example of unsupervised learning, where data points are grouped based on similarity.

Clustering is a fundamental example of unsupervised learning: the algorithm groups data points by their similarity to one another. Because no labels are involved, the resulting groups reflect structure already present in the dataset rather than categories defined in advance.

Dimensionality reduction techniques like PCA help in reducing the number of variables while retaining important information.

Dimensionality reduction techniques such as Principal Component Analysis (PCA) reduce the number of variables in a dataset while preserving as much of its important information as possible. PCA does this by projecting the data onto a small number of new, uncorrelated axes, the principal components, chosen in turn to capture as much of the remaining variance as possible. The lower-dimensional result is easier to visualise and analyse, and it can make downstream machine learning algorithms faster and sometimes more accurate, at the cost of discarding the variance carried by the dropped components.
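
To make the projection step concrete, here is a dependency-free sketch that finds the first principal component by power iteration on the covariance matrix and projects two-dimensional points onto it, reducing each point to a single score. The helper name and the data are illustrative assumptions; in practice a library routine such as scikit-learn's `PCA`, which computes components via singular value decomposition, would be used instead.

```python
def first_principal_component(data, iterations=200):
    """Estimate PCA's first component by power iteration; return (component, 1-D scores)."""
    n, d = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix (d x d) of the centred data.
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # arbitrary start; must not be orthogonal to the top eigenvector
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    # Project each centred row onto the component: d features become 1 score per row.
    scores = [sum(r[j] * v[j] for j in range(d)) for r in centred]
    return v, scores


data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
component, scores = first_principal_component(data)
print(component)  # close to (1, 2) / sqrt(5), the direction the points roughly follow
```

Further components can be found by removing (deflating) this direction from the covariance matrix and repeating the iteration.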

Anomaly detection uses unsupervised learning to identify unusual patterns that do not conform to expected behaviour.

Anomaly detection applies unsupervised learning to find observations that deviate from the normal patterns in a dataset. Unsupervised techniques such as clustering and dimensionality reduction can flag as anomalies the points that lie far from every cluster or that are poorly reconstructed from a reduced representation of the data. This capability is valuable in fields such as cybersecurity, fraud detection, and predictive maintenance, where spotting unusual events early helps maintain system integrity and security.
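
A simple distance-based way to flag such outliers is to score each point by how far it lies from its nearest neighbours. This k-nearest-neighbour score is a swapped-in illustration rather than a method named above, and it assumes small in-memory data, Euclidean distance and made-up sensor readings; the function name is hypothetical.

```python
import math


def knn_outlier_scores(points, k=2):
    """Score each point by its mean distance to its k nearest neighbours; higher means more unusual."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


readings = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (0.5, 0.5), (10.0, 10.0)]
scores = knn_outlier_scores(readings, k=2)
print(max(range(len(scores)), key=scores.__getitem__))  # 5, the isolated point
```

Points in dense regions receive small scores; turning the score into an anomaly flag requires a threshold chosen for the application.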

Association rule mining finds interesting relationships between variables in large databases, often used in market basket analysis.

Association rule mining uncovers relationships between variables in large databases. It is frequently applied in market basket analysis, where it identifies items that tend to appear together in the same transaction and expresses them as rules of the form "customers who buy X are likely to also buy Y". Businesses use these rules to understand customer purchasing habits and to make informed decisions about their marketing strategies and product offerings.
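
Market basket rules are usually judged by three numbers: support (how often the items appear together), confidence (how often the consequent appears when the antecedent does) and lift (confidence divided by the consequent's overall frequency). The sketch below computes them for one rule over a made-up list of baskets; the function name is hypothetical.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence and lift of the rule antecedent -> consequent."""
    baskets = [set(t) for t in transactions]
    n = len(baskets)
    a, c = set(antecedent), set(consequent)
    n_a = sum(1 for b in baskets if a <= b)          # baskets containing the antecedent
    n_c = sum(1 for b in baskets if c <= b)          # baskets containing the consequent
    n_ac = sum(1 for b in baskets if (a | c) <= b)   # baskets containing both
    support = n_ac / n
    confidence = n_ac / n_a if n_a else 0.0          # estimate of P(consequent | antecedent)
    lift = confidence / (n_c / n) if n_c else 0.0    # > 1: together more often than if independent
    return support, confidence, lift


baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "eggs"},
    {"bread", "milk"},
]
print(rule_metrics(baskets, {"butter"}, {"bread"}))  # (0.4, 1.0, 1.25)
```

Here every basket containing butter also contains bread, so confidence is 1.0, and the lift above 1 indicates the two items co-occur more often than their individual frequencies alone would suggest.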

Self-organising maps (SOMs) provide a way to visualise high-dimensional data by mapping it onto a lower-dimensional grid.

Self-organising maps (SOMs) visualise complex, high-dimensional data by mapping it onto a low-dimensional (usually two-dimensional) grid of nodes. Training arranges the grid so that similar inputs map to the same or neighbouring nodes, which makes patterns and relationships that are hard to see in the original dataset easier to explore and interpret.
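
A small from-scratch sketch of SOM training, assuming Euclidean distance, a Gaussian neighbourhood, and a learning rate and radius that both decay linearly (common choices, but only one of several schedules in use). It maps made-up three-dimensional RGB colours onto a 4-by-4 grid; the function names and parameters are illustrative.

```python
import math
import random


def best_matching_unit(weights, x):
    """Grid position (row, col) of the node whose weight vector is closest to x."""
    cells = [(r, c) for r in range(len(weights)) for c in range(len(weights[0]))]
    return min(cells, key=lambda rc: math.dist(weights[rc[0]][rc[1]], x))


def train_som(data, rows, cols, epochs=100, lr0=0.5, seed=0):
    """Train a rows x cols self-organising map and return its grid of weight vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[[rng.random() for _ in range(dim)] for _ in range(cols)] for _ in range(rows)]
    radius0 = max(rows, cols) / 2
    for epoch in range(epochs):
        # Learning rate and neighbourhood radius both shrink linearly over training.
        frac = epoch / epochs
        lr = lr0 * (1 - frac)
        radius = radius0 * (1 - frac) + 1e-3
        for x in rng.sample(data, len(data)):  # visit the inputs in a shuffled order
            br, bc = best_matching_unit(weights, x)
            for r in range(rows):
                for c in range(cols):
                    # Gaussian neighbourhood on the grid: nodes near the BMU move the most.
                    grid_d2 = (r - br) ** 2 + (c - bc) ** 2
                    g = math.exp(-grid_d2 / (2 * radius ** 2))
                    w = weights[r][c]
                    for k in range(dim):
                        w[k] += lr * g * (x[k] - w[k])
    return weights


# Made-up RGB colours in [0, 1]: two reds, two greens, two blues.
colours = [(1.0, 0.0, 0.0), (0.9, 0.1, 0.0), (0.0, 1.0, 0.0),
           (0.1, 0.9, 0.0), (0.0, 0.0, 1.0), (0.0, 0.1, 0.9)]
som = train_som(colours, rows=4, cols=4)
for colour in colours:
    print(colour, "->", best_matching_unit(som, colour))
```

The broad neighbourhood early in training pulls the whole grid into a rough ordering; as it narrows, individual nodes specialise, so similar colours typically end up on the same or nearby cells while reds and blues land in different parts of the map.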

Hierarchical clustering creates a tree-like structure of nested clusters, useful for understanding the data’s natural grouping.

Hierarchical clustering builds a tree-like structure of nested clusters, usually drawn as a dendrogram. The common agglomerative variant starts with every data point in its own cluster and repeatedly merges the two closest clusters, so the tree records how individual points join into subclusters and then into larger clusters. Cutting the tree at different heights yields different numbers of clusters, which makes the method useful for exploring the natural hierarchy of a dataset without fixing the number of clusters in advance.
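
The agglomerative procedure can be sketched in a few lines, assuming Euclidean distance and single linkage (the distance between two clusters is that of their closest pair of points); passing any other `linkage` value switches to complete linkage. The function name and data are illustrative, and this brute-force version is far slower than library implementations. Each merge is recorded as `(cluster_a, cluster_b, distance, size)`, a layout similar to the linkage matrix returned by SciPy's `scipy.cluster.hierarchy.linkage`.

```python
import math


def agglomerative(points, linkage="single"):
    """Agglomerative clustering; returns merges as (cluster_a, cluster_b, distance, size)."""
    clusters = {i: [i] for i in range(len(points))}  # cluster id -> indices of its points
    merges = []
    next_id = len(points)  # merged clusters get new ids n, n + 1, ...
    combine = min if linkage == "single" else max  # single or complete linkage
    while len(clusters) > 1:
        ids = list(clusters)
        best = None
        # Find the closest pair of clusters under the chosen linkage.
        for x in range(len(ids)):
            for y in range(x + 1, len(ids)):
                a, b = ids[x], ids[y]
                d = combine(math.dist(points[i], points[j])
                            for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        clusters[next_id] = clusters.pop(a) + clusters.pop(b)
        merges.append((a, b, d, len(clusters[next_id])))
        next_id += 1
    return merges


points = [(0.0,), (1.0,), (5.0,), (6.0,), (20.0,)]
for merge in agglomerative(points):
    print(merge)
```

On these one-dimensional points, the values 0 and 1 merge first, then 5 and 6, then the two pairs join at distance 4, and the isolated point at 20 joins last at distance 14; cutting the tree below that height leaves it as a cluster of its own.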

Gaussian mixture models can be used to model normally distributed subpopulations within an overall population without labels.

Gaussian mixture models (GMMs) describe a dataset as a mixture of several normal (Gaussian) distributions, each with its own mean, variance (a covariance matrix in higher dimensions) and mixing weight, and they can estimate these subpopulations even when no labels are available. Instead of assigning each point to a single group, a GMM gives every point a probability of belonging to each component, which makes it more flexible than K-means when clusters overlap or differ in size and shape.
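
As an illustration of how such a model can be fitted without labels, here is a one-dimensional sketch of the expectation-maximisation (EM) algorithm, the usual fitting method for Gaussian mixtures. It assumes a known number of components `k`, a simple deterministic initialisation and made-up data; the names are hypothetical, and a library implementation (for example scikit-learn's `GaussianMixture`) adds multivariate covariances, multiple initialisations and convergence checks.

```python
import math


def normal_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def gmm_em_1d(xs, k=2, iterations=100):
    """Fit a 1-D Gaussian mixture with EM; returns (weights, means, variances)."""
    n = len(xs)
    mu = sum(xs) / n
    # Simple initialisation: means spread across the sorted data, shared overall variance.
    s = sorted(xs)
    means = [s[int((j + 0.5) * n / k)] for j in range(k)]
    variances = [sum((x - mu) ** 2 for x in xs) / n] * k
    weights = [1.0 / k] * k
    for _ in range(iterations):
        # E-step: responsibilities, the probability that component j generated each x.
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(p)
            resp.append([pj / total for pj in p])
        # M-step: re-estimate each component from its responsibility-weighted points.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            # Small floor keeps a variance from collapsing to zero.
            variances[j] = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj + 1e-6
    return weights, means, variances


# Made-up unlabelled 1-D data from two groups, around 1.0 and around 5.0.
xs = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
weights, means, variances = gmm_em_1d(xs)
print(weights, means)
```

The responsibilities computed in the E-step are the soft cluster memberships; each EM iteration does not decrease the data's likelihood, but, as with K-means, the result can depend on the initialisation.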

t-SNE is effective for visualising complex datasets by reducing dimensions while preserving local structure.

t-SNE is a non-linear dimensionality reduction technique used mainly to visualise high-dimensional datasets in two or three dimensions. It focuses on preserving local structure, so points that are close together in the original space tend to stay close in the embedding. Distances between clusters and the apparent sizes of clusters in a t-SNE plot are much less reliable, so the output is best used for exploring patterns rather than for measuring global relationships.

Autoencoders learn efficient codings of input data and can be used for tasks like noise reduction or feature extraction.

Autoencoders are neural networks that learn compact representations of their input. An encoder compresses each input into a lower-dimensional code, and a decoder tries to reconstruct the original input from that code; training minimises the reconstruction error, which forces the code to capture the most important features of the data. The learned code can be used for feature extraction, and denoising autoencoders, which are trained to reconstruct clean inputs from deliberately corrupted ones, can be used for noise reduction.
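
Practical autoencoders are deep, non-linear networks built with a framework such as PyTorch or Keras. As a toy illustration of the encode-compress-decode idea only, the sketch below trains a linear autoencoder with a one-number bottleneck by plain gradient descent on squared reconstruction error. The architecture, learning rate, epoch count and the made-up points on the line y = 2x are all assumptions chosen to keep the example small; the function names are hypothetical.

```python
import random


def train_linear_autoencoder(data, lr=0.01, epochs=500, seed=0):
    """Train a 2 -> 1 -> 2 linear autoencoder with per-sample gradient descent on squared error."""
    rng = random.Random(seed)
    enc = [rng.uniform(-1, 1) for _ in range(2)]  # encoder weights: input -> 1-D code
    dec = [rng.uniform(-1, 1) for _ in range(2)]  # decoder weights: code -> reconstruction
    for _ in range(epochs):
        for x in data:
            h = enc[0] * x[0] + enc[1] * x[1]            # the bottleneck code
            err = [dec[i] * h - x[i] for i in range(2)]  # reconstruction minus input
            grad_h = sum(2 * err[i] * dec[i] for i in range(2))  # d(loss)/d(h)
            for i in range(2):
                dec[i] -= lr * 2 * err[i] * h
                enc[i] -= lr * grad_h * x[i]
    return enc, dec


def encode(enc, x):
    return enc[0] * x[0] + enc[1] * x[1]


def decode(dec, h):
    return [dec[0] * h, dec[1] * h]


# Made-up 2-D training points that lie on the line y = 2x.
line = [(t / 5, 2 * t / 5) for t in range(-5, 6)]
enc, dec = train_linear_autoencoder(line)
code = encode(enc, (0.5, 1.0))  # a single extracted feature
print(code, decode(dec, code))   # reconstruction should be close to (0.5, 1.0)
```

With linear layers and squared error, an autoencoder learns the same subspace as PCA; non-linear activations and deeper encoders are what allow practical autoencoders to learn richer codes.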