Unleashing Creativity: The Power of Generative Deep Learning

Exploring Generative Deep Learning

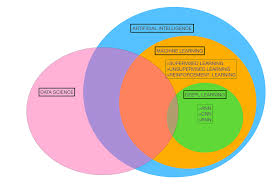

Generative deep learning is a fascinating field within artificial intelligence that focuses on building models capable of generating new data. Discriminative models are trained for classification or regression, predicting a label or value from an input. Generative models instead learn the underlying distribution of the training data, so they can produce new samples that share its complex patterns.

One of the most popular techniques in generative deep learning is the Generative Adversarial Network (GAN). A GAN consists of two neural networks, a generator and a discriminator, that are trained against each other. The generator turns random noise into new data samples, while the discriminator tries to distinguish real samples from generated ones.

Through this adversarial process, GANs can learn to create realistic data samples that closely resemble the original training data. This has led to impressive applications in image generation, text generation, and even music composition.
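The adversarial objective can be made concrete with a small sketch of the standard GAN losses in plain Python. It assumes the discriminator outputs a probability in (0, 1) that its input is real. The generator loss shown is the widely used "non-saturating" variant. The function names are illustrative and not taken from any library. In a real framework these losses would be computed on batches of tensors, with the discriminator and generator updated in alternating steps.

```python
import math


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """The discriminator is rewarded for D(real) -> 1 and D(fake) -> 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator is rewarded for D(fake) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A discriminator that separates real from fake well has a low loss, while
# the generator's loss is high, which pushes the generator to improve.
d_real = [0.9, 0.8]  # D's outputs on real samples
d_fake = [0.1, 0.2]  # D's outputs on generated samples
print("D loss:", discriminator_loss(d_real, d_fake))
print("G loss:", generator_loss(d_fake))
```

At the theoretical equilibrium the discriminator outputs 0.5 for every input. This gives a discriminator loss of 2 ln 2, about 1.386, under this formulation.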

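Another approach to generative deep learning is the Variational Autoencoder (VAE). VAEs are probabilistic models that learn the underlying distribution of the input data. An encoder maps each input to a distribution over a lower-dimensional latent space, and a decoder reconstructs the input from a point sampled from that distribution. Training balances reconstruction quality against a penalty term, a KL divergence, that keeps the latent distributions close to a simple prior, usually a standard normal distribution. To generate new data points with similar characteristics, you sample from the prior and decode the samples.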
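Two ingredients separate a VAE from a plain autoencoder: sampling from the encoder's output, and a KL penalty that pulls that output towards the prior. Both can be sketched in a few lines of plain Python. The sketch assumes the common setup in which the encoder outputs a mean `mu` and a log-variance `log_var` for each latent dimension, and the prior is a standard normal. The encoder and decoder networks themselves are omitted, and the function names are illustrative.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu and
    log_var, so the encoder can be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def vae_loss(reconstruction_error, mu, log_var):
    """Negative evidence lower bound (ELBO): reconstruction term plus KL penalty."""
    return reconstruction_error + kl_to_standard_normal(mu, log_var)


rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs for one input
z = reparameterize(mu, log_var, rng)  # latent code that would be passed to the decoder
print("z =", z)
print("KL =", kl_to_standard_normal(mu, log_var))

# Generation after training: sample from the prior and decode (decoder not shown).
z_new = [rng.gauss(0.0, 1.0) for _ in range(len(mu))]
```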

The potential applications of generative deep learning are vast and diverse. From generating realistic images for design purposes to creating synthetic data for training machine learning models, generative models offer exciting possibilities for innovation across various industries.

As researchers continue to explore and refine generative deep learning techniques, we can expect even more impressive advancements in artificial intelligence and creative expression. The journey of exploring generative deep learning is an exciting one, filled with endless opportunities for discovery and innovation.

9 Essential Tips for Mastering Generative Deep Learning

- Understand the basics of neural networks

- Learn about different types of generative deep learning models such as GANs and VAEs

- Experiment with various architectures and hyperparameters

- Preprocess your data carefully to ensure good model performance

- Regularize your models to prevent overfitting

- Use appropriate evaluation metrics for generative models such as Inception Score or Fréchet Inception Distance (FID)

- Consider using transfer learning to leverage pre-trained models

- Stay up-to-date with the latest research in generative deep learning

- Practice, practice, practice – hands-on experience is key

Understand the basics of neural networks

Understanding the basics of neural networks is crucial when delving into generative deep learning. Neural networks serve as the fundamental building blocks of many generative models, enabling them to learn complex patterns and generate new data. By grasping the core concepts of neural networks, such as layers, activation functions, and backpropagation, individuals can gain a solid foundation for exploring and creating innovative generative deep learning applications.
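Those three ideas can be illustrated with a minimal sketch that trains a single artificial neuron to reproduce logical OR. The neuron is one "layer" with two weights and a bias. It uses a sigmoid activation, and its gradients are derived by hand with the chain rule, which is the step backpropagation automates for deeper networks. The sketch is plain Python for readability. Frameworks such as PyTorch or TensorFlow compute these gradients automatically.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs=2000, lr=0.5, seed=0):
    """Train one sigmoid neuron (two weights + bias) with per-example gradient descent."""
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in data:
            # Forward pass: weighted sum of inputs, then the sigmoid activation.
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # Backward pass: with binary cross-entropy loss, the chain rule gives
            # dLoss/dz = p - y, and dLoss/dw_i = (p - y) * x_i.
            grad_z = p - y
            w[0] -= lr * grad_z * x1
            w[1] -= lr * grad_z * x2
            b -= lr * grad_z
    return w, b


def predict(w, b, x1, x2):
    return sigmoid(w[0] * x1 + w[1] * x2 + b)


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
for (x1, x2), y in OR_DATA:
    print((x1, x2), "target", y, "prediction", round(predict(w, b, x1, x2), 3))
```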

Learn about different types of generative deep learning models such as GANs and VAEs

To delve into the realm of generative deep learning, it is essential to familiarise oneself with the main families of models, including Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). GANs rely on a competitive interplay between a generator and a discriminator to produce realistic data samples. VAEs, on the other hand, learn a probabilistic latent space: inputs are encoded into it, and new data points are generated by decoding samples drawn from it. Exploring these distinct approaches gives a clearer picture of how generative models learn and reproduce complex patterns in data.

Experiment with various architectures and hyperparameters

When delving into the realm of generative deep learning, it is essential to experiment with a range of architectures and hyperparameters to find the settings that work best for your specific task. Try different network structures, activation functions, and layer configurations, and tune hyperparameters such as the learning rate and batch size. This can improve your model’s performance and the quality of its outputs. GANs in particular are known to be sensitive to these choices, so this iterative experimentation also builds a practical understanding of how generative models behave.
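A simple way to organise such experiments is a grid search over a dictionary of candidate values. The sketch below assumes you can wrap a training run in a function that takes a configuration and returns a validation score where lower is better, such as FID. The `toy_evaluate` function is a hypothetical stand-in for a real training run: it runs gradient descent on f(x) = (x - 3)^2 and reports the final loss. The search-space keys are illustrative.

```python
import itertools


def grid_search(evaluate, search_space):
    """Try every combination in search_space and return (best_config, best_score).

    Assumes evaluate(config) returns a score where lower is better.
    """
    names = list(search_space)
    best_config, best_score = None, float("inf")
    for values in itertools.product(*(search_space[n] for n in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score < best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config):
    """Hypothetical stand-in for training: gradient descent on f(x) = (x - 3)^2."""
    x = 0.0
    for _ in range(config["steps"]):
        x -= config["learning_rate"] * 2.0 * (x - 3.0)  # gradient of (x - 3)^2
    return (x - 3.0) ** 2


space = {"learning_rate": [1e-3, 1e-2, 1e-1, 1.1], "steps": [10, 100]}
best, score = grid_search(toy_evaluate, space)
print(best, score)
```

In this toy, a learning rate of 1.1 makes the updates diverge and 1e-3 barely moves, so the search settles on 0.1. The same failure modes appear, less predictably, when training real generative models. For larger search spaces, random search over the same dictionary is a common, cheaper alternative to trying every combination.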

Preprocess your data carefully to ensure good model performance

When delving into the realm of generative deep learning, it is crucial to pay meticulous attention to preprocessing your data. The quality and structure of the input data strongly influence how well your models train. Thoughtful preprocessing steps can significantly improve the model’s ability to learn meaningful patterns and generate high-quality outputs. These steps include handling missing values, removing outliers, and normalising data, for example scaling image pixels to the [-1, 1] range expected by a generator with a tanh output layer. Investing time in thorough data preprocessing lays a solid foundation for successful outcomes in generative deep learning endeavours.
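The three steps mentioned above can be sketched for a single numeric feature in plain Python. The sketch assumes missing values are represented as `None` and uses a z-score rule for outliers. It then scales to [-1, 1] on the assumption that the generator ends in a tanh layer. Outliers are removed before imputing, so an extreme value cannot distort the mean used to fill the gaps. The function name and threshold are illustrative.

```python
import statistics


def preprocess(values, z_threshold=3.0):
    """Drop outliers, impute missing values (None), then scale to [-1, 1]."""
    observed = [v for v in values if v is not None]
    mu = statistics.fmean(observed)
    sigma = statistics.pstdev(observed)

    # 1. Drop observed values more than z_threshold standard deviations from the mean.
    def is_outlier(v):
        return sigma > 0 and abs(v - mu) / sigma > z_threshold

    cleaned = [v for v in values if v is None or not is_outlier(v)]

    # 2. Impute missing values with the mean of the remaining observed values.
    fill = statistics.fmean([v for v in cleaned if v is not None])
    filled = [fill if v is None else v for v in cleaned]

    # 3. Min-max scale to [-1, 1], matching a generator with a tanh output layer.
    lo, hi = min(filled), max(filled)
    if hi == lo:
        return [0.0 for _ in filled]
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in filled]


raw = [1, 2, 3, 4, 5] * 4 + [None, 1000]  # one missing value, one outlier
print(preprocess(raw))
```

The z-score rule needs a reasonable number of samples. With the population standard deviation used here, no value can score above √(n - 1), so for fewer than 11 points a threshold of 3 never triggers.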

Regularize your models to prevent overfitting

Regularizing your models is a crucial tip in generative deep learning to prevent overfitting. Overfitting occurs when a model learns the training data too well, capturing noise and irrelevant patterns that do not generalize to unseen data. A generative model that overfits may simply reproduce close copies of its training examples. Regularization techniques such as L1 or L2 regularization, dropout, or early stopping let you control the complexity of your models and improve their ability to generalize to new data. Regularization helps strike a balance between fitting the training data well and avoiding overfitting, ultimately enhancing the performance and robustness of your generative deep learning models.
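Three of these techniques can be sketched in plain Python without a framework:

- an L2 penalty added to the loss
- inverted dropout, the variant used by common frameworks such as PyTorch
- a simple early-stopping monitor

These are minimal illustrations that assume weights and activations are plain lists of floats. The names and the `patience`/`min_delta` parameters are illustrative, not a specific library's API.

```python
import random


def l2_penalty(weights, lam):
    """L2 regularization term, lam * sum(w^2), added to the training loss."""
    return lam * sum(w * w for w in weights)


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each activation with probability p during training
    and scale the survivors by 1 / (1 - p) so the expected value is unchanged."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation losses from successive epochs.
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, val_loss in enumerate([1.0, 0.8, 0.7, 0.71, 0.72, 0.69]):
    if stopper.step(val_loss):
        stopped_at = epoch
        break
print("stopped at epoch", stopped_at, "best loss", stopper.best)
```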

Use appropriate evaluation metrics for generative models such as Inception Score or Fréchet Inception Distance (FID)

When working with generative deep learning models, it is crucial to use appropriate evaluation metrics to assess the quality of the generated outputs. Metrics such as Inception Score (IS) and Fréchet Inception Distance (FID) are widely used to measure the realism and diversity of generated images.

Inception Score feeds generated images to a pre-trained Inception classifier. It rewards sets in which each image is classified confidently, suggesting recognisable content, and in which the predicted classes spread across many categories, suggesting diversity. Higher is better.

FID compares real and generated images directly. It fits a Gaussian to the Inception feature activations of each set and measures the Fréchet distance between the two Gaussians. Lower is better.

These metrics give researchers valuable insight into how well their generative models work and help them make informed decisions to improve them.
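Both metrics are simple to express once the classifier outputs or features are available. The sketch below, in plain Python, computes Inception Score from a list of per-image class-probability vectors. These are assumed to come from a pre-trained classifier; the Inception network itself is not included. The full FID fits multivariate Gaussians to Inception feature vectors, typically 2048-dimensional, and computes ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2(Sigma_r Sigma_g)^(1/2)). The second function implements only the one-dimensional special case of that formula, to show its structure without matrix square roots. Function names are illustrative.

```python
import math
import statistics


def inception_score(probs, eps=1e-12):
    """IS = exp(mean over images of KL(p(y|x) || p(y))).

    probs[i][k] is the classifier's probability that generated image i has class k.
    """
    n, k = len(probs), len(probs[0])
    marginal = [sum(p[j] for p in probs) / n for j in range(k)]  # p(y)
    mean_kl = sum(
        sum(pj * math.log((pj + eps) / (marginal[j] + eps)) for j, pj in enumerate(p))
        for p in probs
    ) / n
    return math.exp(mean_kl)


def frechet_distance_1d(real, generated):
    """Fréchet distance between 1-D Gaussians fitted to two samples:
    (mu_r - mu_g)^2 + (sigma_r - sigma_g)^2, the 1-D case of the FID formula."""
    mu_r, mu_g = statistics.fmean(real), statistics.fmean(generated)
    sd_r, sd_g = statistics.pstdev(real), statistics.pstdev(generated)
    return (mu_r - mu_g) ** 2 + (sd_r - sd_g) ** 2


# Confident, diverse predictions reach the maximum score (the number of classes).
print(inception_score([[1.0, 0.0], [0.0, 1.0]]))  # about 2.0
# Identical, uninformative predictions give the minimum score.
print(inception_score([[0.5, 0.5], [0.5, 0.5]]))  # 1.0
print(frechet_distance_1d([0.0, 2.0], [2.0, 4.0]))  # 4.0
```

Published comparisons normally use an established implementation and a large number of samples. Both scores are sensitive to sample size and to implementation details.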

Consider using transfer learning to leverage pre-trained models

Transfer learning is a powerful technique in generative deep learning that allows practitioners to leverage pre-trained models to enhance the performance and efficiency of their own models. By utilising a model that has already been trained on a large dataset, one can significantly reduce the time and computational resources required for training. This approach is particularly useful when dealing with limited data or when aiming to achieve high accuracy with less effort. Pre-trained models have already captured a wealth of features and patterns, which can be fine-tuned to suit specific tasks, thereby accelerating the development process and improving overall results.
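The core mechanic of fine-tuning is to keep pre-trained weights frozen and train only a new task-specific layer on top of them. This toy plain-Python sketch shows that mechanic. The "pre-trained" layer is a hypothetical stand-in with fixed, hand-picked weights; in practice they would be loaded from a model trained on a large dataset. The new head is a linear layer fitted with stochastic gradient descent on a squared-error loss.

```python
# Hypothetical stand-in for a pre-trained layer: in practice these weights would be
# loaded from a model trained on a large dataset. They stay frozen below.
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def fine_tune_head(data, epochs=500, lr=0.1):
    """Train only a new linear head on the frozen features (squared-error loss, SGD)."""
    head_w, head_b = [0.0] * len(PRETRAINED_W), 0.0
    for _ in range(epochs):
        for x, y in data:
            f = extract_features(x)  # no update is ever applied to PRETRAINED_W
            err = sum(w * fi for w, fi in zip(head_w, f)) + head_b - y
            # Gradient of 0.5 * err^2 with respect to the head's parameters only.
            head_w = [w - lr * err * fi for w, fi in zip(head_w, f)]
            head_b -= lr * err
    return head_w, head_b


# Toy task whose targets happen to equal 2 * feature_0 + 1 * feature_1.
task_data = [((1.0, 0.0), 2.5), ((0.0, 1.0), 0.5), ((1.0, 1.0), 1.0), ((2.0, 1.0), 3.5)]
head_w, head_b = fine_tune_head(task_data)
print("head weights:", head_w, "bias:", head_b)  # close to [2.0, 1.0] and 0.0
```

In PyTorch, the equivalent is typically to set `requires_grad = False` on the pre-trained parameters and pass only the new layer's parameters to the optimizer. Keras offers `layer.trainable = False` for the same purpose.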

Stay up-to-date with the latest research in generative deep learning

Staying up-to-date with the latest research in generative deep learning is crucial for anyone looking to explore this cutting-edge field. The rapid pace of advancements in generative models, such as GANs and VAEs, means that new techniques and breakthroughs are constantly emerging. By keeping abreast of the latest research papers, developments, and trends in generative deep learning, enthusiasts and professionals can stay informed about the state-of-the-art methods and applications, ensuring they remain at the forefront of innovation and creativity within the realm of artificial intelligence.

Practice, practice, practice – hands-on experience is key

In the realm of generative deep learning, the mantra “practice, practice, practice” rings true as hands-on experience proves to be key in mastering the intricacies of this field. By actively engaging with generative models, experimenting with different architectures, and fine-tuning parameters through practical application, one can gain a deeper understanding of how these models work and how to harness their creative potential. Through consistent practice and exploration, individuals can sharpen their skills, uncover new insights, and push the boundaries of generative deep learning to unlock innovative solutions and possibilities.