Exploring the Potential of GNNs in Machine Learning: A Comprehensive Overview

The Power of Graph Neural Networks in Machine Learning

Graph Neural Networks (GNNs) have emerged as a powerful tool in the field of machine learning, offering new ways to model and analyse complex relational data. Unlike traditional neural networks that operate on grid-like data structures, GNNs are designed to work with graph-structured data, such as social networks, citation networks, molecular structures, and more.

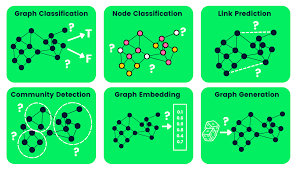

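One of the key strengths of GNNs is their ability to capture the relationships and interactions between entities in a graph. In each layer, every node aggregates information from its neighbouring nodes (a process known as message passing) and combines it with its own features, so after k layers a node's representation reflects its k-hop neighbourhood. Pooling the node representations into a single vector (a readout step) then yields a representation of the whole graph. This makes GNNs well suited to tasks such as node classification, link prediction, and graph classification.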
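To make message passing concrete, here is a minimal sketch in plain Python. It assumes a graph stored as an adjacency list, one feature vector per node, and a single weight matrix shared by all nodes; the helper names (`mean_aggregate`, `message_passing_layer`) are illustrative and not taken from any library. Mean aggregation is only one possible choice; sum and max aggregation are also common.

```python
# Minimal message-passing layer in plain Python (illustrative sketch, no ML library).
# Assumptions: adjacency is a dict node -> list of neighbours, features is a dict
# node -> list of floats, and W is a weight matrix given as a list of rows.

def mean_aggregate(features, adjacency, node):
    """Average the feature vectors of a node and its neighbours."""
    group = [node] + adjacency[node]
    dim = len(features[node])
    return [sum(features[v][d] for v in group) / len(group) for d in range(dim)]

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(x):
    return [max(0.0, v) for v in x]

def message_passing_layer(features, adjacency, W):
    """One GNN layer: aggregate each neighbourhood, transform with the shared W, apply ReLU."""
    return {
        node: relu(matvec(W, mean_aggregate(features, adjacency, node)))
        for node in adjacency
    }

# Toy path graph 0 - 1 - 2 with 2-dimensional node features.
adjacency = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, so each output is just the neighbourhood mean

h1 = message_passing_layer(features, adjacency, W)  # layer 1: node 0 sees nodes 0 and 1
h2 = message_passing_layer(h1, adjacency, W)        # layer 2: node 0 now also sees node 2
print(h1[0])  # [0.5, 0.5]
```

After one layer, node 0's representation depends only on nodes 0 and 1; after the second layer it also depends on node 2, which is two hops away.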

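Another advantage of GNNs is their flexibility. Because the same learned weights are shared across all nodes, a trained GNN can be applied to graphs of varying sizes and structures, which makes GNNs suitable for a wide range of applications across different domains. Scaling to very large graphs takes more care, since the number of nodes involved in computing one node's representation can grow quickly with each layer; techniques such as neighbour sampling and mini-batch training are commonly used to keep this cost manageable. Researchers have successfully used GNNs in areas such as social network analysis, recommendation systems, and bioinformatics.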
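One widely used way to bound the per-node cost on large graphs is neighbour sampling, popularised by GraphSAGE: each layer looks at a fixed maximum number of randomly sampled neighbours rather than all of them. The sketch below assumes an adjacency-list graph; `fanouts` and the function names are hypothetical, and real libraries also deduplicate nodes and batch the sampled subgraphs, which this sketch does not.

```python
import random

def sample_neighbours(adjacency, node, k, rng):
    """Return at most k neighbours of node, sampled uniformly without replacement."""
    neighbours = adjacency[node]
    if len(neighbours) <= k:
        return list(neighbours)
    return rng.sample(neighbours, k)

def sample_computation_graph(adjacency, seed_nodes, fanouts, rng):
    """Sample a layer-by-layer neighbourhood for a mini-batch of seed nodes.

    fanouts[i] is the maximum number of neighbours kept per node at hop i + 1,
    so the work per seed node is bounded by the fanouts, not by node degree.
    Nodes may appear more than once; this sketch does not deduplicate them.
    """
    layers = [list(seed_nodes)]
    frontier = list(seed_nodes)
    for k in fanouts:
        next_frontier = []
        for node in frontier:
            next_frontier.extend(sample_neighbours(adjacency, node, k, rng))
        layers.append(next_frontier)
        frontier = next_frontier
    return layers

# Hub node 0 with 1,000 neighbours; each leaf links back only to the hub.
adjacency = {0: list(range(1, 1001))}
for leaf in range(1, 1001):
    adjacency[leaf] = [0]

rng = random.Random(0)
layers = sample_computation_graph(adjacency, seed_nodes=[0], fanouts=[10, 5], rng=rng)
print([len(layer) for layer in layers])  # [1, 10, 10]
```

Although node 0 has 1,000 neighbours, a 2-layer model with fanouts of 10 and 5 touches at most 1 + 10 + 50 node entries per seed node; here the second hop has only 10 entries because every leaf has a single neighbour.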

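Furthermore, GNN research in recent years has produced more sophisticated architectures and training algorithms. Graph Convolutional Networks (GCNs) aggregate neighbour features using a symmetrically normalised adjacency matrix, Graph Attention Networks (GATs) learn how much weight to give each neighbour, and GraphSAGE learns aggregation functions over sampled neighbourhoods, which lets it produce representations for nodes not seen during training. Each of these architectures reported state-of-the-art results on standard benchmarks when it was introduced.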
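For reference, the per-layer update rules of these three architectures are commonly written as follows. The notation is chosen here for illustration: H^(l) is the matrix of node representations at layer l, h_i is the representation of node i, W denotes learned weights, σ is a nonlinearity such as ReLU, A is the adjacency matrix, and ‖ denotes concatenation. The GraphSAGE rule omits the optional per-node normalisation step.

```latex
% GCN layer: symmetric normalisation of the adjacency matrix with self-loops
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I,\quad \tilde{D}_{ii} = \sum_j \tilde{A}_{ij}

% GAT layer (single head): learned attention over the neighbourhood N(i), which includes i
\alpha_{ij} = \frac{\exp\!\big(\mathrm{LeakyReLU}(\mathbf{a}^{\top}[W h_i \,\Vert\, W h_j])\big)}
                   {\sum_{k \in \mathcal{N}(i)} \exp\!\big(\mathrm{LeakyReLU}(\mathbf{a}^{\top}[W h_i \,\Vert\, W h_k])\big)},
\qquad h_i' = \sigma\!\Big(\sum_{j \in \mathcal{N}(i)} \alpha_{ij}\, W h_j\Big)

% GraphSAGE layer: aggregate over a sampled neighbourhood S(v), then concatenate with the node itself
h_{\mathcal{S}(v)}^{(l+1)} = \mathrm{AGG}^{(l)}\big(\{\, h_u^{(l)} : u \in \mathcal{S}(v) \,\}\big),
\qquad h_v^{(l+1)} = \sigma\!\Big(W^{(l)}\,\big[\, h_v^{(l)} \,\Vert\, h_{\mathcal{S}(v)}^{(l+1)} \big]\Big)
```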

In conclusion, Graph Neural Networks represent a promising direction in machine learning research. Their ability to model complex relationships in graph data opens up new possibilities for solving challenging problems across different domains. As researchers continue to explore and innovate with GNNs, we can expect even more exciting developments in the future.

Essential Tips for Mastering Graph Neural Networks (GNNs) in Machine Learning

- Understand the basics of Graph Neural Networks (GNNs) before diving into advanced concepts.

- Explore different GNN architectures such as Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs).

- Preprocess your graph data effectively to ensure optimal performance of your GNN model.

- Regularise your GNN model to prevent overfitting, especially in scenarios with limited training data.

- Experiment with hyperparameter tuning to improve the performance of your GNN model on specific tasks.

- Stay updated with the latest research and developments in the field of Graph Neural Networks to leverage cutting-edge techniques.

Understand the basics of Graph Neural Networks (GNNs) before diving into advanced concepts.

Before moving on to advanced concepts, it is essential to grasp the fundamentals of GNNs: how a graph is represented (nodes, edges, and node or edge features, typically stored as an adjacency list or adjacency matrix), how a GNN layer updates each node by aggregating messages from its neighbours, and how stacking layers widens the part of the graph that each node's representation depends on. A solid grasp of these core principles makes it much easier to understand advanced architectures and applications, and to reason about why a model behaves the way it does.
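As a starting point, the sketch below shows two of these basics in plain Python: building an adjacency list from an edge list, and computing the set of nodes within k hops of a given node. In a k-layer message-passing GNN, that set is the set of nodes whose features can influence the given node's output. The function names are illustrative, and the graph is assumed to be undirected.

```python
from collections import deque

def build_adjacency(num_nodes, edges):
    """Turn an undirected edge list into an adjacency list (node -> sorted neighbours)."""
    adjacency = {v: set() for v in range(num_nodes)}
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    return {v: sorted(nbrs) for v, nbrs in adjacency.items()}

def k_hop_neighbourhood(adjacency, source, k):
    """Breadth-first search that stops expanding at depth k.

    Returns every node within k hops of source (including source itself), i.e.
    the receptive field of source in a k-layer message-passing GNN.
    """
    distance = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if distance[node] == k:
            continue  # do not expand beyond k hops
        for nbr in adjacency[node]:
            if nbr not in distance:
                distance[nbr] = distance[node] + 1
                queue.append(nbr)
    return set(distance)

# A 5-node path graph: 0 - 1 - 2 - 3 - 4
edges = [(0, 1), (1, 2), (2, 3), (3, 4)]
adjacency = build_adjacency(5, edges)
print(adjacency[2])                                   # [1, 3]
print(sorted(k_hop_neighbourhood(adjacency, 0, 2)))   # [0, 1, 2]
```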

Explore different GNN architectures such as Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs).

To go deeper with GNNs, explore a variety of architectures, including widely used models such as Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs). They differ mainly in how they weight a node's neighbours: a GCN uses fixed coefficients derived from node degrees, while a GAT learns attention weights so that more relevant neighbours contribute more to the update. Comparing such models on your own data shows which of these design choices suits your task best.
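To see how a GAT weights neighbours, here is a minimal single-head sketch of GAT-style attention in plain Python. It follows the usual formulation (LeakyReLU with negative slope 0.2, softmax over the neighbourhood including the node itself) but omits multi-head attention and the final nonlinearity; all names and the toy inputs are illustrative.

```python
import math

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def gat_attention(features, adjacency, node, W, a):
    """Single-head attention weights alpha_ij over node's neighbourhood (including itself).

    e_ij = LeakyReLU(a . [W h_i || W h_j]),  alpha_ij = softmax over j of e_ij.
    `a` must have length 2 * (number of rows of W).
    """
    neighbourhood = [node] + adjacency[node]
    wh = {v: matvec(W, features[v]) for v in neighbourhood}
    scores = {
        j: leaky_relu(sum(ai * x for ai, x in zip(a, wh[node] + wh[j])))  # list + list = concatenation
        for j in neighbourhood
    }
    m = max(scores.values())  # subtract the max score for a numerically stable softmax
    exp_scores = {j: math.exp(s - m) for j, s in scores.items()}
    total = sum(exp_scores.values())
    return {j: e / total for j, e in exp_scores.items()}

def gat_update(features, adjacency, node, W, a):
    """Attention-weighted sum of transformed neighbour features (nonlinearity omitted)."""
    alpha = gat_attention(features, adjacency, node, W, a)
    out = [0.0] * len(W)
    for j, weight in alpha.items():
        whj = matvec(W, features[j])
        for d in range(len(W)):
            out[d] += weight * whj[d]
    return out

# Star graph: node 0 is connected to nodes 1 and 2.
adjacency = {0: [1, 2], 1: [0], 2: [0]}
features = {0: [0.0, 0.0], 1: [1.0, 0.0], 2: [-1.0, 0.0]}
W = [[1.0, 0.0], [0.0, 1.0]]
a = [0.0, 0.0, 1.0, 0.0]  # scores depend only on the first feature of neighbour j
alpha = gat_attention(features, adjacency, 0, W, a)
print(max(alpha, key=alpha.get))  # 1
```

With this attention vector, neighbour 1 receives the largest weight and neighbour 2 the smallest. A GCN layer would instead assign each neighbour a fixed coefficient determined by node degrees, regardless of the features.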

Preprocess your graph data effectively to ensure optimal performance of your GNN model.

To get the best performance from your Graph Neural Network (GNN) model, preprocess your graph data carefully. Typical steps include handling missing or noisy values, normalising node features, removing duplicate or invalid edges, deciding whether the graph should be treated as directed or undirected, and encoding node and edge attributes appropriately (for example, one-hot encoding categorical attributes). These steps can significantly affect both the accuracy and the training efficiency of a GNN: because every node's representation is built from its neighbours' features, an error in one node's features or edges propagates to the nodes around it.
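A few of these preprocessing steps are sketched below in plain Python: cleaning an edge list (deduplicating, symmetrising, dropping edges to non-existent nodes, adding self-loops) and imputing and then standardising node features. Mean imputation and z-score standardisation are assumptions chosen for simplicity, and the function names are illustrative; the right choices depend on your data.

```python
import math

def clean_edges(edges, num_nodes, add_self_loops=True):
    """Deduplicate, symmetrise, and drop out-of-range edges; optionally add self-loops."""
    cleaned = set()
    for u, v in edges:
        if 0 <= u < num_nodes and 0 <= v < num_nodes:
            cleaned.add((u, v))
            cleaned.add((v, u))  # treat the graph as undirected
    if add_self_loops:
        cleaned.update((v, v) for v in range(num_nodes))
    return sorted(cleaned)

def impute_and_standardise(features):
    """Fill missing values (None) with the column mean, then z-score each column.

    Returns a new list of rows; the input is not modified.
    """
    num_cols = len(features[0])
    result = [list(row) for row in features]
    for c in range(num_cols):
        observed = [row[c] for row in features if row[c] is not None]
        mean = sum(observed) / len(observed) if observed else 0.0
        for row in result:
            if row[c] is None:
                row[c] = mean
        col = [row[c] for row in result]
        mu = sum(col) / len(col)
        std = math.sqrt(sum((x - mu) ** 2 for x in col) / len(col))
        for row in result:
            # Constant columns carry no signal; map them to 0 instead of dividing by 0.
            row[c] = (row[c] - mu) / std if std > 0 else 0.0
    return result

edges = [(0, 1), (1, 0), (1, 2), (2, 7)]  # a duplicate edge and an out-of-range node id
print(clean_edges(edges, num_nodes=3))
# [(0, 0), (0, 1), (1, 0), (1, 1), (1, 2), (2, 1), (2, 2)]

features = [[1.0, 5.0], [None, 5.0], [3.0, 5.0]]
print(impute_and_standardise(features))
```

When evaluation data must stay strictly unseen, compute the imputation and standardisation statistics on the training data only and reuse them for the validation and test data.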

Regularise your GNN model to prevent overfitting, especially in scenarios with limited training data.

When working with Graph Neural Networks (GNNs), regularise your model to prevent overfitting, especially when training data is limited. Overfitting occurs when a model learns the noise in the training data rather than the underlying patterns, which leads to poor generalisation on unseen data. Regularisation techniques such as dropout, weight decay, and early stopping discourage the model from memorising noise and help it learn patterns that carry over to new data. This matters particularly in common semi-supervised settings such as node classification, where only a small fraction of the nodes may be labelled.
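Dropout and early stopping are simple enough to sketch directly, while weight decay is usually just an optimiser setting (for example, the `weight_decay` argument of PyTorch's `torch.optim.Adam`). The plain-Python sketch below shows inverted dropout applied to a feature vector and an early-stopping tracker with a patience parameter. The function and class names are illustrative, and the validation losses are made-up numbers for demonstration only.

```python
import random

def dropout(vector, p, rng, training=True):
    """Inverted dropout: zero each entry with probability p and rescale survivors by 1/(1-p).

    At evaluation time (training=False) the input is returned unchanged.
    """
    if not training or p == 0.0:
        return list(vector)
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in vector]

class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # in practice, save the model weights here
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience

# Made-up validation losses, for illustration only.
val_losses = [0.90, 0.70, 0.62, 0.63, 0.64, 0.66, 0.50]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        break
print(epoch, stopper.best_epoch)  # 5 2
```

Training stops at epoch 5 after three epochs without improvement, and you would restore the weights saved at epoch 2. The later drop to 0.50 is never reached, which is why the patience value is itself worth tuning.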

Experiment with hyperparameter tuning to improve the performance of your GNN model on specific tasks.

To improve the performance of your Graph Neural Network (GNN) model on a specific task, experiment with hyperparameter tuning. Adjusting settings such as the learning rate, the number of layers, the hidden dimension, and the dropout rate lets you tailor the model to the requirements of your task, improving its accuracy and efficiency. Evaluate each configuration on a validation set and reserve the test set for the final evaluation, so that the reported performance is not inflated by the tuning itself. The number of layers deserves particular attention: stacking many message-passing layers can cause over-smoothing, where node representations become increasingly similar to one another.
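A simple way to organise these experiments is a grid search over a small search space. The sketch assumes you can wrap training and validation in a function that maps a configuration to a validation score (higher is better); `toy_evaluate` below is a hypothetical stand-in built from a made-up formula, not a real model. For larger search spaces, random search is often more efficient than an exhaustive grid.

```python
import itertools

def grid_search(search_space, evaluate):
    """Try every combination in search_space and return (best_score, best_config).

    `evaluate` is a caller-supplied function mapping a config dict to a
    validation score where higher is better (e.g. validation accuracy).
    """
    names = list(search_space)
    best_score, best_config = float("-inf"), None
    for values in itertools.product(*(search_space[n] for n in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:
            best_score, best_config = score, config
    return best_score, best_config

search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "num_layers": [1, 2, 3],
    "dropout": [0.0, 0.5],
}

# Hypothetical stand-in for "train a GNN with this config and return validation
# accuracy". It is a toy formula, not a real model; replace it with actual training.
def toy_evaluate(config):
    score = 1.0
    score -= abs(config["learning_rate"] - 0.01) * 5
    score -= abs(config["num_layers"] - 2) * 0.1
    score -= abs(config["dropout"] - 0.5) * 0.2
    return score

best_score, best_config = grid_search(search_space, toy_evaluate)
print(best_config)  # {'learning_rate': 0.01, 'num_layers': 2, 'dropout': 0.5}
```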

Stay updated with the latest research and developments in the field of Graph Neural Networks to leverage cutting-edge techniques.

To harness the full potential of Graph Neural Networks, stay abreast of the latest research in the field. The landscape of GNNs evolves quickly, and keeping up to date lets you apply new techniques and methods to improve model performance and tackle complex problems more effectively.