Exploring Neural Network Capabilities with PyTorch

Understanding Neural Networks with PyTorch

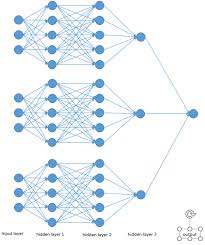

Neural networks have become a cornerstone of modern artificial intelligence, powering advancements in fields ranging from image recognition to natural language processing. Among the various tools available for building and training neural networks, PyTorch stands out as a popular choice for researchers and developers alike.

What is PyTorch?

PyTorch is an open-source machine learning library originally developed by Facebook’s AI Research lab (FAIR), now part of Meta AI. It provides a flexible and efficient platform for building deep learning models, from small feedforward networks to large, complex architectures. PyTorch’s dynamic computational graph and extensive support for GPU acceleration make it particularly well-suited for research and development.

Key Features of PyTorch

- Dynamic Computational Graph: Unlike static computational graphs used by some other frameworks, PyTorch employs dynamic graphs that are created on-the-fly. This allows for greater flexibility and ease of debugging.

- Eager Execution: With eager execution, operations are computed immediately as they are called. This makes the code more intuitive and easier to work with.

- Extensive Library Support: PyTorch integrates seamlessly with other libraries such as NumPy and SciPy, providing a robust ecosystem for scientific computing.

- GPU Acceleration: PyTorch supports CUDA, enabling significant speedups by leveraging GPU computation power.

- TorchScript: TorchScript allows you to transform your PyTorch models into production-ready formats that can be run independently from Python.

Building a Simple Neural Network with PyTorch

The following example demonstrates how to build a simple feedforward neural network using PyTorch:

```python
# Import necessary libraries
import torch
import torch.nn as nn
import torch.optim as optim


# Define the neural network class
class SimpleNN(nn.Module):
    def __init__(self):
        super(SimpleNN, self).__init__()
        self.fc1 = nn.Linear(784, 128)  # 784 input features -> 128 hidden units
        self.fc2 = nn.Linear(128, 64)
        self.fc3 = nn.Linear(64, 10)    # 10 output classes

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = self.fc3(x)  # raw class scores (logits); no activation here
        return x


# Instantiate the model
model = SimpleNN()

# Define loss function and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

# Dummy input data (batch size of 1)
input_data = torch.randn(1, 784)

# Forward pass
output = model(input_data)

# Calculate loss (dummy target labels)
target_labels = torch.tensor([3])
loss = criterion(output, target_labels)

# Backward pass and optimization step
optimizer.zero_grad()  # clear gradients left over from any previous step
loss.backward()
optimizer.step()

print("Output:", output)
print("Loss:", loss.item())
```

This example illustrates the basic steps involved in defining a neural network with the `nn.Module` class in PyTorch and running a single training step. The model consists of three fully connected layers (`fc1`, `fc2`, `fc3`), with ReLU activations applied after the first two. The network takes an input tensor of size 784 (e.g., a flattened 28×28 pixel image) and outputs a tensor of size 10 containing raw class scores (logits) rather than probabilities; `nn.CrossEntropyLoss` applies the softmax normalisation internally when it computes the loss.
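When class probabilities are needed, for example to report a prediction, the ten output scores can be normalised with a softmax. The snippet below is a minimal sketch of that step. The names `final_layer` and `hidden` are hypothetical stand-ins for the last layer of the network and its input, not part of the example above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so repeated runs give the same numbers

# Hypothetical stand-in for the last layer of the example network (64 -> 10).
final_layer = nn.Linear(64, 10)
hidden = torch.randn(1, 64)  # made-up hidden activations, batch size of 1

logits = final_layer(hidden)            # raw, unnormalised class scores
probs = torch.softmax(logits, dim=1)    # normalise across the 10 classes
predicted_class = probs.argmax(dim=1)   # index of the most likely class

print("Probabilities sum to:", probs.sum().item())
print("Predicted class:", predicted_class.item())
```

Softmax preserves the ordering of the scores, so taking `argmax` over the logits directly gives the same predicted class.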

The Future of Deep Learning with PyTorch

The flexibility and user-friendly nature of PyTorch have made it a favourite among researchers in academia and industry. As the field of deep learning continues to evolve rapidly, tools like PyTorch will play an essential role in driving innovation forward.

If you’re looking to dive into deep learning or enhance your current projects with state-of-the-art techniques, exploring what you can achieve with PyTorch is highly recommended. Its extensive documentation and vibrant community support make it an accessible yet powerful tool for anyone interested in artificial intelligence.

Top 6 Advantages of Using PyTorch for Neural Networks

- Flexible and dynamic computational graph

- Eager execution for intuitive coding

- Extensive library support for seamless integration

- Efficient GPU acceleration with CUDA support

- TorchScript for transforming models into production-ready formats

- Robust ecosystem and vibrant community for AI enthusiasts

Challenges in Using PyTorch Neural Networks: Key Drawbacks to Consider

- Steep Learning Curve

- Limited Production Support

- Higher Memory Usage

- Slower Execution Speed

- Less Comprehensive Documentation

- Community Size

Flexible and dynamic computational graph

One of the key advantages of using PyTorch for neural networks is its flexible and dynamic computational graph. Unlike frameworks with static graphs, PyTorch’s dynamic approach allows for on-the-fly creation and modification of computational graphs during runtime. This flexibility enables users to easily debug and experiment with their models, making it a preferred choice for researchers and developers seeking a more intuitive and adaptable deep learning framework.
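As a small illustration of what a dynamic graph permits, the sketch below uses an ordinary Python loop inside `forward`, so the number of layers applied is decided on each call. The class `DynamicDepthNet` and its `num_steps` argument are hypothetical, chosen only for this example.

```python
import torch
import torch.nn as nn


class DynamicDepthNet(nn.Module):
    """Hypothetical model whose depth is chosen on every call."""

    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(8, 8)
        self.head = nn.Linear(8, 1)

    def forward(self, x, num_steps):
        # Plain Python control flow: the graph recorded for autograd
        # contains exactly num_steps applications of self.layer.
        for _ in range(num_steps):
            x = torch.relu(self.layer(x))
        return self.head(x)


model = DynamicDepthNet()
x = torch.randn(4, 8)

out_shallow = model(x, num_steps=1)  # graph with one hidden step
out_deep = model(x, num_steps=3)     # graph with three hidden steps

out_deep.sum().backward()  # gradients flow through all three reused steps
print(out_shallow.shape, out_deep.shape)
print(model.layer.weight.grad.shape)
```

Because the graph is rebuilt on each forward pass, a debugger breakpoint or `print` call placed inside the loop behaves like it would in any other Python code.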

Eager execution for intuitive coding

One of the key advantages of using PyTorch for neural network development is its implementation of eager execution, which allows operations to be computed immediately as they are called. This feature enhances the coding experience by providing a more intuitive workflow, where developers can dynamically interact with their models and inspect results in real-time. Eager execution in PyTorch simplifies the process of debugging and experimenting with neural networks, making it easier for users to understand and modify their code effectively.
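A minimal sketch of eager execution in practice: each line below runs as soon as it is reached, so intermediate values can be printed or inspected directly. The tensor values are arbitrary.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])

c = a * b            # computed immediately: elementwise products 4, 10, 18
print(c)

total = c.sum()      # also computed immediately
print(total.item())  # 32.0

# Gradients are available as soon as backward() returns.
x = torch.tensor(3.0, requires_grad=True)
y = x ** 2
y.backward()
print(x.grad.item())  # 6.0, since dy/dx = 2x at x = 3
```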

Extensive library support for seamless integration

One notable advantage of PyTorch is its extensive library support, enabling seamless integration with other popular libraries such as NumPy and SciPy. This feature allows users to leverage a wide range of tools and resources for scientific computing, data manipulation, and visualisation within the PyTorch ecosystem. By providing a smooth integration experience, PyTorch empowers developers and researchers to combine the strengths of different libraries effectively, enhancing the flexibility and capabilities of their neural network projects.
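The NumPy integration can be sketched as follows. `torch.from_numpy` and `Tensor.numpy()` convert between the two libraries without copying data for CPU tensors, so an in-place change on one side is visible on the other. The array contents are arbitrary.

```python
import numpy as np
import torch

array = np.array([[1.0, 2.0], [3.0, 4.0]])  # float64 NumPy array

tensor = torch.from_numpy(array)  # shares memory with `array`
tensor.mul_(2)                    # in-place update, also visible via `array`
print(array)                      # values are now 2, 4, 6, 8

back = tensor.numpy()             # CPU tensor -> NumPy view, no copy
print(back.dtype)                 # float64
```

If an independent copy is wanted instead, `torch.tensor(array)` or `tensor.clone()` avoids the shared memory.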

Efficient GPU acceleration with CUDA support

PyTorch’s efficient GPU acceleration with CUDA support is a significant advantage for those working on computationally intensive tasks. By leveraging NVIDIA’s CUDA, PyTorch allows for seamless integration with GPU hardware, dramatically speeding up the training and inference processes of neural networks. This capability is particularly beneficial when dealing with large datasets and complex models, as it reduces the time required to achieve results. The ease of switching between CPU and GPU computation in PyTorch ensures that developers can optimise their workflows without extensive code modifications, making it an ideal choice for both research and production environments.
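Switching between CPU and GPU usually comes down to choosing a device once and placing both the model and the data on it. The sketch below falls back to the CPU when no CUDA device is available; the layer and batch sizes are arbitrary.

```python
import torch
import torch.nn as nn

# Pick the GPU when CUDA is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(784, 10).to(device)        # move parameters to the device
batch = torch.randn(32, 784, device=device)  # create the data on the same device

output = model(batch)  # runs on whichever device was selected
print(output.device, output.shape)
```

The model and its inputs must live on the same device; mixing a CPU tensor with a GPU model raises a runtime error.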

TorchScript for transforming models into production-ready formats

One significant advantage of PyTorch is its TorchScript feature, which allows users to transform their neural network models into production-ready formats. By leveraging TorchScript, developers can convert PyTorch models into a serialized representation that can be run independently from Python. This capability streamlines the deployment process, enabling seamless integration of PyTorch models into production environments without the need for a Python runtime. TorchScript enhances the efficiency and scalability of deploying neural network models built in PyTorch, making it a valuable tool for transitioning from research and development to real-world applications.
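A minimal sketch of the TorchScript workflow, assuming a PyTorch version in which `torch.jit.script` is available: the model is compiled, serialized, and loaded back. `TinyNet` is a hypothetical model, and an in-memory buffer stands in for a file on disk. Newer PyTorch releases may steer users toward other export paths, so it is worth checking the documentation for the installed version.

```python
import io

import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical model used only to demonstrate scripting."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return torch.relu(self.fc(x))


model = TinyNet().eval()
scripted = torch.jit.script(model)  # compile the module to TorchScript

buffer = io.BytesIO()               # stand-in for a file such as "model.pt"
torch.jit.save(scripted, buffer)    # serialize code and weights together
buffer.seek(0)
restored = torch.jit.load(buffer)   # load without needing the TinyNet class

x = torch.randn(3, 4)
print(torch.allclose(model(x), restored(x)))
```

The serialized file carries both the weights and the model code, which is what allows it to be loaded from a non-Python runtime.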

Robust ecosystem and vibrant community for AI enthusiasts

One of the standout advantages of using PyTorch for neural networks is its robust ecosystem and vibrant community, which greatly benefit AI enthusiasts. PyTorch boasts an extensive collection of libraries and tools that seamlessly integrate with other scientific computing resources, such as NumPy and SciPy, facilitating a comprehensive environment for developing sophisticated AI models. Furthermore, its active community of developers, researchers, and practitioners continuously contributes to its growth by sharing knowledge, providing support, and collaborating on innovative projects. This collaborative spirit not only accelerates learning and problem-solving but also ensures that PyTorch remains at the forefront of advancements in artificial intelligence.

Steep Learning Curve

One notable drawback of PyTorch is its learning curve, which can pose a challenge for beginners. Although the syntax is plain Python, users must become comfortable with tensors, automatic differentiation, and hand-written training loops before they can build even a modest model. Understanding these mechanisms and applying them correctly often requires a substantial investment of time and effort, particularly for those new to deep learning and neural networks.

Limited Production Support

One notable drawback of PyTorch is its comparatively limited production support. Although PyTorch excels in research and prototyping due to its flexibility and ease of use, it may fall short when it comes to large-scale production deployment. Capabilities such as robust model serving and efficient scaling may be less developed than in frameworks designed specifically for production-level deployment, although tools such as TorchScript narrow the gap. This limitation can pose challenges for organisations looking to move experimental models into real-world applications.

Higher Memory Usage

One drawback of using PyTorch for neural network development is that memory usage can be high. During training, autograd records each operation as it runs and keeps the intermediate results needed for the backward pass, so memory consumption grows with model depth and batch size. This may pose challenges when working with large batches or complex models, and calls for careful memory management, such as reducing the batch size or disabling gradient tracking during inference.
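One common way to limit memory use at inference time is to run the model under `torch.no_grad()`, which stops autograd from recording operations and retaining intermediate results for a backward pass. The sketch below shows the difference on a small, arbitrary model.

```python
import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(784, 128), nn.ReLU(), nn.Linear(128, 10))
model.eval()
batch = torch.randn(64, 784)

# Default behaviour: the output stays attached to the autograd graph.
tracked = model(batch)
print(tracked.requires_grad)    # True

# Inference: no graph is recorded, so intermediates can be freed at once.
with torch.no_grad():
    untracked = model(batch)
print(untracked.requires_grad)  # False
```

The two outputs hold the same values; only the bookkeeping differs.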

Slower Execution Speed

One notable drawback of using PyTorch is that it can execute more slowly than other deep learning frameworks, such as TensorFlow, in certain scenarios. Because eager execution dispatches each operation from Python as it is called, workloads made up of many small operations can incur noticeable overhead. This can affect real-time applications and other tasks where latency is critical. Results vary with the model, the hardware, and the library versions involved, so developers and researchers should benchmark their own workloads before choosing a framework on performance grounds.

Less Comprehensive Documentation

A drawback highlighted by some users is that parts of the documentation are less comprehensive than others. Although the official documentation is extensive, some users have expressed concerns that certain functions and features lack detail or are hard to locate. This can pose challenges for individuals, especially those new to the framework, who need clear guidance and in-depth explanations. More consistent documentation would make PyTorch more accessible to a wider range of developers and researchers.

Community Size

A further drawback sometimes cited is community size. PyTorch was released later than some other prominent deep learning libraries, and for a time its community was comparatively smaller. Where that remains true for a specialised use case, queries may receive slower responses and ready-made solutions may be harder to find, which can delay development or troubleshooting.