Exploring the Power of Neural Networks in Modern Technology

Understanding Neural Networks: The Backbone of Modern AI

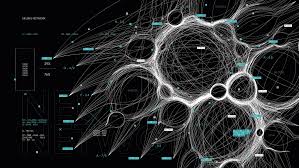

Neural networks are at the heart of many modern artificial intelligence applications, from voice recognition systems to self-driving cars. But what exactly are neural networks, and how do they work?

What Are Neural Networks?

A neural network is a computational model that learns to recognise underlying relationships in a set of data, using a structure loosely inspired by the way the human brain operates. Neural networks are a key technology in machine learning and form the basis of deep learning models.

The concept of neural networks is inspired by the biological neural networks that constitute animal brains. A neural network consists of layers of nodes, or “neurons,” each connected to other nodes in adjacent layers. These connections have weights that adjust as learning proceeds, which helps the network make accurate predictions or classifications.
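To make the idea of weighted connections concrete, here is a minimal Python sketch of a single artificial neuron. It assumes a sigmoid activation, which is one common choice among several. The function names `neuron` and `sigmoid` and the example weights are illustrative and not taken from any particular library.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Two input features with hypothetical, hand-picked weights: z = 0.5 - 0.5 = 0.
print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))  # prints 0.5
```

During training, it is the `weights` and `bias` values that get adjusted; the structure of the computation stays the same.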

How Do Neural Networks Work?

The operation of a neural network can be divided into three main parts: input layer, hidden layers, and output layer.

- Input Layer: This is where the model receives its initial data. Each neuron in this layer represents an attribute or feature from the dataset.

- Hidden Layers: These layers transform the input data through weighted connections: each neuron computes a weighted sum of its inputs, adds a bias, and applies a non-linear activation function. A network can have one or more hidden layers, depending on the complexity of the task.

- Output Layer: This layer produces the final result or prediction based on the processed information from previous layers.
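A minimal sketch of how data flows through these three layers (a forward pass), using plain Python lists. The layer sizes, the weights, the ReLU hidden activation and the linear output are all hypothetical choices for illustration; in a real network the weights are learned during training.

```python
def relu(z):
    """Rectified linear unit: pass positive values through, clip negatives to zero."""
    return max(0.0, z)

def identity(z):
    """No activation, as used for a regression output."""
    return z

def dense(inputs, weights, biases, activation):
    """Fully connected layer: each row of `weights` (plus its bias) feeds one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x):
    # Input layer: three features, passed in unchanged.
    # Hidden layer: 3 inputs -> 2 neurons with ReLU (hypothetical weights).
    hidden = dense(x, [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2]], [0.0, 0.1], relu)
    # Output layer: 2 hidden values -> 1 linear prediction.
    return dense(hidden, [[1.0, -1.0]], [0.05], identity)

print(forward([1.0, 2.0, 3.0]))  # roughly [0.65]
```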

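The network learns by adjusting its weights to minimise a loss function, which measures the error between predicted outputs and actual results. Backpropagation computes how much each weight contributed to that error (the gradient of the loss with respect to the weight), and gradient descent then moves each weight a small step in the direction that reduces the loss.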
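The sketch below shows backpropagation and gradient descent together on a deliberately tiny problem: a network with one hidden layer of tanh units learning the logical OR function under mean squared error. The dataset, hidden-layer size, learning rate and epoch count are illustrative assumptions. Frameworks such as PyTorch and TensorFlow compute these gradients automatically, but writing them out by hand shows the chain rule at work.

```python
import math
import random

# Hypothetical toy dataset: the logical OR of two binary inputs.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def train(epochs=2000, lr=0.1, hidden=3, seed=0):
    """Train a 2-input network with one tanh hidden layer and a linear output,
    using full-batch gradient descent on mean squared error.
    Returns the loss measured at the start of each epoch."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    n = len(DATA)
    losses = []
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        loss = 0.0
        for x, y in DATA:
            # Forward pass.
            h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            y_hat = sum(w2[j] * h[j] for j in range(hidden)) + b2
            err = y_hat - y
            loss += err * err / n
            # Backward pass: apply the chain rule from the loss back to each parameter.
            d_out = 2.0 * err / n  # dLoss/dy_hat
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                d_z = d_out * w2[j] * (1.0 - h[j] ** 2)  # tanh'(z) = 1 - tanh(z)**2
                gb1[j] += d_z
                gw1[j][0] += d_z * x[0]
                gw1[j][1] += d_z * x[1]
        losses.append(loss)
        # Gradient descent: move every parameter a small step against its gradient.
        b2 -= lr * gb2
        for j in range(hidden):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            w1[j][0] -= lr * gw1[j][0]
            w1[j][1] -= lr * gw1[j][1]
    return losses

losses = train()
print(f"loss before training: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

Comparing `losses[0]` with `losses[-1]` shows the error shrinking as the weights are repeatedly adjusted.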

Applications of Neural Networks

Neural networks are used across various industries due to their ability to model complex patterns and relationships within data. Some common applications include:

- Image Recognition: Convolutional Neural Networks (CNNs) excel at identifying objects within images, making them invaluable for facial recognition systems and medical image analysis.

- Natural Language Processing (NLP): Recurrent Neural Networks (RNNs) help machines understand human language by processing sequences of words for tasks like translation and sentiment analysis.

- Aerospace: Neural networks help detect faults in aircraft systems by analysing patterns in sensor data.

- Agriculture: Models trained on historical data help predict crop yields from environmental factors.
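The core operation inside a CNN layer is convolution: a small kernel slides over the image and produces a strong response wherever the local pattern matches it. The sketch below assumes a single-channel image, a stride of 1 and no padding; the hand-written edge-detecting kernel stands in for the values a CNN would learn during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (strictly a cross-correlation:
    the kernel is not flipped). The output shrinks by the kernel size minus one."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-written vertical-edge detector.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # prints [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero column marks exactly where the edge sits, which is the kind of local feature early CNN layers tend to pick up.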

The Future of Neural Networks

The future looks promising as researchers continue to explore ways to make neural networks more efficient and capable. Emerging technologies such as quantum computing may one day enable even more powerful models, although this remains an active area of research.

Ongoing development promises advances across many fields, from AI-assisted healthcare diagnostics to autonomous vehicles that navigate roads safely without human intervention.

As these technologies play a growing role in society, understanding how they work is becoming essential knowledge, not just for technology enthusiasts but for anyone interested in shaping tomorrow’s world.

Top 5 Tips for Optimising Neural Networks: From Architecture to Activation

- Understand the architecture of neural networks before implementing them.

- Regularly update and fine-tune the hyperparameters for better performance.

- Ensure your dataset is well-preprocessed to improve the neural network’s learning process.

- Regularly monitor and analyse the training process to identify any issues or overfitting.

- Experiment with different activation functions and optimisation algorithms to find the best combination for your task.

Understand the architecture of neural networks before implementing them.

Before diving into the implementation of neural networks, it’s crucial to understand their architecture thoroughly. The architecture of a neural network determines how effectively it can model complex patterns and relationships within data. It includes the number of layers and nodes, the type of layers used (such as convolutional or recurrent), and how these layers are interconnected. Each architectural choice impacts the network’s ability to learn from data, its computational efficiency, and ultimately its performance in real-world applications. By gaining a solid understanding of these components, one can design more effective networks tailored to specific tasks, optimise learning processes, and avoid common pitfalls that may arise from poorly structured models.
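One concrete way to reason about an architecture before building it is to count its trainable parameters, since every choice of layer size changes both the model's capacity and its computational cost. This sketch assumes a plain fully connected network; the layer sizes (for example, 784 inputs as from a flattened 28×28 image) are hypothetical.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus biases in a fully connected network.

    layer_sizes lists the neurons per layer, input layer first, e.g. [784, 128, 10].
    Each pair of adjacent layers contributes n_in * n_out weights and n_out biases.
    """
    if len(layer_sizes) < 2:
        raise ValueError("need at least an input and an output layer")
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

print(count_parameters([784, 128, 10]))       # 784*128 + 128 + 128*10 + 10 = 101770
print(count_parameters([784, 512, 256, 10]))  # 535818
```

Adding one wider hidden layer here multiplies the parameter count by more than five, which is worth knowing before committing to a design.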

Regularly update and fine-tune the hyperparameters for better performance.

Regularly updating and fine-tuning the hyperparameters of neural networks is crucial for achieving optimal performance. Hyperparameters, such as learning rate, batch size, and network architecture, are set before training rather than learned, and they significantly affect the model’s ability to learn and make accurate predictions. By experimenting with different hyperparameter values and refining them based on performance on a held-out validation set, developers can improve the neural network’s efficiency and effectiveness on complex tasks. Because the best values depend on the data, tuning is an iterative process that is worth revisiting when the data changes.
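A simple, systematic way to tune hyperparameters is grid search: train once per combination and keep the combination with the lowest loss on held-out validation data. The sketch below uses a toy linear model as a stand-in for a neural network and tunes the learning rate and the number of epochs; the data, grid values and function names are assumptions for illustration. Random search and Bayesian optimisation are common alternatives when the grid grows large.

```python
import itertools

# Hypothetical noiseless data on the line y = 3x + 1, split into training and validation sets.
TRAIN = [(x / 10, 3 * x / 10 + 1) for x in range(10)]
VALID = [(x / 10 + 0.05, 3 * (x / 10 + 0.05) + 1) for x in range(10)]

def fit(lr, epochs):
    """Fit y = w*x + b with full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(TRAIN)
    for _ in range(epochs):
        gw = sum(2 * (w * x + b - y) * x for x, y in TRAIN) / n
        gb = sum(2 * (w * x + b - y) for x, y in TRAIN) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b

def val_loss(w, b):
    """Mean squared error on the validation set, which the model never trains on."""
    return sum((w * x + b - y) ** 2 for x, y in VALID) / len(VALID)

# Grid search: try every combination and keep the lowest validation loss.
grid = {"lr": [0.001, 0.01, 0.1, 0.5], "epochs": [50, 200]}
results = []
for lr, epochs in itertools.product(grid["lr"], grid["epochs"]):
    results.append((val_loss(*fit(lr, epochs)), lr, epochs))
best = min(results)
print(f"best lr={best[1]}, epochs={best[2]}, validation MSE={best[0]:.6f}")
```

Note that a learning rate far above this grid's maximum would make this particular problem diverge, which is why the grid spans several orders of magnitude rather than only large values.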

Ensure your dataset is well-preprocessed to improve the neural network’s learning process.

Ensuring that your dataset is well-preprocessed is crucial for enhancing the learning process of neural networks. Proper preprocessing, such as data cleaning, normalisation, and feature scaling, can significantly improve the network’s ability to extract meaningful patterns and make accurate predictions. By preparing the data effectively before feeding it into the neural network, you can help reduce noise, handle missing values, and ensure that the model learns more efficiently. This attention to data quality and preprocessing steps ultimately contributes to the overall performance and effectiveness of the neural network in solving complex tasks.
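A minimal preprocessing sketch, assuming numeric features with missing values marked as `None`: missing entries are filled with the column mean, and every column is standardised to zero mean and unit variance. The statistics are computed on the training data only and then reused for new data, which avoids leaking information from validation or test sets. The feature values and function names are hypothetical, and each column is assumed to have at least one non-missing value.

```python
import math

def fit_standardiser(rows):
    """Learn per-column mean and (population) standard deviation from the
    TRAINING rows only, ignoring missing values (None)."""
    stats = []
    for col in zip(*rows):
        present = [v for v in col if v is not None]
        mean = sum(present) / len(present)
        var = sum((v - mean) ** 2 for v in present) / len(present)
        # Fall back to 1.0 so a constant column does not cause division by zero.
        stats.append((mean, math.sqrt(var) or 1.0))
    return stats

def transform(rows, stats):
    """Fill missing values with the training mean, then scale to zero mean and unit variance."""
    return [
        [((mean if v is None else v) - mean) / std for v, (mean, std) in zip(row, stats)]
        for row in rows
    ]

# Hypothetical features: [age, income], with one missing income.
train_rows = [[25, 40_000], [35, 60_000], [45, None], [55, 80_000]]
stats = fit_standardiser(train_rows)
print(transform(train_rows, stats))
print(transform([[40, 50_000]], stats))  # new data reuses the training statistics
```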

Regularly monitor and analyse the training process to identify any issues or overfitting.

Regularly monitoring and analysing the training process of neural networks is crucial to ensure optimal performance and accuracy. By tracking performance metrics such as training and validation loss alongside the model’s outputs, one can identify potential issues such as overfitting, where the model performs well on training data but poorly on unseen data. A typical warning sign is validation loss that starts rising while training loss keeps falling. Detecting and addressing these issues promptly, for example with early stopping or regularisation, improves the robustness and generalisation of the neural network, leading to more reliable results in real-world applications.

Experiment with different activation functions and optimisation algorithms to find the best combination for your task.

When working with neural networks, it is crucial to experiment with various activation functions and optimisation algorithms to determine the most effective combination for your specific task. Activation functions play a vital role in introducing non-linearity to the network, while optimisation algorithms help in adjusting the model’s parameters during training. By exploring different options and fine-tuning these components, you can enhance the performance and efficiency of your neural network, ultimately leading to more accurate results and better outcomes for your project.
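The sketch below illustrates both kinds of experiment on a toy problem. It defines three common activation functions, then compares plain gradient descent with gradient descent plus momentum on a hypothetical, deliberately ill-conditioned loss f(x, y) = 0.5(x² + 25y²). The learning rate, momentum coefficient and step count are assumptions; on real networks the best combination has to be found empirically.

```python
import math

# Three common activation functions; each adds non-linearity between layers.
ACTIVATIONS = {
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),
    "tanh": math.tanh,
    "relu": lambda z: max(0.0, z),
}

def loss(p):
    """Toy, ill-conditioned loss: f(x, y) = 0.5 * (x**2 + 25 * y**2)."""
    x, y = p
    return 0.5 * (x * x + 25.0 * y * y)

def grad(p):
    """Analytic gradient of `loss`."""
    x, y = p
    return [x, 25.0 * y]

def gradient_descent(p, lr=0.03, steps=100):
    """Plain gradient descent: step directly against the gradient."""
    for _ in range(steps):
        p = [pi - lr * gi for pi, gi in zip(p, grad(p))]
    return p

def momentum(p, lr=0.03, beta=0.9, steps=100):
    """Gradient descent with momentum: steps follow a decaying running sum of gradients."""
    v = [0.0 for _ in p]
    for _ in range(steps):
        v = [beta * vi + gi for vi, gi in zip(v, grad(p))]
        p = [pi - lr * vi for pi, vi in zip(p, v)]
    return p

for name, f in ACTIVATIONS.items():
    print(name, [round(f(z), 3) for z in (-2.0, 0.0, 2.0)])

start = [1.0, 1.0]
print("gradient descent:", loss(gradient_descent(start)))
print("momentum:        ", loss(momentum(start)))
```

With these particular settings, momentum ends with a lower loss than plain gradient descent after the same 100 steps, because its accumulated velocity keeps making progress along the shallow x direction where plain gradient descent crawls.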