Unlocking the Power of NVIDIA’s Neural Network Technology

NVIDIA Neural Networks: Pioneering the Future of AI

Introduction

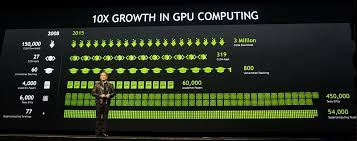

In recent years, NVIDIA has emerged as a leader in the field of artificial intelligence and machine learning, particularly through its advancements in neural networks. Known primarily for its graphics processing units (GPUs), NVIDIA has leveraged this technology to accelerate the development and deployment of neural networks across various industries.

The Role of GPUs in Neural Networks

Neural networks require significant computational power to process large datasets and perform the large numbers of matrix calculations involved in training. NVIDIA’s GPUs are well suited to this task because they can run many operations in parallel. This capability allows for faster training and inference times, making them a strong fit for deep learning applications.

By using GPUs, researchers and developers can train larger models more quickly, which often leads to more accurate predictions and insights. This has been instrumental in advancing fields such as natural language processing, computer vision, and autonomous systems.

NVIDIA’s Contributions to AI Research

NVIDIA has not only provided hardware solutions but also contributed significantly to AI research through software such as CUDA, its parallel computing platform, and cuDNN, its library of GPU-accelerated deep learning primitives. These tools have become essential for optimising neural network performance on NVIDIA hardware.

Furthermore, NVIDIA’s research division continues to push the boundaries of what is possible with neural networks. Its work includes developing new architectures that improve efficiency and accuracy while reducing computational requirements.

Applications Across Industries

The impact of NVIDIA’s neural network technology is evident across numerous industries:

- Healthcare: From medical imaging analysis to drug discovery, neural networks powered by NVIDIA GPUs are transforming healthcare delivery and outcomes.

- Automotive: Autonomous vehicles rely heavily on deep learning models trained on vast amounts of data using NVIDIA’s technology.

- Finance: Financial institutions use neural networks for risk assessment, fraud detection, and algorithmic trading.

- Entertainment: In gaming and film production, AI-driven graphics rendering is improving visual quality and realism.

The Future of Neural Networks with NVIDIA

NVIDIA continues to innovate by developing technologies such as Tensor Cores, specialised processing units designed for the matrix operations at the heart of AI workloads. Platforms like the DGX systems give researchers substantial computing power tailored for deep learning tasks.

The company’s commitment to open-source initiatives helps make advancements in AI accessible to a broader audience, fostering collaboration across the global research community. As a result, NVIDIA remains at the forefront of progress in artificial intelligence across both hardware and software.

Maximising Neural Network Performance: 8 Essential Tips for Using NVIDIA’s Tools and Technologies

- Understand the basics of neural networks before diving into NVIDIA’s implementations.

- Explore GPU-accelerated deep learning frameworks like TensorFlow or PyTorch, which run on NVIDIA GPUs, for efficient training.

- Use NVIDIA’s CUDA platform for parallel computing to leverage the power of GPUs in neural network processing.

- Stay updated with NVIDIA’s latest advancements in hardware and software for neural network applications.

- Consider using NVIDIA’s pre-trained models and libraries to jumpstart your projects and experiments.

- Optimise your neural network models for NVIDIA GPUs to achieve faster inference speeds and better performance.

- Join NVIDIA’s developer community forums and events to learn from experts and collaborate with other enthusiasts.

- Experiment with different configurations and hyperparameters on NVIDIA hardware to find the best setup for your neural network tasks.

Understand the basics of neural networks before diving into NVIDIA’s implementations.

Before delving into NVIDIA’s implementations of neural networks, it is crucial to grasp the fundamental concepts of neural networks themselves. Understanding the basics, such as how neurons and layers function, the significance of activation functions, and the principles of training through backpropagation, provides a solid foundation. This knowledge enables one to appreciate how NVIDIA’s advanced technologies optimise these processes. By familiarising oneself with core concepts first, one can better comprehend how NVIDIA’s GPUs and software frameworks enhance neural network performance, ultimately leading to more effective utilisation of their powerful tools in practical applications.
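As a rough illustration of the concepts named above, the following sketch trains a single neuron with a sigmoid activation by gradient descent, which is backpropagation in its simplest form. The OR-gate data, the function names, and the learning rate are assumptions chosen for this example; it uses only the Python standard library and runs on the CPU.

```python
import math


def sigmoid(z: float) -> float:
    """Activation function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Forward pass of one neuron: weighted sum of inputs, then activation."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


def train_neuron(samples, epochs=2000, lr=0.5):
    """Train one sigmoid neuron on (inputs, target) pairs with squared error."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            y = predict(w, b, x)
            # Backward pass (chain rule) for loss L = 0.5 * (y - target)^2:
            # dL/dz = (y - target) * y * (1 - y)
            grad_z = (y - target) * y * (1.0 - y)
            # Gradient descent step on each weight and on the bias.
            w[0] -= lr * grad_z * x[0]
            w[1] -= lr * grad_z * x[1]
            b -= lr * grad_z
    return w, b


# Illustrative training data: the logical OR of two binary inputs.
OR_GATE = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

w, b = train_neuron(OR_GATE)
for x, target in OR_GATE:
    print(x, "target:", target, "prediction:", round(predict(w, b, x), 3))
```

Real networks stack many such neurons into layers and apply the same forward and backward steps to whole matrices at once, which is the part that GPUs accelerate.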

Explore GPU-accelerated deep learning frameworks like TensorFlow or PyTorch, which run on NVIDIA GPUs, for efficient training.

To train neural networks efficiently, explore GPU-accelerated deep learning frameworks such as TensorFlow or PyTorch. These frameworks are developed outside NVIDIA, but both can run on NVIDIA GPUs through CUDA and cuDNN. Offloading computation to the GPU typically shortens training times for complex deep learning models, which lets researchers and developers iterate on their models more often in the same amount of time.
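A minimal sketch of how a framework is usually pointed at the GPU, assuming PyTorch is the framework in use. `torch.cuda.is_available()` and the `device=` argument are standard PyTorch; the helper names `pick_device` and `matmul_on` are hypothetical and exist only for this example. If PyTorch is not installed, the sketch simply falls back to reporting the CPU.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see an NVIDIA GPU, otherwise "cpu"."""
    try:
        import torch  # assumption: PyTorch is installed (third-party package)
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def matmul_on(device: str):
    """Multiply two random matrices on the given device; return the result shape."""
    import torch  # only called when PyTorch is available

    a = torch.randn(256, 128, device=device)
    b = torch.randn(128, 64, device=device)
    return tuple((a @ b).shape)


device = pick_device()
print("selected device:", device)
```

The same pattern extends to training: models and input batches are moved to the selected device once, and the rest of the training loop stays unchanged whether it runs on a CPU or a GPU.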

Use NVIDIA’s CUDA platform for parallel computing to leverage the power of GPUs in neural network processing.

Using NVIDIA’s CUDA platform is the most direct way to harness GPUs for neural network processing. CUDA, a parallel computing platform and programming model developed by NVIDIA, lets developers accelerate computational tasks by running many operations simultaneously on the GPU. This suits neural networks well, because their workloads are dominated by large matrix and vector operations whose elements can be computed independently. By leveraging CUDA, directly or through a framework built on it, developers can achieve faster training and more efficient inference, which makes it practical to develop more sophisticated AI models.
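The data-parallel idea behind CUDA can be sketched without a GPU. The example below is a CPU emulation in plain Python, not the CUDA API: `saxpy_element` plays the role of the work done by one GPU thread, and `launch` is a hypothetical stand-in for a kernel launch that runs that work once per index. Both names are assumptions made for illustration.

```python
def saxpy_element(i, a, x, y, out):
    """Work for one hypothetical GPU thread: compute a single output element."""
    out[i] = a * x[i] + y[i]


def launch(kernel, indices, *args):
    """CPU stand-in for a kernel launch: run the kernel once per index.

    On a GPU these invocations would be spread across many threads and
    executed simultaneously rather than one after another.
    """
    for i in indices:
        kernel(i, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]

forward = [0.0] * len(x)
launch(saxpy_element, range(len(x)), 2.0, x, y, forward)

# Each element depends only on its own index, so execution order is irrelevant.
backward = [0.0] * len(x)
launch(saxpy_element, reversed(range(len(x))), 2.0, x, y, backward)

print(forward)  # [12.0, 24.0, 36.0, 48.0]
print(forward == backward)  # True
```

Because no element depends on any other, the work can be divided among as many threads as the hardware provides, which is why this class of computation maps well onto GPUs.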

Stay updated with NVIDIA’s latest advancements in hardware and software for neural network applications.

Staying updated with NVIDIA’s latest advancements in hardware and software for neural network applications is important for anyone working in artificial intelligence. NVIDIA regularly releases new GPU architectures and updates to software such as the CUDA platform and the cuDNN library. By keeping abreast of these developments, researchers and developers can take advantage of faster processing and train larger or more capable models. This not only aids in staying competitive but also opens up new possibilities in AI-driven industries, from healthcare to autonomous vehicles.

Consider using NVIDIA’s pre-trained models and libraries to jumpstart your projects and experiments.

When embarking on projects and experiments involving neural networks, leveraging NVIDIA’s pre-trained models and libraries can significantly accelerate development. These models, typically trained on extensive datasets, offer a robust starting point for a wide range of applications, from image recognition to natural language processing. By starting from a pre-trained model, developers can skip most of the time-consuming initial training phase and focus on fine-tuning the model to suit specific needs. Additionally, NVIDIA’s libraries provide optimised tools for deploying these models efficiently. This approach saves valuable time and gives developers access to state-of-the-art AI capabilities with reduced effort.

Optimise your neural network models for NVIDIA GPUs to achieve faster inference speeds and better performance.

Optimising neural network models for NVIDIA GPUs is key to achieving faster inference and better overall performance. NVIDIA’s GPUs are designed to handle parallel processing efficiently, which is essential for the computations involved in neural networks. By tailoring models to the architecture of NVIDIA GPUs, developers can significantly reduce inference latency and increase throughput; such optimisation targets speed and does not by itself make predictions more accurate. It typically relies on the CUDA platform and libraries such as cuDNN, which are designed to maximise GPU performance. As a result, optimised models not only run faster but also use resources more effectively, which suits them to real-time applications across various industries.

Join NVIDIA’s developer community forums and events to learn from experts and collaborate with other enthusiasts.

Joining NVIDIA’s developer community forums and participating in their events can be an invaluable resource for anyone interested in neural networks and AI technology. These platforms offer the opportunity to learn directly from industry experts who are at the forefront of AI advancements. Engaging with this community allows individuals to share insights, seek advice, and collaborate on projects with like-minded enthusiasts from around the world. Whether you’re troubleshooting a technical challenge or exploring new applications of NVIDIA’s technologies, these forums and events provide a supportive environment for growth and innovation. By connecting with others in the field, you can stay updated on the latest trends and developments, ensuring that you remain at the cutting edge of neural network research and application.

Experiment with different configurations and hyperparameters on NVIDIA hardware to find the best setup for your neural network tasks.

When working with neural networks on NVIDIA hardware, it is crucial to experiment with various configurations and hyperparameters to optimise performance for specific tasks. NVIDIA’s GPUs offer robust computational capabilities that allow for extensive experimentation without prohibitive time constraints. By adjusting parameters such as learning rate, batch size, and network architecture, one can observe how these changes impact the model’s accuracy and efficiency. This iterative process is essential in fine-tuning neural networks to achieve optimal results. Leveraging NVIDIA’s advanced tools and frameworks can further aid in automating and streamlining these experiments, ultimately leading to a more effective setup tailored to the unique requirements of your neural network applications.
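The iterative process described above can be sketched as a small grid search. This is a toy example under stated assumptions: the model is a one-parameter linear fit trained with mini-batch gradient descent on synthetic data, and the function names, candidate values, and data are all hypothetical. It runs on the CPU with the standard library only; on real projects each trial would be a full training run on the GPU.

```python
import itertools
import random


def train_and_score(lr, batch_size, epochs=50, seed=0):
    """Fit y = w * x with mini-batch SGD and return the final mean squared error."""
    rng = random.Random(seed)
    # Synthetic data generated from a true weight of 3.0 (illustrative only).
    data = [(k / 10.0, 3.0 * k / 10.0) for k in range(1, 21)]
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of the batch mean squared error with respect to w.
            grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def grid_search(learning_rates, batch_sizes):
    """Try every (learning rate, batch size) pair and return the best one."""
    results = {}
    for lr, bs in itertools.product(learning_rates, batch_sizes):
        results[(lr, bs)] = train_and_score(lr, bs)
    best = min(results, key=results.get)
    return best, results


best, results = grid_search([0.001, 0.01, 0.1], [1, 4, 20])
for (lr, bs), loss in sorted(results.items()):
    print(f"lr={lr:<6} batch_size={bs:<3} loss={loss:.3e}")
print("best configuration:", best)
```

Grid search is the simplest strategy and grows expensive as more hyperparameters are added; random search or dedicated tuning tools follow the same pattern of training, scoring, and comparing configurations.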