Exploring Unsupervised Learning Techniques in Machine Learning

Unsupervised Learning in Machine Learning

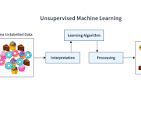

Unsupervised learning is a type of machine learning where the model is trained on unlabelled data without any guidance or supervision. Unlike supervised learning, where the algorithm is provided with labelled data to learn from, unsupervised learning algorithms must find patterns and relationships in the data on their own.

One of the main goals of unsupervised learning is to discover the underlying structure or distribution within the data. This can be achieved through techniques such as clustering, dimensionality reduction, and anomaly detection.

Clustering

Clustering is a common unsupervised learning technique that groups similar data points together based on their characteristics. The algorithm identifies clusters or groups in the data without any prior knowledge of what these clusters might be. K-means clustering and hierarchical clustering are popular algorithms used for this purpose.
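A minimal sketch of both algorithms, assuming scikit-learn and NumPy are available and using a synthetic dataset from `make_blobs` (three groups, chosen purely for illustration). Note that `KMeans` and `AgglomerativeClustering`, as used here, still need the number of clusters up front even though they never see any labels.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs

# Illustrative, unlabelled data: the generated labels are discarded (assumption: 3 groups)
X, _ = make_blobs(n_samples=300, centers=3, random_state=42)

# K-means: repeatedly assigns points to the nearest of k centroids, then moves the centroids
kmeans_labels = KMeans(n_clusters=3, n_init=10, random_state=42).fit_predict(X)

# Hierarchical (agglomerative): starts with single points and repeatedly merges the closest clusters
agglo_labels = AgglomerativeClustering(n_clusters=3, linkage="ward").fit_predict(X)

print("K-means cluster sizes:", np.bincount(kmeans_labels))
print("Agglomerative cluster sizes:", np.bincount(agglo_labels))
```

The cluster IDs are arbitrary, so cluster 0 from one algorithm need not correspond to cluster 0 from the other.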

Dimensionality Reduction

In dimensionality reduction, the goal is to reduce the number of features or variables in a dataset while preserving as much of its important structure as possible. This simplifies the dataset and can improve computational efficiency. Principal Component Analysis (PCA) and t-distributed Stochastic Neighbor Embedding (t-SNE) are commonly used techniques: PCA is a linear projection onto the directions of greatest variance, while t-SNE is a non-linear method used mainly to visualise high-dimensional data in two or three dimensions.
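As a rough illustration of PCA, the sketch below projects the four-feature Iris dataset (its species labels are ignored) down to two components with scikit-learn. The choice of dataset and of two components is an assumption made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Iris has 4 numeric features; only the feature matrix is used, not the labels
X = load_iris().data
X_scaled = StandardScaler().fit_transform(X)  # PCA is variance-driven, so scale first

# Keep the 2 directions of greatest variance
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X_scaled)

print(X.shape, "->", X_2d.shape)
print("Variance explained per component:", pca.explained_variance_ratio_.round(3))
print("Total variance kept:", round(float(pca.explained_variance_ratio_.sum()), 3))
```

`sklearn.manifold.TSNE` exposes a similar `fit_transform` call, but it has no separate `transform` method for unseen points, which is one reason it is rarely used as a preprocessing step.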

Anomaly Detection

Anomaly detection involves identifying unusual patterns or outliers in a dataset that do not conform to expected behaviour. Unsupervised algorithms can detect anomalies by learning what typical data points look like and flagging those that deviate strongly from that pattern. This is particularly useful in fraud detection, cybersecurity, and predictive maintenance applications.
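The text does not name a specific algorithm, so the sketch below swaps in scikit-learn's `IsolationForest` as one example of an unsupervised detector. The data (a normal cloud plus five hand-placed far-away points) and the `contamination` value are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
# 200 "normal" points around the origin plus 5 hand-placed outliers (illustrative data)
normal = rng.normal(loc=0.0, scale=1.0, size=(200, 2))
outliers = np.array([[8.0, 8.0], [-8.0, 8.0], [8.0, -8.0], [-8.0, -8.0], [0.0, 10.0]])
X = np.vstack([normal, outliers])

# contamination is an assumed prior on the fraction of anomalies (5 of 205 here)
detector = IsolationForest(contamination=5 / 205, random_state=0)
pred = detector.fit_predict(X)     # +1 = inlier, -1 = anomaly
scores = detector.score_samples(X)  # lower = more anomalous

flagged = np.where(pred == -1)[0]
print("Flagged indices:", flagged)
```

Isolation forests work on the idea that anomalies are easier to separate from the rest of the data with random splits, so they end up with shorter average path lengths in the trees.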

Unsupervised learning plays a crucial role in various machine learning applications where labelled data may be scarce or expensive to obtain. By allowing algorithms to learn from unlabelled data and discover hidden patterns, unsupervised learning opens up new possibilities for extracting valuable insights from complex datasets.

Top 6 Tips for Effective Unsupervised Learning in Machine Learning

- Choose the right number of clusters for clustering algorithms.

- Preprocess data to handle missing values and scale features appropriately.

- Evaluate clustering results using metrics like silhouette score or Davies-Bouldin index.

- Consider dimensionality reduction techniques like PCA before applying clustering algorithms.

- Understand the assumptions of different clustering algorithms before selecting one for your dataset.

- Explore various clustering algorithms such as K-means, DBSCAN, and hierarchical clustering to find the best fit.

Choose the right number of clusters for clustering algorithms.

When working with clustering algorithms that need the number of clusters in advance, such as K-means, choosing that number carefully is essential for meaningful results. Too few clusters may merge genuinely distinct groups and oversimplify the data, while too many can split natural groups apart and end up modelling noise rather than structure, a form of overfitting. Methods such as the elbow method (plotting the within-cluster sum of squares against the number of clusters and looking for the point where adding clusters stops helping much), the silhouette score, or the gap statistic can help determine an appropriate number based on the data’s characteristics and structure. Used together, they help ensure that the clustering reflects the inherent relationships within the data rather than an arbitrary choice.
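A minimal sketch of the elbow method and the silhouette score side by side, assuming scikit-learn and a synthetic `make_blobs` dataset with four generated groups (the range of k tried is also an arbitrary choice). In practice the inertia values would usually be plotted to find the "elbow".

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Illustrative data with 4 generated groups; the true labels are not used
X, _ = make_blobs(n_samples=500, centers=4, cluster_std=1.0, random_state=7)

inertias, silhouettes = {}, {}
for k in range(2, 9):
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    inertias[k] = model.inertia_  # within-cluster sum of squares, for the elbow plot
    silhouettes[k] = silhouette_score(X, model.labels_)

for k in inertias:
    print(f"k={k}: inertia={inertias[k]:.1f}, silhouette={silhouettes[k]:.3f}")

best_k = max(silhouettes, key=silhouettes.get)
print("k with the highest silhouette score:", best_k)
```

Inertia almost always falls as k grows, which is why the elbow method looks for where the decrease flattens rather than for a minimum; the silhouette score, by contrast, can be maximised directly.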

Preprocess data to handle missing values and scale features appropriately.

In unsupervised learning, a crucial tip is to preprocess data by addressing missing values and scaling features appropriately. Many clustering and dimensionality reduction implementations cannot handle missing values directly, so gaps must be removed or imputed first; the imputation strategy deserves care, since a poor choice can itself introduce bias. Scaling puts features on comparable ranges so that a feature measured in large units does not dominate the distance calculations that algorithms such as K-means and PCA rely on. By following this tip, practitioners can improve the quality of their unsupervised learning models and derive more reliable insights from their data.
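One way to chain both steps is a scikit-learn pipeline. This is a sketch under stated assumptions: the toy array and its units are hypothetical, and median imputation is only one of several reasonable strategies.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: column 0 in metres, column 1 in grams, with missing entries
X = np.array([
    [1.6, 60000.0],
    [1.8, np.nan],
    [np.nan, 72000.0],
    [1.7, 65000.0],
])

# Fill gaps with each column's median, then rescale each column to mean 0 and std 1
preprocess = make_pipeline(SimpleImputer(strategy="median"), StandardScaler())
X_clean = preprocess.fit_transform(X)

print(X_clean.round(3))
print("Column means:", X_clean.mean(axis=0).round(6))
print("Column stds:", X_clean.std(axis=0).round(6))
```

Without the scaling step, the gram-valued column would swamp the metre-valued one in any Euclidean distance. Keeping both steps in one pipeline also means the same fitted imputation and scaling can be reapplied to new rows with `preprocess.transform`.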

Evaluate clustering results using metrics like silhouette score or Davies-Bouldin index.

When working with unsupervised learning algorithms such as clustering, it is essential to evaluate the quality of the results using metrics like the silhouette score or the Davies-Bouldin index. These metrics give quantitative measures of how compact the clusters are and how distinct they are from each other. The silhouette score compares each point’s average distance to the other points in its own cluster (cohesion) with its average distance to the points of the nearest other cluster (separation); it ranges from -1 to 1, with values closer to 1 indicating well-defined clusters. The Davies-Bouldin index measures the average similarity between each cluster and its most similar cluster, where similarity weighs within-cluster scatter against the distance between cluster centres; lower values (the minimum is 0) indicate better clustering. By using these evaluation metrics, machine learning practitioners can judge the effectiveness of their clustering algorithms and make informed decisions about improving model performance.
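To see how the two metrics respond to good and bad clusterings, the sketch below, assuming scikit-learn and synthetic `make_blobs` data, scores a K-means result against a random assignment of the same points to three groups. The random baseline is an illustrative device, not a standard procedure.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import davies_bouldin_score, silhouette_score

X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.8, random_state=1)

# Labels from an actual clustering...
kmeans_labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X)
# ...versus an arbitrary assignment to 3 groups, used as a baseline
random_labels = np.random.default_rng(0).integers(0, 3, size=len(X))

results = {}
for name, labels in [("k-means", kmeans_labels), ("random", random_labels)]:
    sil = silhouette_score(X, labels)      # in [-1, 1], higher is better
    dbi = davies_bouldin_score(X, labels)  # >= 0, lower is better
    results[name] = (sil, dbi)
    print(f"{name}: silhouette={sil:.3f}, Davies-Bouldin={dbi:.3f}")
```

Both metrics need only the data and the labels, so they can be computed without any ground truth.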

Consider dimensionality reduction techniques like PCA before applying clustering algorithms.

Before applying clustering algorithms, it is advisable to consider dimensionality reduction techniques such as Principal Component Analysis (PCA). PCA reduces the number of features while keeping the directions of greatest variance, which can strip out noise and redundant, correlated features and make distance-based clustering more meaningful in high-dimensional data. Because PCA is driven by variance, features should usually be on comparable scales first, and the variance it keeps is not guaranteed to be the variance that separates clusters, so it is worth comparing results with and without the reduction.
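A sketch of PCA followed by K-means on scikit-learn's bundled handwritten digits (64 pixel features), assuming scikit-learn is installed. The 90% variance threshold and k = 10 are illustrative choices. No scaler is used because all pixels already share a 0 to 16 intensity scale. The true digit labels are used only to check the result, which a genuinely unsupervised setting would not allow.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.metrics import adjusted_rand_score
from sklearn.pipeline import make_pipeline

digits = load_digits()  # 1,797 images of 8x8 pixels, flattened to 64 features
X = digits.data

# A float n_components keeps the fewest components explaining at least 90% of the variance
pipeline = make_pipeline(
    PCA(n_components=0.9, svd_solver="full"),
    KMeans(n_clusters=10, n_init=10, random_state=0),
)
labels = pipeline.fit_predict(X)

n_kept = pipeline.named_steps["pca"].n_components_
# Labels are used here only to sanity-check the clustering (illustration, not unsupervised)
ari = adjusted_rand_score(digits.target, labels)
print(f"Components kept: {n_kept} of {X.shape[1]}")
print(f"Adjusted Rand index vs. true digits: {ari:.2f}")
```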

Understand the assumptions of different clustering algorithms before selecting one for your dataset.

Before selecting a clustering algorithm for your dataset, it is essential to understand the assumptions it makes. Each algorithm assumes something about the structure and distribution of the data, and that affects how well it uncovers meaningful patterns. For example, K-means assumes compact, roughly spherical clusters of similar size; DBSCAN assumes that clusters are dense regions separated by sparser ones; and the output of hierarchical clustering depends on the linkage criterion used to measure the distance between clusters. By understanding these assumptions, you can choose an algorithm that matches the characteristics of your dataset and obtain more accurate and valuable insights from the clustering process.
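A small experiment makes these assumptions concrete. The sketch below, assuming scikit-learn, runs K-means and DBSCAN on the synthetic "two moons" dataset, whose clusters are curved and interleaved rather than spherical. The `eps` value is hand-picked for this data, and the generated labels are kept only to score the results with the adjusted Rand index.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score

# Two interleaved half-circles: non-convex clusters (true labels kept only for scoring)
X, y_true = make_moons(n_samples=300, noise=0.05, random_state=0)

# K-means assumes compact, roughly spherical clusters around centroids
km_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# DBSCAN assumes dense regions separated by sparse ones; eps is hand-tuned (assumption)
db_labels = DBSCAN(eps=0.2, min_samples=5).fit_predict(X)

ari_kmeans = adjusted_rand_score(y_true, km_labels)
ari_dbscan = adjusted_rand_score(y_true, db_labels)
print(f"K-means ARI: {ari_kmeans:.2f}")
print(f"DBSCAN ARI:  {ari_dbscan:.2f}")
```

K-means tends to cut the moons with a straight boundary because it can only form convex regions around centroids, whereas DBSCAN follows the dense curved shapes. On well-separated spherical blobs, the comparison can easily go the other way.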

Explore various clustering algorithms such as K-means, DBSCAN, and hierarchical clustering to find the best fit.

It is worth exploring a range of clustering algorithms, including K-means, DBSCAN, and hierarchical clustering, because each has its own strengths and weaknesses. K-means is fast and simple but needs the number of clusters in advance; DBSCAN can find arbitrarily shaped clusters and labels outliers as noise, but it is sensitive to its neighbourhood radius parameter; hierarchical clustering produces a dendrogram showing how clusters merge at every level, but it scales poorly to very large datasets. By experimenting with different approaches and comparing their outcomes, you can identify the most suitable method for your dataset and objectives, and gain a clearer picture of the structure and patterns within unlabelled data.
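A rough comparison loop, assuming scikit-learn and synthetic `make_blobs` data. All parameter values (three clusters, `eps=0.3`, `min_samples=10`) are illustrative guesses, not recommendations. Points that DBSCAN marks as noise (label -1) are excluded before computing the silhouette score.

```python
from sklearn.cluster import DBSCAN, AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

X, _ = make_blobs(n_samples=400, centers=3, cluster_std=0.7, random_state=3)
X = StandardScaler().fit_transform(X)

models = {
    "K-means": KMeans(n_clusters=3, n_init=10, random_state=0),
    "Hierarchical (Ward)": AgglomerativeClustering(n_clusters=3),  # Ward linkage by default
    "DBSCAN": DBSCAN(eps=0.3, min_samples=10),  # parameters are assumptions for this data
}

scores = {}
for name, model in models.items():
    labels = model.fit_predict(X)
    mask = labels != -1  # DBSCAN marks noise as -1; score only clustered points
    n_clusters = len(set(labels[mask].tolist()))
    if n_clusters >= 2:
        scores[name] = silhouette_score(X[mask], labels[mask])
        print(f"{name}: {n_clusters} clusters, silhouette={scores[name]:.3f}")
    else:
        print(f"{name}: fewer than 2 clusters found; silhouette undefined")
```

Dropping noise points flatters DBSCAN somewhat, and the silhouette score generally favours convex clusters. A single metric should therefore be treated as one input to the comparison rather than a verdict.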