Creating a Basic Neural Network Using Keras

The Power of Keras: Building a Simple Neural Network

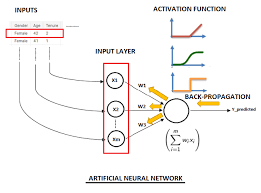

Neural networks have revolutionized the field of artificial intelligence, enabling machines to learn patterns from data using layered models loosely inspired by the human brain. Keras, a high-level neural network API written in Python, has made it easier than ever to build and train them.

Building a Simple Neural Network with Keras

Let’s dive into building a simple neural network using Keras. We’ll start by importing the necessary libraries:

```python
import keras
from keras.models import Sequential
from keras.layers import Dense
```

Next, we’ll define our neural network model:

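```python
model = Sequential()
# Hidden layer: 64 units, ReLU activation, expecting 100 input features
model.add(Dense(units=64, activation='relu', input_dim=100))
# Output layer: 10 units, softmax activation (one probability per class)
model.add(Dense(units=10, activation='softmax'))
```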

In this example, we have created a neural network with two layers: a hidden layer with 64 units and a ReLU activation function, and an output layer with 10 units and a softmax activation function. The `input_dim=100` argument tells the first layer to expect input vectors with 100 features.
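
To make the size of this model concrete, here is a small plain-Python sketch that counts its trainable parameters. It assumes the usual fully connected layout, with one weight per input-unit pair plus one bias per unit. The helper `dense_param_count` is a hypothetical name for illustration and is not part of Keras.

```python
def dense_param_count(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

hidden = dense_param_count(100, 64)   # 100 input features -> 64 hidden units
output = dense_param_count(64, 10)    # 64 hidden units -> 10 output units
total = hidden + output

print(hidden, output, total)  # 6464 650 7114
```

Calling `model.summary()` on the model above should report the same per-layer figures.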

Compiling and Training the Model

Now that we have defined our model, we need to compile it and train it on our data:

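```python
# X_train and y_train are assumed to hold training features and labels prepared beforehand
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=10, batch_size=32)
```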

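Here, we compile the model with the categorical cross-entropy loss function and the Adam optimizer, and we track accuracy as a metric. Note that `categorical_crossentropy` expects one-hot encoded labels. We then fit the model to our training data for 10 epochs with a batch size of 32.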
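
To show what the loss measures, the following plain-Python sketch computes categorical cross-entropy for a single sample. It is an illustration of the formula under simple assumptions, not Keras's implementation. The helpers `one_hot` and `categorical_crossentropy` are hypothetical names, as is the small `eps` clamp that avoids taking the log of zero.

```python
import math

def one_hot(label, num_classes):
    """Return a list with 1.0 at index `label` and 0.0 elsewhere."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Cross-entropy between a one-hot target and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

y_true = one_hot(2, 4)                 # class 2 of 4 -> [0.0, 0.0, 1.0, 0.0]
confident = [0.05, 0.05, 0.85, 0.05]   # most probability on the correct class
unsure = [0.25, 0.25, 0.25, 0.25]      # uniform guess

loss_confident = categorical_crossentropy(y_true, confident)
loss_unsure = categorical_crossentropy(y_true, unsure)
print(loss_confident)  # about 0.163
print(loss_unsure)     # about 1.386
```

The loss shrinks as the model puts more probability on the correct class, which is what training tries to achieve.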

Testing and Evaluating the Model

Once our model is trained, we can evaluate its performance on unseen data:

```python
loss, accuracy = model.evaluate(X_test, y_test)
```

We can now use this model to make predictions on new data and leverage its power for various applications.
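
With a softmax output layer, a call such as `model.predict(X_new)` returns one row of class probabilities per sample. A minimal sketch of turning those rows into class labels follows. The probability values are made up for illustration, and `predict_class` is a hypothetical helper rather than a Keras function.

```python
def predict_class(probabilities):
    """Return the index of the largest probability (the predicted class)."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])

# Hypothetical softmax outputs for three samples and four classes.
batch = [
    [0.10, 0.70, 0.15, 0.05],
    [0.60, 0.20, 0.10, 0.10],
    [0.05, 0.05, 0.10, 0.80],
]

predicted = [predict_class(row) for row in batch]
print(predicted)  # [1, 0, 3]
```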

Conclusion

Keras simplifies the process of building neural networks and allows us to focus on designing powerful models without getting bogged down in implementation details. By leveraging Keras’s capabilities, we can unlock the potential of neural networks for solving complex problems across various domains.

Frequently Asked Questions About Building Simple Neural Networks with Keras

- What is Keras?

- How do I build a simple neural network using Keras?

- What are the key components of a simple neural network in Keras?

- How do I define the layers in a simple neural network using Keras?

- What activation functions can be used in a simple neural network with Keras?

- How do I compile and train a simple neural network model in Keras?

- How can I evaluate the performance of a trained neural network model in Keras?

- What are some common issues or challenges when working with simple neural networks in Keras?

What is Keras?

Keras is a high-level neural networks API written in Python that has gained popularity for its user-friendly interface and powerful capabilities in building and training neural networks. It simplifies the process of creating complex neural network architectures by providing a simple and intuitive way to define and compile models. Keras abstracts away the complexities of low-level implementation details, allowing users to focus on designing and experimenting with different network structures. With Keras, developers can easily prototype, test, and deploy neural network models for various applications, making it a valuable tool for both beginners and experienced practitioners in the field of artificial intelligence.

How do I build a simple neural network using Keras?

To build a basic neural network with Keras, you typically import the necessary libraries, define the model architecture with layers and activation functions, compile the model with an appropriate loss function and optimizer, train it on labelled data, and evaluate its performance on held-out data. Keras provides a high-level API that abstracts away much of the complexity involved, which makes the process accessible even to beginners. Step-by-step tutorials and other online resources can help you grasp the fundamentals and begin your journey into deep learning.
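
The steps above can be sketched end to end as follows. This is a minimal example under stated assumptions: the data is random synthetic noise standing in for a real dataset (so the reported accuracy will be near chance), the train/test split sizes are arbitrary, and the input shape is declared with an `Input` object rather than `input_dim`.

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense, Input
from keras.utils import to_categorical

# Synthetic stand-in data (an assumption): 500 samples, 100 features, 10 classes.
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(500, 100)).astype('float32')
labels = rng.integers(0, 10, size=500)
y = to_categorical(labels, num_classes=10)  # one-hot encoded targets

X_train, y_train = X[:400], y[:400]
X_test, y_test = X[400:], y[400:]

# 1. Define the architecture.
model = Sequential()
model.add(Input(shape=(100,)))
model.add(Dense(units=64, activation='relu'))
model.add(Dense(units=10, activation='softmax'))

# 2. Compile with a loss function, an optimizer, and a metric.
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# 3. Train on the labelled data.
model.fit(X_train, y_train, epochs=3, batch_size=32, verbose=0)

# 4. Evaluate on held-out data.
loss, accuracy = model.evaluate(X_test, y_test, verbose=0)
print(f'test loss={loss:.3f}, test accuracy={accuracy:.3f}')
```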

What are the key components of a simple neural network in Keras?

When exploring the construction of a simple neural network in Keras, it is essential to understand its key components. A basic network typically consists of an input layer, one or more hidden layers, and an output layer. The input layer serves as the entry point for data, while the hidden layers transform this data using learned weights and activation functions. The output layer produces the final results or predictions based on the processed information. Additionally, defining the model architecture, compiling it with an appropriate loss function and optimizer, and training it on a suitable dataset are crucial steps in building an effective neural network in Keras. Understanding these fundamental components is vital for creating robust and efficient neural networks for diverse applications.
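
As an illustration of what a single fully connected layer does with its weights and activation function, here is a plain-Python sketch. It assumes the standard formulation, activation(w · x + b) per unit. The function `dense_forward` and the toy numbers are hypothetical and are not taken from Keras.

```python
def relu(x):
    """Rectified linear unit: pass positives through, clamp negatives to 0."""
    return max(0.0, x)

def dense_forward(inputs, weights, biases, activation):
    """Compute activation(w . x + b) for each unit in one layer.

    `weights` holds one list of per-input weights for every unit.
    """
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.5],   # weights of unit 0
           [1.0, 1.0, -1.0]]   # weights of unit 1
biases = [0.5, -1.0]

result = dense_forward(inputs, weights, biases, relu)
print(result)  # [0.5, 0.0]
```

During training, the weights and biases are the values the optimizer adjusts.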

How do I define the layers in a simple neural network using Keras?

When defining the layers in a simple neural network using Keras, it is essential to follow a structured approach to ensure the model’s effectiveness. In Keras, you can define the layers sequentially by creating a Sequential model and adding layers one by one. Each layer can be added using the `add()` method, specifying parameters such as the number of units, activation function, and input dimensions where necessary. By sequentially stacking layers in Keras, you can easily construct a simple neural network architecture that suits your specific requirements and optimises the model’s performance for various machine learning tasks.
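
As an alternative to repeated `add()` calls, `Sequential` also accepts a list of layers. The sketch below uses that form and declares the input shape with an `Input` object. The layer sizes mirror the example used earlier in this article, and the choice between the two styles is a matter of preference.

```python
from keras.models import Sequential
from keras.layers import Dense, Input

model = Sequential([
    Input(shape=(100,)),                    # 100 input features
    Dense(units=64, activation='relu'),     # hidden layer
    Dense(units=10, activation='softmax'),  # output layer, one unit per class
])

model.summary()  # prints each layer with its output shape and parameter count
```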

What activation functions can be used in a simple neural network with Keras?

In a simple neural network built with Keras, several activation functions can be used to introduce non-linearity and enable the network to learn complex patterns. Commonly used activation functions include ReLU (Rectified Linear Unit), sigmoid, tanh (hyperbolic tangent), and softmax. ReLU is often preferred in hidden layers due to its simplicity and its effectiveness in mitigating the vanishing gradient problem. Sigmoid is commonly used in the output layer for binary classification, tanh is an alternative for hidden layers whose outputs are centred on zero, and softmax is typically applied in the output layer for multi-class classification problems. Choosing the right activation function affects how well and how quickly a network learns, and experimenting with different functions can help optimise model performance for specific tasks.
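
For reference, these functions can be written in a few lines of plain Python. This is a sketch of the standard mathematical definitions, not the code Keras runs internally. Subtracting the maximum inside `softmax` is a common numerical-stability trick that does not change the result.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def softmax(values):
    # Subtract the max first so the exponentials cannot overflow.
    largest = max(values)
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))   # 0.0 3.0
print(sigmoid(0.0))            # 0.5
print(math.tanh(0.0))          # 0.0

probs = softmax([1.0, 2.0, 3.0])
print(probs)                   # roughly [0.090, 0.245, 0.665]
```

Softmax outputs are non-negative and sum to 1, which is why they can be read as class probabilities.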

How do I compile and train a simple neural network model in Keras?

When it comes to compiling and training a simple neural network model in Keras, the process involves a few key steps. Firstly, after defining the model architecture by specifying the layers and activation functions, you need to compile the model using appropriate loss functions and optimizers. This step sets the stage for training the model on your dataset. Subsequently, you can train the model by fitting it to your training data over a specified number of epochs and with a defined batch size. By iteratively adjusting the model’s parameters based on the training data, Keras enables you to efficiently train your neural network model and evaluate its performance for various tasks.
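
To see how epochs and batch size translate into parameter updates, consider this plain-Python sketch. It assumes a dataset of 1,000 samples (an arbitrary figure) and ignores shuffling. The generator `iterate_minibatches` is a hypothetical helper that only illustrates how one pass over the data is split into batches.

```python
import math

def iterate_minibatches(n_samples, batch_size):
    """Yield (start, stop) index pairs covering the data once (one epoch)."""
    for start in range(0, n_samples, batch_size):
        yield start, min(start + batch_size, n_samples)

n_samples, batch_size, epochs = 1000, 32, 10  # n_samples is an assumed dataset size

steps_per_epoch = math.ceil(n_samples / batch_size)
total_updates = steps_per_epoch * epochs
batches = list(iterate_minibatches(n_samples, batch_size))

print(steps_per_epoch, total_updates)  # 32 320
print(batches[-1])                     # (992, 1000): the last batch holds 8 samples
```

Each batch produces one gradient update, so a smaller batch size means more, noisier updates per epoch.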

How can I evaluate the performance of a trained neural network model in Keras?

To evaluate the performance of a trained neural network model in Keras, you can use the `evaluate` method provided by the Keras API. By calling this method on your trained model and passing in your test data, you can obtain metrics such as loss and accuracy that indicate how well your model performs on unseen data. This evaluation step is crucial in assessing the effectiveness of your neural network model and gaining insights into its predictive capabilities. By analysing these metrics, you can make informed decisions about fine-tuning your model or applying it to real-world scenarios with confidence.
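
The accuracy figure reported for a classifier is the fraction of samples whose predicted class matches the true class. A plain-Python sketch of that calculation for one-hot labels follows. The sample values are invented, and `categorical_accuracy` here is a hypothetical stand-alone helper for illustration rather than the Keras metric itself.

```python
def argmax(values):
    """Index of the largest element."""
    return max(range(len(values)), key=lambda i: values[i])

def categorical_accuracy(y_true, y_pred):
    """Fraction of samples whose top predicted class matches the one-hot label."""
    correct = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
y_pred = [
    [0.7, 0.2, 0.1],   # correct (class 0)
    [0.1, 0.8, 0.1],   # correct (class 1)
    [0.3, 0.4, 0.3],   # wrong: predicts class 1, true class is 2
    [0.2, 0.5, 0.3],   # correct (class 1)
]

score = categorical_accuracy(y_true, y_pred)
print(score)  # 0.75
```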

What are some common issues or challenges when working with simple neural networks in Keras?

When working with simple neural networks in Keras, there are several common issues or challenges that practitioners may encounter. One common challenge is overfitting, where the model performs well on the training data but fails to generalise to unseen data. This can be addressed by using techniques such as dropout and early stopping. Another issue is selecting the appropriate architecture and hyperparameters for the neural network, which requires careful experimentation and tuning to achieve optimal performance. Additionally, handling imbalanced datasets, dealing with vanishing or exploding gradients during training, and understanding the interpretability of the model are also key challenges faced when working with simple neural networks in Keras. By being aware of these challenges and employing best practices, practitioners can effectively navigate these hurdles to build robust and reliable neural network models.
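
A minimal sketch of the two overfitting remedies mentioned above, a `Dropout` layer and the `EarlyStopping` callback, is shown below. The data is random synthetic noise used purely as a stand-in (an assumption), and the dropout rate, patience, and validation split are illustrative values rather than recommendations.

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense, Dropout, Input
from keras.callbacks import EarlyStopping

# Synthetic stand-in data (an assumption): 200 samples, 100 features, 10 classes.
rng = np.random.default_rng(seed=0)
X = rng.normal(size=(200, 100)).astype('float32')
y = np.eye(10, dtype='float32')[rng.integers(0, 10, size=200)]  # one-hot labels

model = Sequential([
    Input(shape=(100,)),
    Dense(64, activation='relu'),
    Dropout(0.5),  # each hidden activation is zeroed with probability 0.5 during training
    Dense(10, activation='softmax'),
])
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# Stop once validation loss has not improved for 3 epochs and keep the best weights.
early_stop = EarlyStopping(monitor='val_loss', patience=3, restore_best_weights=True)

history = model.fit(
    X, y,
    epochs=50,
    batch_size=32,
    validation_split=0.2,  # hold out the last 20% of the samples for validation
    callbacks=[early_stop],
    verbose=0,
)
epochs_run = len(history.history['val_loss'])
print('epochs actually run:', epochs_run)
```

Because the labels here are random, validation loss stops improving quickly, so training is likely to end well before the 50-epoch limit.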