Exploring Neural Networks and Deep Learning: Insights from Michael Nielsen

Neural Networks and Deep Learning by Michael Nielsen

Michael Nielsen’s “Neural Networks and Deep Learning” is a widely read introduction to the field that has helped many readers understand modern artificial intelligence. The book explores neural networks in detail, explaining how these computational models, loosely inspired by the human brain, process data and make decisions.

An Introduction to Neural Networks

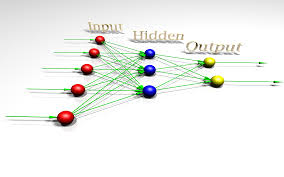

Neural networks are a subset of machine learning models inspired by the biological neural networks that constitute animal brains. They consist of interconnected layers of nodes, or “neurons,” each performing simple computations. These neurons are organised into three main types of layers: input layers, hidden layers, and output layers.
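The layered structure described above can be sketched in a few lines of Python. This is a minimal illustration rather than code from the book: the `feedforward` helper, the 2-3-1 layer sizes, the sigmoid activation, and all weight and bias values are assumptions chosen for the example.

```python
import math

def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def feedforward(layers, inputs):
    """Pass `inputs` through each layer in turn and return the final activations.

    `layers` is a list of (weights, biases) pairs, where weights[j] holds the
    incoming weights of neuron j in that layer.
    """
    activations = inputs
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(neuron_weights, activations)) + b)
            for neuron_weights, b in zip(weights, biases)
        ]
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden_layer = ([[0.5, -0.6], [0.1, 0.8], [-0.3, 0.2]], [0.0, 0.1, -0.1])
output_layer = ([[0.7, -0.4, 0.9]], [0.05])
network = [hidden_layer, output_layer]

output = feedforward(network, [1.0, 0.0])
print(output)  # a single activation between 0 and 1
```

Each neuron only computes a weighted sum and applies one function; the network's expressive power comes from chaining many such neurons across layers.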

The power of neural networks lies in their ability to learn from data. Through a process called training, these networks adjust their weights based on the errors in their predictions, gradually improving their performance on tasks such as image recognition, natural language processing, and even playing complex games.

The Essence of Deep Learning

Deep learning refers to neural networks with multiple hidden layers between the input and output layers. These deep architectures enable models to learn intricate patterns and representations from vast amounts of data.

Michael Nielsen’s book explains how deep learning algorithms can automatically discover features in raw data without manual intervention. This capability has revolutionised various fields, including computer vision, speech recognition, and autonomous driving.

Key Concepts Covered in the Book

- Gradient Descent: A fundamental optimisation technique used to minimise the error function in neural network training.

- Backpropagation: An algorithm for efficiently computing gradients needed for updating weights during training.

- Regularisation: Techniques that reduce overfitting, such as L2 weight decay, which penalises large weights, and dropout, which randomly omits neurons during training.

- Convolutional Neural Networks (CNNs): Specialised architectures designed for processing grid-like data such as images.

- Recurrent Neural Networks (RNNs): Models tailored for sequential data such as time series or text, which the book surveys only briefly.
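Of the concepts listed above, gradient descent is the easiest to show in isolation. The sketch below minimises a one-variable cost, C(x) = (x - 3)^2, by repeatedly stepping against its gradient. The function name `gradient_descent`, the cost, the learning rate, and the step count are all illustrative assumptions; a real network applies the same update to every weight and bias at once.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly move `x` a small step in the direction that lowers the cost."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x

# Illustrative cost C(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2.0 * (x - 3.0), start=0.0)
print(minimum)  # very close to 3.0, the minimiser of the cost
```

The learning rate controls the step size: too small and convergence is slow, too large and the updates can overshoot the minimum.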

The Impact on AI Research and Applications

“Neural Networks and Deep Learning” has become an essential resource for both newcomers and seasoned practitioners in AI. Its clear explanations and practical examples make it easier to grasp complex concepts while providing a solid foundation for further exploration.

Nielsen’s work has also inspired numerous researchers to push the boundaries of what is possible with AI. From healthcare diagnostics to financial forecasting, deep learning applications continue to expand rapidly across various industries.

A Resource for Lifelong Learning

The book is not just a technical manual; it also encourages readers to think critically about the ethical implications of AI technologies. As we integrate these powerful tools into our daily lives, it is crucial to consider issues such as privacy, bias, and accountability.

“Neural Networks and Deep Learning” remains a vital resource for anyone interested in understanding the transformative potential of artificial intelligence. By demystifying complex theories and providing practical insights, Michael Nielsen empowers readers to embark on their own journeys into the fascinating world of neural networks and deep learning.

Exploring Neural Networks and Deep Learning: Key Insights from Michael Nielsen’s Work

- What is the book ‘Neural Networks and Deep Learning’ by Michael Nielsen about?

- How do neural networks mimic the human brain?

- What are the key concepts covered in Michael Nielsen’s book on deep learning?

- How does training work in neural networks?

- What is the difference between shallow and deep neural networks?

- Can you explain backpropagation and its role in deep learning?

- What are some common applications of deep learning in real-world scenarios?

- How has Michael Nielsen’s work influenced the field of artificial intelligence research?

- Are there ethical considerations associated with the use of neural networks and deep learning?

What is the book ‘Neural Networks and Deep Learning’ by Michael Nielsen about?

The book “Neural Networks and Deep Learning” by Michael Nielsen is an insightful guide that delves into the fundamental concepts and advanced techniques of artificial intelligence. It provides a comprehensive exploration of neural networks, detailing how these computational models are inspired by the human brain to process information and make decisions. The book covers essential topics such as gradient descent, backpropagation, regularisation techniques, convolutional neural networks (CNNs), and recurrent neural networks (RNNs). Through clear explanations and practical examples, Nielsen elucidates how deep learning algorithms can automatically discover patterns in data, revolutionising fields like computer vision, speech recognition, and more. This work serves as both an educational resource for newcomers and a valuable reference for experienced practitioners in the AI community.

How do neural networks mimic the human brain?

Neural networks mimic the human brain only loosely: they are built from interconnected layers of artificial neurons that process information in a way reminiscent of biological neural networks. Just as neurons in the brain communicate through electrical signals, each artificial neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function to produce its output signal. Training adjusts these connection weights to improve performance on a task. This lets neural networks recognise patterns, make decisions, and solve problems in a manner that mirrors certain aspects of human cognition, although they remain a great simplification of the brain.
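A single artificial neuron is small enough to write out in full. The following sketch assumes a sigmoid activation; the helper name `neuron_output` and the weight and bias values are hypothetical, picked so that the neuron behaves roughly like a NAND gate (output below 0.5 only when both inputs are 1).

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative parameters: two inputs, each weighted -2, with bias 3.
weights, bias = [-2.0, -2.0], 3.0

for inputs in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(inputs, neuron_output(inputs, weights, bias))
```

Changing a weight or the bias shifts the output smoothly, which is what allows training to improve a network through many small adjustments.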

What are the key concepts covered in Michael Nielsen’s book on deep learning?

In Michael Nielsen’s book on deep learning, several key concepts are covered to provide readers with a comprehensive understanding of neural networks and their applications. Some of the fundamental topics explored include gradient descent, which is a crucial optimisation technique used in training neural networks, backpropagation for efficient computation of gradients, regularisation methods like dropout to prevent overfitting, convolutional neural networks (CNNs) tailored for image processing, and recurrent neural networks (RNNs) designed for sequential data analysis. These concepts serve as building blocks for readers to grasp the complexities of deep learning and its transformative impact on artificial intelligence research and applications.

How does training work in neural networks?

During training, a neural network learns to make accurate predictions by adjusting its weights and biases based on the errors it makes. The network is fed input data, and its output is compared with the desired output, known as the ground truth. Backpropagation computes how much each weight contributed to the error, and gradient descent nudges each weight in the direction that reduces it. Repeating this over many examples minimises the network’s prediction errors and improves its performance on tasks such as image recognition or natural language processing. Michael Nielsen’s book walks through this process in detail, showing how these models learn from data to make informed decisions.
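The predict, compare, adjust loop can be sketched with a single sigmoid neuron learning the logical OR function. This is a toy example under stated assumptions, not the book's code: the `train` and `predict` helpers, the OR dataset, the learning rate, and the epoch count are illustrative, and the update uses the gradient of the cross-entropy cost, for which the error signal is simply the prediction minus the target.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Output of one sigmoid neuron for the given inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train(samples, learning_rate=0.5, epochs=2000):
    """Fit one neuron by stochastic gradient descent on the cross-entropy cost."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = predict(weights, bias, inputs) - target  # prediction vs ground truth
            # Move each parameter against its gradient (error * input for weights).
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Toy dataset: the logical OR of two binary inputs.
or_samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
weights, bias = train(or_samples)

for inputs, target in or_samples:
    print(inputs, target, predict(weights, bias, inputs))
```

A multi-layer network follows the same loop; the only difference is that backpropagation is needed to work out the gradient for weights in the hidden layers.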

What is the difference between shallow and deep neural networks?

A shallow neural network has only one hidden layer (or very few) between its input and output layers, which limits the complexity of the patterns it can learn efficiently. A deep neural network has multiple hidden layers, which let it build up intricate features and representations and make it better suited to large datasets and hard problems. Depth allows a network to capture hierarchical relationships within the data, with early layers detecting simple features and later layers combining them, leading to improved performance in tasks such as image recognition, speech processing, and natural language understanding. Understanding this difference is key to choosing an architecture for a given application.

Can you explain backpropagation and its role in deep learning?

Backpropagation is the algorithm that makes training neural networks practical. After a forward pass produces a prediction, backpropagation propagates error information backwards through the network, layer by layer, applying the chain rule to compute the gradient of the cost with respect to every weight and bias efficiently. An optimiser such as gradient descent then uses those gradients to update the network’s parameters. In deep learning, this combination is what minimises prediction errors and enhances the network’s ability to extract complex patterns from data. Nielsen’s treatment of backpropagation sheds light on its fundamental importance in training deep neural networks.
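To make the backward pass concrete, the sketch below applies backpropagation to the smallest possible network: one input, one hidden sigmoid neuron, and one output sigmoid neuron, with a quadratic cost. The network shape, the parameter values, and the helper names `cost`, `backprop`, and `numerical_gradient` are assumptions made for illustration. The result is compared against a finite-difference estimate, a common sanity check for gradient code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost(params, x, y):
    """Quadratic cost 0.5 * (output - y)^2 for a 1-1-1 sigmoid network."""
    w1, b1, w2, b2 = params
    a1 = sigmoid(w1 * x + b1)
    a2 = sigmoid(w2 * a1 + b2)
    return 0.5 * (a2 - y) ** 2

def backprop(params, x, y):
    """Return the gradient of the cost with respect to [w1, b1, w2, b2]."""
    w1, b1, w2, b2 = params
    # Forward pass: keep the activations, since the backward pass reuses them.
    a1 = sigmoid(w1 * x + b1)
    a2 = sigmoid(w2 * a1 + b2)
    # Backward pass: error at the output, then pushed back to the hidden neuron.
    delta2 = (a2 - y) * a2 * (1.0 - a2)
    delta1 = delta2 * w2 * a1 * (1.0 - a1)
    return [delta1 * x, delta1, delta2 * a1, delta2]

def numerical_gradient(params, x, y, eps=1e-6):
    """Central finite differences, used only to check the analytic gradient."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((cost(up, x, y) - cost(down, x, y)) / (2.0 * eps))
    return grads

params = [0.4, -0.2, 0.7, 0.1]  # illustrative values for w1, b1, w2, b2
analytic = backprop(params, x=1.5, y=1.0)
numeric = numerical_gradient(params, x=1.5, y=1.0)
print(analytic)
print(numeric)
```

Backpropagation obtains all four derivatives from one forward and one backward pass, whereas the finite-difference check needs two cost evaluations per parameter. That difference is why backpropagation scales to networks with millions of weights.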

What are some common applications of deep learning in real-world scenarios?

Deep learning has found widespread use across various industries: computer vision for facial recognition systems, natural language processing for virtual assistants like Siri and Alexa, perception and control in self-driving cars, healthcare for medical image analysis and disease diagnosis, finance for fraud detection and algorithmic trading, and entertainment for recommendation systems on streaming platforms. These real-world applications highlight the versatility of deep learning technologies in solving complex problems and enhancing efficiency in diverse fields.

How has Michael Nielsen’s work influenced the field of artificial intelligence research?

Michael Nielsen’s work on neural networks and deep learning has profoundly influenced the field of artificial intelligence research by providing a comprehensive, accessible resource that demystifies complex concepts and techniques. His book, “Neural Networks and Deep Learning,” offers clear explanations and practical examples, making it easier for both novices and experts to understand and apply these advanced methods. By elucidating key principles such as gradient descent, backpropagation, and regularisation, Nielsen has equipped researchers with the foundational knowledge necessary to push the boundaries of AI. Furthermore, his emphasis on ethical considerations encourages a holistic approach to AI development, ensuring that technological advancements are balanced with responsible practices. As a result, Nielsen’s contributions have not only accelerated academic progress but have also inspired innovation across various industries where AI is increasingly becoming integral.

Are there ethical considerations associated with the use of neural networks and deep learning?

Yes, there are significant ethical considerations associated with the use of neural networks and deep learning, as highlighted by Michael Nielsen. One key concern is the potential for bias in AI models, which can arise from biased training data and lead to unfair or discriminatory outcomes. Additionally, issues surrounding privacy and data security are paramount, given that neural networks often require vast amounts of personal data to function effectively. The opacity of deep learning models, sometimes referred to as the “black box” problem, also raises questions about accountability and transparency in decision-making processes. As these technologies become increasingly integrated into various aspects of society, it is crucial to address these ethical challenges to ensure that AI systems are used responsibly and equitably.